Deep Learning Frameworks and Network Tweaks

- Programming Example: Moving to a DL Framework

- The Problem of Saturated Neurons and Vanishing Gradients

- Initialization and Normalization Techniques to Avoid Saturated Neurons

- Cross-Entropy Loss Function to Mitigate Effect of Saturated Output Neurons

- Different Activation Functions to Avoid Vanishing Gradient in Hidden Layers

- Variations on Gradient Descent to Improve Learning

- Experiment: Tweaking Network and Learning Parameters

- Hyperparameter Tuning and Cross-Validation

- Concluding Remarks on the Path Toward Deep Learning

The director of architecture at NVIDIA Corporation walks through how to use a DL framework and introduces key techniques for getting deeper networks to learn well.

An obvious next step would be to see if adding more layers to our neural networks results in even better accuracy. However, it turns out that getting deeper networks to learn well is a major obstacle, and a number of innovations were needed to overcome it and enable deep learning (DL). We introduce the most important ones later in this chapter, but before doing so, we explain how to use a DL framework. The benefit of using a DL framework is that we do not need to implement all these new techniques from scratch in our neural network. The downside is that we will not deal with the details in as much depth as in previous chapters, but you now have a solid enough foundation to build on. We therefore switch gears a little and focus on the big picture of solving real-world problems using a DL framework. The emergence of DL frameworks played a significant role in making DL practical to adopt in industry as well as in boosting the productivity of academic research.

Programming Example: Moving to a DL Framework

In this programming example, we show how to implement the handwritten digit classification from Chapter 4, “Fully Connected Networks Applied to Multiclass Classification,” using a DL framework. In this book, we have chosen to use the two frameworks TensorFlow and PyTorch. Both of these frameworks are popular and flexible. The TensorFlow versions of the code examples are interspersed throughout the book, and the PyTorch versions are available online on the book Web site.

TensorFlow provides a number of different constructs and enables you to work at different abstraction levels using different application programming interfaces (APIs). In general, to keep things simple, you want to do your work at the highest abstraction level possible because that means that you do not need to implement the low-level details. For the examples we will study, the Keras API is a suitable abstraction level. Keras started as a stand-alone library. It was not tied to TensorFlow and could be used with multiple DL frameworks. However, at this point, Keras is fully supported inside of TensorFlow itself. See Appendix I for information about how to install TensorFlow and what version to use.

Appendix I also contains information about how to install PyTorch if that is your framework of choice. Almost all programming constructs in this book exist both in TensorFlow and in PyTorch. The section “Key Differences between PyTorch and TensorFlow” in Appendix I describes some key differences between the two frameworks. You will find it helpful if you do not want to pick a single framework but want to master both of them.

The frameworks are implemented as Python libraries. That is, we still write our program as a Python program and we just import the framework of choice as a library. We can then use DL functions from the framework in our program. The initialization code for our TensorFlow example is shown in Code Snippet 5-1.

Code Snippet 5-1 Import Statements for Our TensorFlow/Keras Example

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras.utils import to_categorical
import numpy as np
import logging
tf.get_logger().setLevel(logging.ERROR)
tf.random.set_seed(7)
EPOCHS = 20
BATCH_SIZE = 1

As you can see in the code, TensorFlow has its own random seed that needs to be set if we want reproducible results. However, this still does not guarantee that repeated runs produce identical results for all types of networks, so for the remainder of this book, we will not worry about setting the random seeds. The preceding code snippet also sets the logging level to only print out errors while suppressing warnings.
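As a small illustration of what a seed provides, the following sketch uses NumPy's random generator instead of TensorFlow's (an assumption made so that the example runs without TensorFlow installed). Two generators created with the same seed produce the same sequence of draws, which is the kind of repeatability that setting a seed aims for. The variable names are hypothetical.

```python
import numpy as np

# Two independent generators seeded with the same value.
rng_a = np.random.default_rng(7)
rng_b = np.random.default_rng(7)

draws_a = rng_a.uniform(-0.1, 0.1, size=5)
draws_b = rng_b.uniform(-0.1, 0.1, size=5)

# Same seed: the two sequences of "random" numbers are identical.
same_seed_matches = np.array_equal(draws_a, draws_b)

# Different seed: the sequence differs.
rng_c = np.random.default_rng(8)
draws_c = rng_c.uniform(-0.1, 0.1, size=5)
different_seed_matches = np.array_equal(draws_a, draws_c)

print(same_seed_matches, different_seed_matches)
```

In a full program, other sources of randomness (for example, the order in which parallel operations complete) can still cause run-to-run differences, which is consistent with the caveat above.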

We then load and prepare our MNIST dataset. Because MNIST is a common dataset, it is included in Keras. We can access it by a call to keras.datasets.mnist and load_data. The variables train_images and test_images will contain the input values, and the variables train_labels and test_labels will contain the ground truth (Code Snippet 5-2).

Code Snippet 5-2 Load and Prepare the Training and Test Datasets

# Load training and test datasets.
mnist = keras.datasets.mnist
(train_images, train_labels), (test_images,
                               test_labels) = mnist.load_data()

# Standardize the data.
mean = np.mean(train_images)
stddev = np.std(train_images)
train_images = (train_images - mean) / stddev
test_images = (test_images - mean) / stddev

# One-hot encode labels.
train_labels = to_categorical(train_labels, num_classes=10)
test_labels = to_categorical(test_labels, num_classes=10)

Just as before, we need to standardize the input data and one-hot encode the labels. We use the function to_categorical to one-hot encode our labels instead of doing it manually, as we did in our previous example. This serves as an example of how the framework provides functionality to simplify our implementation of common tasks.
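To make the two preparation steps concrete, here is a minimal NumPy-only sketch of what they compute. It uses a hypothetical stand-in for MNIST (a few random 28×28 arrays) so that it runs without downloading anything, and it imitates one-hot encoding by indexing into an identity matrix. It is meant to illustrate the idea, not to reproduce how to_categorical is implemented.

```python
import numpy as np

# Hypothetical stand-in for MNIST: a few random 28x28 "images"
# with pixel values in 0..255, plus integer class labels.
rng = np.random.default_rng(0)
train_images = rng.integers(0, 256, size=(4, 28, 28)).astype(np.float64)
test_images = rng.integers(0, 256, size=(2, 28, 28)).astype(np.float64)
train_labels = np.array([5, 0, 4, 1])

# Standardize using statistics from the training set only,
# and apply the same mean and stddev to the test set.
mean = np.mean(train_images)
stddev = np.std(train_images)
train_std = (train_images - mean) / stddev
test_std = (test_images - mean) / stddev

# One-hot encode: row i of the 10x10 identity matrix is the
# encoding of class i, so indexing by label picks the right row.
one_hot = np.eye(10, dtype=np.float32)[train_labels]

print(one_hot[0])
```

Note that the test data is scaled with the training mean and standard deviation, just as in Code Snippet 5-2, so that both datasets pass through the same transformation.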

We are now ready to create our network. There is no need to define variables for individual neurons because the framework provides functionality to instantiate entire layers of neurons at once. We do need to decide how to initialize the weights, which we do by creating an initializer object, as shown in Code Snippet 5-3. This might seem somewhat convoluted but will come in handy when we want to experiment with different initialization values.

Code Snippet 5-3 Create the Network

# Object used to initialize weights.
initializer = keras.initializers.RandomUniform(
    minval=-0.1, maxval=0.1)

# Create a Sequential model.
# 784 inputs.
# Two Dense (fully connected) layers with 25 and 10 neurons.
# tanh as activation function for hidden layer.
# Logistic (sigmoid) as activation function for output layer.
model = keras.Sequential([
    keras.layers.Flatten(input_shape=(28, 28)),
    keras.layers.Dense(25, activation='tanh',
                       kernel_initializer=initializer,
                       bias_initializer='zeros'),
    keras.layers.Dense(10, activation='sigmoid',
                       kernel_initializer=initializer,
                       bias_initializer='zeros')])

The network is created by instantiating a keras.Sequential object, which implies that we are using the Keras Sequential API. (This is the simplest API, and we use it for the next few chapters until we start creating networks that require a more advanced API.) We pass a list of layers as an argument to the Sequential class. The first layer is a Flatten layer, which does not do computations but only changes the organization of the input. In our case, the inputs are changed from a 28×28 array into an array of 784 elements. If the data had already been organized into a 1D-array, we could have skipped the Flatten layer and simply declared the two Dense layers. If we had done it that way, then we would have needed to pass an input_shape parameter to the first Dense layer because we always have to declare the size of the inputs to the first layer in the network.
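The reorganization performed by the Flatten layer can be sketched with a NumPy reshape. This is an illustration of the effect on the data layout, under the assumption that images arrive as a batch of 28×28 arrays; it is not the framework's own code.

```python
import numpy as np

# A hypothetical batch of two 28x28 images, filled with running
# numbers so that we can see where each pixel ends up.
batch = np.arange(2 * 28 * 28, dtype=np.float32).reshape(2, 28, 28)

# Keep the batch dimension and collapse the rest into one axis.
flat = batch.reshape(batch.shape[0], -1)

print(flat.shape)
```

No values change in this step. Pixel (row 1, column 0) of an image simply becomes element 28 of the flattened vector, because the rows are laid out one after another.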

The second and third layers are both Dense layers, which means they are fully connected. The first argument tells how many neurons each layer should have, and the activation argument tells the type of activation function; we choose tanh and sigmoid, where sigmoid means the logistic sigmoid function. We pass our initializer object to initialize the regular weights using the kernel_initializer argument. The bias weights are initialized to 0 using the bias_initializer argument.
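For readers who want to connect this back to the earlier plain Python implementation, the following NumPy sketch shows roughly what the two Dense layers compute in the forward direction: a matrix multiplication, a bias addition, and an activation function. The helper names dense and logistic are hypothetical, and the input is random data standing in for flattened images.

```python
import numpy as np

rng = np.random.default_rng(7)

def dense(x, weights, bias, activation):
    # One fully connected layer: every output sees every input.
    return activation(x @ weights + bias)

def logistic(z):
    return 1.0 / (1.0 + np.exp(-z))

# Weights drawn uniformly from [-0.1, 0.1), biases set to zero,
# mirroring the initializer choices described in the text.
w_hidden = rng.uniform(-0.1, 0.1, size=(784, 25))
b_hidden = np.zeros(25)
w_out = rng.uniform(-0.1, 0.1, size=(25, 10))
b_out = np.zeros(10)

# Three stand-in input vectors of 784 values each.
x = rng.standard_normal((3, 784))

hidden = dense(x, w_hidden, b_hidden, np.tanh)
outputs = dense(hidden, w_out, b_out, logistic)

print(hidden.shape, outputs.shape)
```

Each row of outputs holds ten values between 0 and 1, one per digit class.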

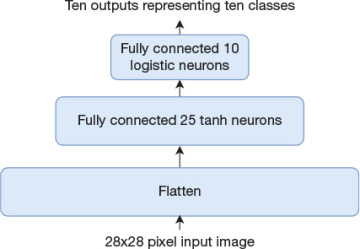

One thing that might seem odd is that we are not saying anything about the number of inputs and outputs for the second and third layers. If you think about it, the number of inputs is fully defined by saying that both layers are fully connected and the fact that we have specified the number of neurons in each layer along with the number of inputs to the first layer of the network. This discussion highlights that using the DL framework enables us to work at a higher abstraction level. In particular, we use layers instead of individual neurons as building blocks, and we need not worry about the details of how individual neurons are connected to each other. This is often reflected in our figures as well, where we work with individual neurons only when we need to explain alternative network topologies. On that note, Figure 5-1 illustrates our digit recognition network at this higher abstraction level. We use rectangular boxes with rounded corners to depict a layer of neurons, as opposed to circles that represent individual neurons.
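The way the number of inputs to each layer follows from the layer sizes can be checked with a little arithmetic. The sketch below counts the weights in each fully connected layer, assuming one bias weight per neuron; the function name dense_param_count is hypothetical.

```python
def dense_param_count(num_inputs, num_neurons):
    # Each neuron has one weight per input plus one bias weight.
    return num_inputs * num_neurons + num_neurons

layer_sizes = [25, 10]   # neurons in the two Dense layers
num_inputs = 28 * 28     # 784 values after flattening

counts = []
for num_neurons in layer_sizes:
    counts.append(dense_param_count(num_inputs, num_neurons))
    # The outputs of this layer are the inputs to the next one.
    num_inputs = num_neurons

total = sum(counts)
print(counts, total)  # [19625, 260] 19885
```

Only the input size of the first layer had to be stated. Everything else follows from the number of neurons in the preceding layer.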

We are now ready to train the network, which is done by Code Snippet 5-4. We first create a keras.optimizers.SGD object. This means that we want to use stochastic gradient descent (SGD) when training the network. Just as with the initializer, this might seem somewhat convoluted, but it provides flexibility to adjust parameters for the learning process, which we explore soon. For now, we just set the learning rate to 0.01 to match what we did in our plain Python example. We then prepare the model for training by calling the model’s compile function. We provide parameters to specify which loss function to use (mean_squared_error, as before), the optimizer that we just created, and the metric that we want reported during training (accuracy).
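As a reminder of what plain SGD does with the learning rate, here is a minimal sketch of a single weight update for one linear neuron and one training example, using squared error as the loss. The function name sgd_step and the numbers are hypothetical, and the sketch ignores everything a real optimizer object handles beyond this basic rule.

```python
import numpy as np

def sgd_step(weights, gradient, learning_rate=0.01):
    # Move the weights a small step against the gradient.
    return weights - learning_rate * gradient

w = np.array([0.5, -0.5])
x = np.array([1.0, 2.0])
y = 1.0

# Squared error for a single linear neuron: (w.x - y)^2
error = w @ x - y
loss_before = error ** 2

# Gradient of the squared error with respect to the weights.
gradient = 2.0 * error * x

w_new = sgd_step(w, gradient)
loss_after = (w_new @ x - y) ** 2

print(w_new, loss_before, loss_after)
```

The loss after the step is slightly lower than before it, which is all that a single update is expected to achieve.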

FIGURE 5-1 Digit classification network using layers as building blocks

Code Snippet 5-4 Train the Network

# Use stochastic gradient descent (SGD) with
# learning rate of 0.01 and no other bells and whistles.
# MSE as loss function and report accuracy during training.
opt = keras.optimizers.SGD(learning_rate=0.01)
model.compile(loss='mean_squared_error', optimizer=opt,
              metrics=['accuracy'])

# Train the model for 20 epochs.
# Shuffle (randomize) order.
# Update weights after each example (batch_size=1).
history = model.fit(train_images, train_labels,
                    validation_data=(test_images, test_labels),
                    epochs=EPOCHS, batch_size=BATCH_SIZE,
                    verbose=2, shuffle=True)

We finally call the fit function for the model, which starts the training process. As the function name indicates, it fits the model to the data. The first two arguments specify the training dataset. The validation_data parameter supplies the test dataset. Our variables EPOCHS and BATCH_SIZE from the initialization code determine how many epochs to train for and what batch size we use. We had set BATCH_SIZE to 1, which means that we update the weights after every single training example, as we did in our plain Python example. We set verbose=2 to get a reasonable amount of information printed during the training process and set shuffle to True to indicate that we want the order of the training data to be randomized during the training process. All in all, these parameters match what we did in our plain Python example.
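The interplay between epochs, batch size, and shuffling can be sketched as a small bookkeeping loop. The code below is an illustration under simplifying assumptions (a hypothetical ten-example dataset and a helper named iterate_minibatches); it does not show how the framework implements fit.

```python
import math
import numpy as np

def iterate_minibatches(num_examples, batch_size, rng):
    # Draw a fresh random order, then hand out consecutive slices of it.
    order = rng.permutation(num_examples)
    for start in range(0, num_examples, batch_size):
        yield order[start:start + batch_size]

rng = np.random.default_rng(7)
num_examples = 10   # hypothetical tiny dataset
epochs = 2
batch_size = 1

updates = 0
every_example_seen_once = True
for epoch in range(epochs):
    seen = []
    for batch_indices in iterate_minibatches(num_examples, batch_size, rng):
        # A weight update based on this batch would happen here.
        updates += 1
        seen.extend(batch_indices.tolist())
    every_example_seen_once &= sorted(seen) == list(range(num_examples))

# With the 60,000 MNIST training images and a batch size of 1,
# each epoch performs one weight update per image.
mnist_updates_per_epoch = math.ceil(60000 / 1)

print(updates, every_example_seen_once, mnist_updates_per_epoch)
```

Shuffling changes only the order in which examples are visited. Each example is still used exactly once per epoch, so 20 epochs with a batch size of 1 amount to 1.2 million weight updates on MNIST.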

Depending on what TensorFlow version you run, you might get a fair number of printouts about opening libraries, detecting the graphics processing unit (GPU), and other issues as the program starts. If you want it less verbose, you can set the environment variable TF_CPP_MIN_LOG_LEVEL to 2. If you are using bash, you can do that with the following command line:

export TF_CPP_MIN_LOG_LEVEL=2

Another option is to add the following code snippet at the top of your program.

import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

The printouts for the first few training epochs are shown here. We stripped out some timestamps to make it more readable.

Epoch 1/20
loss: 0.0535 - acc: 0.6624 - val_loss: 0.0276 - val_acc: 0.8893
Epoch 2/20
loss: 0.0216 - acc: 0.8997 - val_loss: 0.0172 - val_acc: 0.9132
Epoch 3/20
loss: 0.0162 - acc: 0.9155 - val_loss: 0.0145 - val_acc: 0.9249
Epoch 4/20
loss: 0.0142 - acc: 0.9227 - val_loss: 0.0131 - val_acc: 0.9307
Epoch 5/20
loss: 0.0131 - acc: 0.9274 - val_loss: 0.0125 - val_acc: 0.9309
Epoch 6/20
loss: 0.0123 - acc: 0.9313 - val_loss: 0.0121 - val_acc: 0.9329

In the printouts, loss represents the mean squared error (MSE) of the training data, acc represents the prediction accuracy on the training data, val_loss represents the MSE of the test data, and val_acc represents the prediction accuracy on the test data. It is worth noting that we do not get exactly the same learning behavior as was observed in our plain Python model. It is hard to know why without diving into the details of how TensorFlow is implemented. Most likely, the cause is subtle differences in how the initial parameters are randomized and in the random order in which training examples are picked. Another thing worth noting is how simple it was to implement our digit classification application using TensorFlow. Using the TensorFlow framework enables us to study more advanced techniques while still keeping the code size at a manageable level.
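To clarify what the two kinds of numbers in the printout measure, the following NumPy sketch computes an MSE and an accuracy for a hypothetical three-class problem with two examples. It assumes that a prediction counts as correct when the output with the largest value is at the same position as the 1 in the one-hot label.

```python
import numpy as np

# One-hot ground truth and network outputs for two examples.
targets = np.array([[0.0, 0.0, 1.0],
                    [1.0, 0.0, 0.0]])
outputs = np.array([[0.1, 0.2, 0.9],
                    [0.4, 0.5, 0.1]])

# MSE: average of the squared differences over all outputs.
mse = np.mean((targets - outputs) ** 2)

# Accuracy: fraction of examples where the largest output
# is at the same position as the 1 in the one-hot label.
predicted = np.argmax(outputs, axis=1)
actual = np.argmax(targets, axis=1)
accuracy = np.mean(predicted == actual)

print(mse, accuracy)
```

The first example is classified correctly and the second is not, so the accuracy is 0.5 even though both examples contribute to the MSE. This is why the loss and the accuracy can move at different rates during training.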

We now move on to describing some techniques needed to enable learning in deeper networks. After that, we can finally do our first DL experiment in the next chapter.