Managing HDFS Storage

You deal with very large amounts of data in a Hadoop cluster, often ranging over multiple petabytes. However, your cluster is also going to use a lot of that space, sometimes with several terabytes of data arriving daily. This section shows you how to check for used and free space in your cluster, and manage HDFS space quotas. The following section shows how to balance HDFS data across the cluster.

The following subsections show how to

Check HDFS disk usage (used and free space)

Allocate HDFS space quotas

Checking HDFS Disk Usage

Throughout this book, I show how to use various HDFS commands in their appropriate contexts. Here, let’s review some HDFS space- and file-related commands. You can view the usage summary for the HDFS file commands, or for an individual command by appending its name, with the following command:

$ hdfs dfs -usage

Let’s review some of the most useful file system commands that let you check the HDFS usage in your cluster. The following sections explain how to

Use the df command to check free space in HDFS

Use the du command to check space usage

Use the dfsadmin command to check free and used space

Finding Free Space with the df Command

You can check the free space in an HDFS directory with a couple of commands. The -df command shows the configured capacity, available free space and used space of a file system in HDFS.

# hdfs dfs -df
Filesystem                      Size              Used        Available  Use%
hdfs://hadoop01-ns  2068027170816000  1591361508626924  476665662189076   77%

You can specify the -h option with the df command for more readable and concise output:

# hdfs dfs -df -h
Filesystem           Size   Used  Available  Use%
hdfs://hadoop01-ns  1.8 P  1.4 P    433.5 T   77%

The df -h command shows that this cluster’s currently configured HDFS storage is 1.8PB, of which 1.4PB have been used so far.
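The relationship between the raw byte counts and the -h figures can be checked with a short script. The following Python sketch is illustrative only: the function name human_readable is an assumption made here (it is not part of Hadoop), and the sketch assumes that the -h output uses 1024-based prefixes, which is consistent with the sample figures in this section.

```python
def human_readable(num_bytes):
    """Format a byte count with 1024-based prefixes (assumed to mirror df -h)."""
    units = ["", "K", "M", "G", "T", "P", "E"]
    value = float(num_bytes)
    index = 0
    while value >= 1024 and index < len(units) - 1:
        value /= 1024
        index += 1
    return f"{value:.1f} {units[index]}".strip()


# Byte counts copied from the sample df output in this section.
size, used, available = 2068027170816000, 1591361508626924, 476665662189076

print(human_readable(size))             # configured capacity
print(human_readable(used))             # used space
print(human_readable(available))        # free space
print(f"{round(100 * used / size)}%")   # Use% column
```

In this sample, Size minus Used equals Available exactly, so any one of the three columns can be derived from the other two.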

Finding the Used Space with the du Command

You can view the size of the files and directories in a specific directory with the du command. The command will show you the space (in bytes) used by the files that match the file pattern you specify. If it’s a file, you’ll get the length of the file. The usage of the du command is as follows:

$ hdfs dfs -du URI

Here’s an example:

$ hdfs dfs -du /user/alapati
67545099068  67545099068  /user/alapati/.Trash
212190509    328843053    /user/alapati/.staging
26159        78477        /user/alapati/catalyst
3291761247   6275115145   /user/alapati/hive

You can view the used storage in the entire HDFS file system with the following command:

$ hdfs dfs -du /
414032717599186  883032417554123  /data
0                0                /home
0                0                /lost+found
111738           335214           /schema
1829104769791    5401313868645    /tmp
325747953341360  690430023788615  /user

The following command uses the -h option to get more readable output:

$ hdfs dfs -du -h /
353.4 T  733.6 T  /data
0        0        /home
0        0        /lost+found
109.1 K  327.4 K  /schema
2.1 T    6.1 T    /tmp
277.3 T  570.9 T  /user

Note the following about the output of the du -h command shown here:

The first column shows the actual size (raw size) of the files that users have placed in the various HDFS directories.

The second column shows the actual space consumed by those files in HDFS.

The values shown in the second column are much higher than the values shown in the first column. Why? The reason is that the second column’s value is derived by multiplying the size of each file in a directory by its replication factor, to arrive at the actual space occupied by that file.

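As you can see, the value in the second column for /schema is exactly three times the value in the first column, and the value for /tmp is very close to three times the first. This tells you that the files in these two directories have a replication factor of three (or, in the case of /tmp, that nearly all of them do). However, not all files in the /data and the /user directories are being replicated three times. If they were, the second column’s value for these two directories would also be three times the value of the first column.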
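To see the average replication in each directory, divide the second column by the first. The Python sketch below does this for the byte counts from the hdfs dfs -du / output shown earlier; the function name effective_replication is a hypothetical helper introduced here for illustration.

```python
# (raw size, space consumed) in bytes, copied from the "hdfs dfs -du /" output.
du_output = {
    "/data": (414032717599186, 883032417554123),
    "/schema": (111738, 335214),
    "/tmp": (1829104769791, 5401313868645),
    "/user": (325747953341360, 690430023788615),
}


def effective_replication(raw_size, consumed):
    """Return consumed / raw size, or None for an empty directory."""
    if raw_size == 0:
        return None
    return consumed / raw_size


for path, (raw_size, consumed) in du_output.items():
    ratio = effective_replication(raw_size, consumed)
    print(f"{path}: average replication of about {ratio:.2f}")
```

A ratio well below three, as for /data and /user, suggests that many files in the directory were written with a lower replication factor.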

If you sum up the sizes in the second column of the dfs -du command for the root directory, the total should match the value in the Used column of the dfs -df command, provided you run both commands against the same cluster at about the same time. The dfs -df command reports the used space as shown here:

$ hdfs dfs -df -h /
Filesystem             Size     Used  Available  Use%
hdfs://hadoop01-ns  553.8 T  409.3 T    143.1 T   74%
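Summing the second column of the du output by hand is error prone, so it may help to script it. The following Python sketch is one possible approach, not a Hadoop utility: total_consumed is a hypothetical helper, and the sample lines are the byte counts from the hdfs dfs -du / output shown earlier in this section.

```python
def total_consumed(du_lines):
    """Sum the second column (space consumed, in bytes) of 'hdfs dfs -du' lines."""
    total = 0
    for line in du_lines:
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or malformed lines
        total += int(fields[1])
    return total


# Lines copied from the sample "hdfs dfs -du /" output.
sample = [
    "414032717599186 883032417554123 /data",
    "0 0 /home",
    "0 0 /lost+found",
    "111738 335214 /schema",
    "1829104769791 5401313868645 /tmp",
    "325747953341360 690430023788615 /user",
]

print(total_consumed(sample))
```

You could feed the function the captured output of the du command, split into lines, and compare the result with the Used column that the df command reports without the -h option.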

Getting a Summary of Used Space with the du -s Command

The du -s command lets you summarize the used space in all files instead of giving individual file sizes as the du command does.

$ hdfs dfs -du -s -h /
131.0 T  391.1 T  /

How to Check Whether Hadoop Can Use More Storage Space

If you’re under severe space pressure and you can’t add additional DataNodes right away, you can see if there’s additional space left on the local file system that you can commandeer for HDFS use immediately. In Chapter 3, I showed how to configure the HDFS storage directories by specifying multiple disks or volumes with the dfs.data.dir configuration parameter in the hdfs-site.xml file. Here’s an example:

<property>
  <name>dfs.data.dir</name>
  <value>/u01/hadoop/data,/u02/hadoop/data,/u03/hadoop/data</value>
</property>

There’s another configuration parameter you can specify in the same file, named dfs.datanode.du.reserved, which determines how much space Hadoop must leave free on each disk you list as a value for the dfs.data.dir parameter. The dfs.datanode.du.reserved parameter specifies the space, per volume, that is reserved for non-HDFS use on a DataNode. Hadoop can use all the space on a disk beyond this reserved amount, leaving the rest for non-HDFS uses. Here’s how you set the dfs.datanode.du.reserved configuration property:

<property>
  <name>dfs.datanode.du.reserved</name>
  <value>10737418240</value>
  <description>Reserved space in bytes per volume. Always leave this much space free for non-dfs use.</description>
</property>

In this example, the dfs.datanode.du.reserved parameter is set to 10GB (the value is specified in bytes). HDFS will keep storing data in the data directories you assigned to it with the dfs.data.dir parameter until the free space on a volume drops to 10GB. The stock Apache Hadoop default for this parameter is 0, although your distribution or management tool may apply its own default, such as 10GB. You may consider lowering the value for the dfs.datanode.du.reserved parameter if you think there’s plenty of unused space lying around on the local file system on the disks configured for Hadoop’s use.
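Because the property value is given in bytes, it is easy to mistype. The short Python sketch below shows how the 10GB figure converts to the byte value used in the example and how much of a volume that leaves for HDFS. The helper names and the 4TB volume size are assumptions made for illustration; they do not come from any Hadoop API.

```python
GIB = 1024 ** 3  # bytes in one binary gigabyte


def reserved_bytes(gigabytes):
    """Convert a reservation in GB to the byte value the property expects."""
    return gigabytes * GIB


def hdfs_usable_bytes(volume_capacity, reserved):
    """Space HDFS may use on one volume after the reservation is set aside."""
    return max(volume_capacity - reserved, 0)


print(reserved_bytes(10))  # the value to place in dfs.datanode.du.reserved

# Hypothetical 4TB volume, used only to illustrate the subtraction.
volume_capacity = 4 * 1024 ** 4
print(hdfs_usable_bytes(volume_capacity, reserved_bytes(10)))
```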

Storage Statistics from the dfsadmin Command

You’ve seen how you can get storage statistics for the entire cluster, as well as for each individual node, by running the dfsadmin -report command. The Used, Available and Use% statistics from the dfs -df command match the disk storage statistics from the dfsadmin -report command, as shown here:

bash-3.2$ hdfs dfs -df -h /
Filesystem           Size   Used  Available  Use%
hdfs://hadoop01-ns  1.8 P  1.5 P    269.6 T   85%

In the following example, the top portion of the output generated by the dfsadmin -report command shows the cluster’s storage capacity:

bash-3.2$ hdfs dfsadmin -report
Configured Capacity: 2068027170816000 (1.84 PB)
Present Capacity: 2067978866301041 (1.84 PB)
DFS Remaining: 296412818768806 (269.59 TB)
DFS Used: 1771566047532235 (1.57 PB)
DFS Used%: 85.67%
...

You can see that both the dfs -df command and the dfsadmin -report command show identical information regarding the used and available HDFS space.
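If you want to track these figures over time, you can parse the summary lines of the report. The following Python sketch is a minimal example that assumes the "Name: bytes (readable)" layout of the sample output in this section; parse_report_summary is a hypothetical helper, not part of Hadoop.

```python
def parse_report_summary(text):
    """Map each 'Name: <bytes> (...)' line to an integer byte count."""
    stats = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # not a 'Name: value' line
        fields = rest.split()
        if fields and fields[0].isdigit():
            stats[name.strip()] = int(fields[0])
    return stats


# Summary lines copied from the sample dfsadmin -report output.
report = """Configured Capacity: 2068027170816000 (1.84 PB)
Present Capacity: 2067978866301041 (1.84 PB)
DFS Remaining: 296412818768806 (269.59 TB)
DFS Used: 1771566047532235 (1.57 PB)
DFS Used%: 85.67%"""

stats = parse_report_summary(report)
used_pct = 100 * stats["DFS Used"] / stats["Present Capacity"]
print(f"{used_pct:.2f}%")
```

In this sample, DFS Used plus DFS Remaining equals Present Capacity, and dividing DFS Used by Present Capacity reproduces the DFS Used% line.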

Testing for Files

You can check whether a certain HDFS file path exists and whether that path is a directory or a file with the test command:

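$ hdfs dfs -test -e /user/alapati/test

This command uses the -e option to check whether the specified path exists. It prints nothing; instead, it returns an exit status of 0 if the path exists. Use the -d option to check whether the path is a directory, or the -f option to check whether it is a file.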
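Since the test command reports its result through the exit status, it is most useful inside scripts. The Python sketch below wraps the command with the standard subprocess module. The function names are hypothetical, and the run parameter exists only so that the logic can be exercised without a cluster.

```python
import subprocess


def build_test_command(path, flag="-e"):
    """Build the argument list for 'hdfs dfs -test <flag> <path>'."""
    return ["hdfs", "dfs", "-test", flag, path]


def hdfs_path_exists(path, run=subprocess.run):
    """Return True when the test command exits with status 0."""
    result = run(build_test_command(path), check=False)
    return result.returncode == 0


# Example call (requires the hdfs client on the PATH):
# hdfs_path_exists("/user/alapati/test")
```

Passing "-d" or "-f" as the flag to build_test_command produces the corresponding directory or file check.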

You can create a file of zero length with the touchz command, which is similar to the Linux touch command:

$ hdfs dfs -touchz /user/alapati/test3.txt

Allocating HDFS Space Quotas

You can configure quotas on HDFS directories, thus allowing you to limit how much HDFS space users or applications can consume. HDFS space allocations don’t have a direct connection to the space allocations on the underlying Linux file system. Hadoop lets you actually set two types of quotas:

Space quotas: Allow you to set a ceiling on the amount of space used for an individual directory

Name quotas: Let you specify the maximum number of file and directory names in the tree rooted at a directory

The following sections cover

Setting name quotas

Setting space quotas

Checking name and space quotas

Clearing name and space quotas

Setting Name Quotas

You can set a limit on the number of files and directory names in any directory by specifying a name quota. If the user tries to create files or directories that go beyond the specified numerical quota, the file/directory creation will fail. Use the dfsadmin command -setQuota to set the HDFS name quota for a directory. Here’s the syntax for this command:

$ hdfs dfsadmin -setQuota <max_number> <directory>

For example, you can set the maximum number of files that a user can create under a specific directory by doing this:

$ hdfs dfsadmin -setQuota 100000 /user/alapati

This command sets a limit on the number of files user alapati can create under that user’s home directory, which is /user/alapati. If you grant user alapati privileges on other directories, of course, the user can create files in those directories, and those files won’t count against the name quota you set on the user’s home directory. In other words, name quotas (and space quotas) aren’t user specific—rather, they are directory specific.

Setting Space Quotas on HDFS Directories

A space quota sets a hard limit, in bytes, on the disk space that all files in an HDFS directory tree can consume. Once the directory uses up its assigned space quota, users and applications can’t create files in the directory.

You can restrict a user’s space consumption by setting limits on the user’s home directory or on other directories that the user shares with other users. If you don’t set a space quota on a directory, its disk space is unlimited, and it can potentially use the entire HDFS.

Hadoop checks disk space quotas recursively, starting at a given directory and traversing up to the root. The quota on any directory is the minimum of the following:

Directory space quota

Parent space quota

Grandparent space quota

Root space quota
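The rule above can be sketched in a few lines of Python. This is a simplified model of the rule as stated here, not Hadoop’s own implementation: effective_space_quota is a hypothetical helper, the quotas dictionary stands in for whatever quotas you have set, and the paths and sizes are invented for illustration.

```python
import posixpath


def effective_space_quota(path, quotas):
    """Return the smallest quota set on path or any ancestor, or None if unset."""
    limits = []
    current = path
    while True:
        if current in quotas:
            limits.append(quotas[current])
        if current in ("/", ""):
            break  # reached the root (or ran out of path components)
        current = posixpath.dirname(current)
    return min(limits) if limits else None


# Hypothetical quotas in bytes: 100TB on /user and 60GB on /user/alapati.
quotas = {"/user": 100 * 1024 ** 4, "/user/alapati": 60 * 1024 ** 3}

print(effective_space_quota("/user/alapati/hive", quotas))  # limited by /user/alapati
print(effective_space_quota("/user/other", quotas))         # limited by /user
print(effective_space_quota("/tmp", quotas))                # no quota applies
```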

Managing HDFS Space Quotas

It’s important to understand that in HDFS, there must be enough quota space to accommodate an entire block. If the user has, let’s say, 200MB free in their allocated quota, they can’t create a new file, regardless of the file size, if the HDFS block size happens to be 256MB. You can set the HDFS space quota for a directory, such as a user’s home directory, by executing the -setSpaceQuota command. Here’s the syntax:

$ hdfs dfsadmin -setSpaceQuota <N> <dirname>...<dirname>

The space quota you set acts as the ceiling on the total size of all files in a directory. You can set the space quota in bytes (b), megabytes (m), gigabytes (g), terabytes (t) and even petabytes (by specifying p—yes, this is big data!). And here’s an example that shows how to set a user’s space quota to 60GB:

$ hdfs dfsadmin -setSpaceQuota 60G /user/alapati
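To compare a quota against the byte counts that other commands report, it helps to convert the suffixed value to bytes. The Python sketch below is an illustration rather than Hadoop’s own parser: quota_to_bytes is a hypothetical helper, and it assumes that the suffixes are binary multiples, which agrees with the error message later in this section that reports a 30GB quota as 32212254720 bytes.

```python
# Assumed binary multipliers for the quota suffixes.
SUFFIXES = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3, "t": 1024 ** 4, "p": 1024 ** 5}


def quota_to_bytes(text):
    """Convert a quota such as '60G' or '10g' to bytes; plain digits are bytes."""
    text = text.strip().lower()
    if text and text[-1] in SUFFIXES:
        return int(text[:-1]) * SUFFIXES[text[-1]]
    return int(text)


print(quota_to_bytes("60G"))  # the quota set in the example above
print(quota_to_bytes("30g"))  # the quota shown in the error message later on
```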

You can set quotas on multiple directories at a time, as shown here:

$ hdfs dfsadmin -setSpaceQuota 10g /user/alapati /test/alapati

This command sets a quota of 10GB on two directories: /user/alapati and /test/alapati. Both the directories must already exist. If they do not, you can create them with the dfs -mkdir command.

You use the same command, -setSpaceQuota, both for setting the initial limits and modifying them later on. When you create an HDFS directory, by default, it has no space quota until you formally set one.

You can remove the space quota for any directory by issuing the -clrSpaceQuota command, as shown here:

$ hdfs dfsadmin -clrSpaceQuota /user/alapati

If you remove the space quota for a user’s directory, that user can, theoretically speaking, use up all the space you have in HDFS. As with the -setSpaceQuota command, you can specify multiple directories in the -clrSpaceQuota command.

Things to Remember about Hadoop Space Quotas

Both the Hadoop block size you choose and the replication factor in force are key determinants of how a user’s space quota works. Let’s suppose that you grant a new user a space quota of 30GB and the user has more than 500MB still free. If the user tries to load a 500MB file into one of his directories, the attempt will fail with an error similar to the following, even though the directory had a bit over 500MB of free space.

org.apache.hadoop.hdfs.protocol.DSQuotaExceededException: The DiskSpace quota
of /user/alapati is exceeded: quota = 32212254720 B = 30 GB but
diskspace consumed = 32697410316 B = 30.45 GB

In this case, the user had enough free space to load a 500MB file but still received the error indicating that the file system quota for the user was exceeded. This is so because the HDFS block size was 128MB, and so the file needed 4 blocks in this case. Hadoop tried to replicate the file three times since the default replication factor was three, and so it was looking for space for 4 × 3 = 12 blocks, or 128 × 12 = 1,536MB, which clearly was over the space quota left for this user.
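The arithmetic in this example can be reproduced with a few lines of Python. The sketch follows the reasoning given here, in which every block is charged at the full block size for each replica; space_charged_mb is a hypothetical helper written for this illustration.

```python
import math


def space_charged_mb(file_mb, block_mb=128, replication=3):
    """Return (blocks needed, MB charged) when whole blocks are counted per replica."""
    blocks = math.ceil(file_mb / block_mb)
    return blocks, blocks * replication * block_mb


blocks, charged = space_charged_mb(500)
print(blocks, charged)  # blocks for a 500MB file and the quota space it needs
```

Under these assumptions, a 500MB file needs roughly three times its own size in remaining quota, so a directory with only a little more than 500MB left can’t accept it.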

The administrator can reduce the space quota for a directory to a level below the combined disk space usage under a directory tree. In this case, the directory is left in an indefinite quota violation state until the administrator or the user removes some files from the directory. The user can continue to use the files in the overfull directory but, of course, can’t store any new files there since their quota is violated.

Checking Current Space Quotas

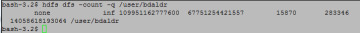

You can check the size of a user’s HDFS space quota by using the dfs -count -q command as shown in Figure 9.7.

Figure 9.7 How to check a user’s current space usage in HDFS against their assigned storage limits

When you issue a dfs -count -q command, you’ll see eight different columns in the output. This is what each of the columns stands for:

QUOTA: Limit on the number of files and directories (the name quota)

REMAINING_QUOTA: Remaining number of files and directories in the quota that can be created by this user

SPACE_QUOTA: Space quota granted to this user

REMAINING_SPACE_QUOTA: Space quota remaining for this user

DIR_COUNT: The number of directories

FILE_COUNT: The number of files

CONTENT_SIZE: The total size of the files

PATH_NAME: The path for the directories

The -count -q command shows that the space quota for user bdaldr is about 100TB. Of this, the user has about 67TB left as free space.
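When you check quotas from a script, it can be convenient to label each field with the column names listed above. The following Python sketch assumes the eight whitespace-separated fields described in this section; parse_count_q is a hypothetical helper, and the sample line is the output that this section shows for /user/alapati after its quota is cleared.

```python
COLUMNS = [
    "QUOTA", "REMAINING_QUOTA", "SPACE_QUOTA", "REMAINING_SPACE_QUOTA",
    "DIR_COUNT", "FILE_COUNT", "CONTENT_SIZE", "PATH_NAME",
]


def parse_count_q(line):
    """Split one line of 'hdfs dfs -count -q' output into labeled fields."""
    fields = line.split(None, len(COLUMNS) - 1)
    if len(fields) != len(COLUMNS):
        raise ValueError(f"expected {len(COLUMNS)} fields, got {len(fields)}")
    return dict(zip(COLUMNS, fields))


row = parse_count_q("none inf none inf 2 0 0 /user/alapati")
for name in COLUMNS:
    print(f"{name}: {row[name]}")
```

The values are kept as strings because the quota columns can hold the words none and inf as well as numbers.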

Clearing Current Space Quotas

You can clear the current space quota for a directory by issuing the -clrSpaceQuota command, whose syntax is shown here:

$ hdfs dfsadmin -clrSpaceQuota <dirname>...<dirname>

Here’s an example showing how to clear the space quota for a user:

$ hdfs dfsadmin -clrSpaceQuota /user/alapati

$ hdfs dfs -count -q /user/alapati

none inf none inf 2 0 0 /user/alapati

With the space quota cleared, the directory is no longer subject to a space limit. By contrast, while a quota is in force and has been used up, the user can still use HDFS to read files but won’t be able to create any files in that user’s HDFS “home” directory. If the user has sufficient privileges, however, she can create files in other HDFS directories. It’s a good practice to set HDFS quotas on a per-user basis. You should also set quotas for data directories on a per-project basis.