4.3 Context Switching

The kernel switches among threads in an effort to share the CPU effectively; this activity is called context switching. When a thread executes for the duration of its time slice or when it blocks because it requires a resource that is currently unavailable, the kernel finds another thread to run and context switches to it. The system can also interrupt the currently executing thread to run a thread triggered by an asynchronous event, such as a device interrupt. Although both scenarios involve switching the execution context of the CPU, switching between threads occurs synchronously with respect to the currently executing thread, whereas servicing interrupts occurs asynchronously with respect to the current thread. In addition, interprocess context switches are classified as voluntary or involuntary. A voluntary context switch occurs when a thread blocks because it requires a resource that is unavailable. An involuntary context switch takes place when a thread executes for the duration of its time slice or when the system identifies a higher-priority thread to run.

Each type of context switching is done through a different interface. Voluntary context switching is initiated with a call to the sleep() routine, whereas an involuntary context switch is forced by direct invocation of the low-level context-switching mechanism embodied in the mi_switch() and setrunnable() routines. Asynchronous event handling is triggered by the underlying hardware and is effectively transparent to the system.

Thread State

Context switching between threads requires that both the kernel- and user-mode context be changed. To simplify this change, the system ensures that all of a thread’s user-mode state is located in the thread structure while most kernel state is kept elsewhere. The following conventions apply to this localization:

- Kernel-mode hardware-execution state: Context switching can take place in only kernel mode. The kernel’s hardware-execution state is defined by the contents of the TSB that is located in the thread structure.

- User-mode hardware-execution state: When execution is in kernel mode, the user-mode state of a thread (such as copies of the program counter, stack pointer, and general registers) always resides on the kernel’s execution stack that is located in the thread structure. The kernel ensures this location of user-mode state by requiring that the system-call and trap handlers save the contents of the user-mode execution context each time that the kernel is entered (see Section 3.1).

- The process structure: The process structure always remains resident in memory.

- Memory resources: Memory resources of a process are effectively described by the contents of the memory-management registers located in the TSB and by the values present in the process and thread structures. As long as the process remains in memory, these values will remain valid and context switches can be done without the associated page tables being saved and restored. However, these values need to be recalculated when the process returns to main memory after being swapped to secondary storage.

Low-Level Context Switching

The localization of a process’s context in that process’s thread structure permits the kernel to perform context switching simply by changing the notion of the current thread structure and (if necessary) process structure, and restoring the context described by the TSB within the thread structure (including the mapping of the virtual address space). Whenever a context switch is required, a call to the mi_switch() routine causes the highest-priority thread to run. The mi_switch() routine first selects the appropriate thread from the scheduling queues, and then resumes the selected thread by loading its context from its TSB.

Voluntary Context Switching

A voluntary context switch occurs whenever a thread must await the availability of a resource or the arrival of an event. Voluntary context switches happen frequently in normal system operation. In FreeBSD, voluntary context switches are initiated through a request to obtain a lock that is already held by another thread or by a call to the sleep() routine. When a thread no longer needs the CPU, it is suspended, awaiting the resource described by a wait channel, and is given a scheduling priority that should be assigned to the thread when that thread is awakened. This priority does not affect the user-level scheduling priority.

When blocking on a lock, the wait channel is usually the address of the lock. When blocking for a resource or an event, the wait channel is typically the address of some data structure that identifies the resource or event for which the thread is waiting. For example, the address of a disk buffer is used while the thread is waiting for the buffer to be filled. When the buffer is filled, threads sleeping on that wait channel will be awakened. In addition to the resource addresses that are used as wait channels, there are some addresses that are used for special purposes:

- When a parent process does a wait system call to collect the termination status of its children, it must wait for one of those children to exit. Since it cannot know which of its children will exit first, and since it can sleep on only a single wait channel, there is a quandary about how to wait for the next of multiple events. The solution is to have the parent sleep on its own process structure. When a child exits, it awakens its parent’s process-structure address rather than its own. Thus, the parent doing the wait will awaken independently of which child process is the first to exit. Once running, it must scan its list of children to determine which one exited.

- When a thread does a sigsuspend system call, it does not want to run until it receives a signal. Thus, it needs to do an interruptible sleep on a wait channel that will never be awakened. By convention, the address of the signal-actions structure is given as the wait channel.

A thread may block for a short, medium, or long period of time depending on the reason that it needs to wait. A short wait occurs when a thread needs to wait for access to a lock that protects a data structure. A medium wait occurs while a thread waits for an event that is expected to occur quickly, such as the arrival of data being read from a disk. A long wait occurs when a thread is waiting for an event that will happen at an indeterminate time in the future, such as input from a user.

Short-term waits arise only from a lock request. Short-term locks include mutexes, reader-writer locks, and read-mostly locks. Details on these locks are given later in this section. A requirement of short-term locks is that they may not be held while blocking for an event as is done for medium- and long-term locks. The only reason that a thread holding a short-term lock is not running is that it has been preempted by a higher-priority thread. It is always possible to get a short-term lock released by running the thread that holds it and any threads that block the thread that holds it.

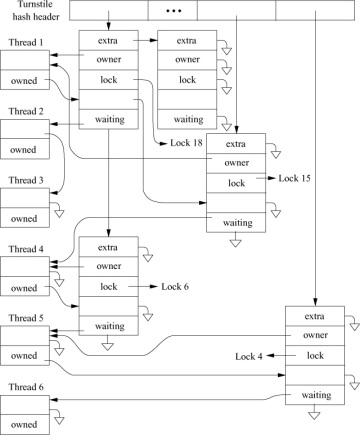

A short-term lock is managed by a turnstile data structure. The turnstile tracks the current owner of the lock and the list of threads waiting for access to the lock. Figure 4.3 shows how turnstiles are used to track blocked threads. Across the top of the figure is a set of hash headers that allow a quick lookup to find a lock with waiting threads. If a turnstile is found, it provides a pointer to the thread that currently owns the lock and lists of the threads that are waiting for exclusive and shared access. The most important use of the turnstile is to quickly find the threads that need to be awakened when a lock is released. In Figure 4.3, Lock 18 is owned by thread 1 and has threads 2 and 3 waiting for exclusive access to it. The turnstile in this example also shows that thread 1 holds contested Lock 15.

Figure 4.3 Turnstile structures for blocked threads.

A turnstile is needed each time a thread blocks on a contested lock. Because blocking is common, it would be prohibitively slow to allocate and free a turnstile every time one is needed. So each thread allocates a turnstile when it is created. As a thread may only be blocked on one lock at any point in time, it will never need more than one turnstile. Turnstiles are allocated by threads rather than being incorporated into each lock structure because there are far more locks in the kernel than there are threads. Allocating one turnstile per thread rather than one per lock results in lower memory utilization in the kernel.

When a thread is about to block on a short-term lock, it provides its turnstile to be used to track the lock. If it is the first thread to block on the lock, its turnstile is used. If it is not the first thread to block, then an earlier thread’s turnstile will be in use to do the tracking. The additional turnstiles that are provided are kept on a free list whose head is the turnstile being used to track the lock. When a thread is awakened and is being made runnable, it is given a turnstile from the free list (which may not be the same one that it originally provided). When the last thread is awakened, the free list will be empty and the turnstile no longer needed, so it can be taken by the awakening thread.

In Figure 4.3, the turnstile tracking Lock 18 was provided by thread 2 as it was the first to block. The spare turnstile that it references was provided by thread 3. If thread 2 is the first to be awakened, it will get the spare turnstile provided by thread 3 and when thread 3 is awakened later, it will be the last to be awakened so will get the no-longer-needed turnstile originally provided by thread 2.
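
The lending scheme can be sketched in user-level C. This is a simplified model under stated assumptions, not the kernel's code: the names toy_turnstile, toy_lock, ts_block(), and ts_wake() are hypothetical, and the hash lookup, locking, and waiter lists are left out so that only the free-list bookkeeping remains.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, stripped-down turnstile: only the free-list bookkeeping. */
struct toy_turnstile {
	struct toy_turnstile *free_next;	/* spares lent by later blockers */
	int waiters;				/* threads blocked on the lock */
};

struct toy_lock {
	struct toy_turnstile *ts;	/* tracking turnstile, NULL if uncontested */
};

/* A thread about to block lends its own turnstile 'mine'. */
static void
ts_block(struct toy_lock *lk, struct toy_turnstile *mine)
{
	if (lk->ts == NULL) {
		/* First blocker: its turnstile tracks the lock. */
		mine->free_next = NULL;
		mine->waiters = 0;
		lk->ts = mine;
	} else {
		/* Later blocker: its turnstile goes on the free list. */
		mine->free_next = lk->ts->free_next;
		lk->ts->free_next = mine;
	}
	lk->ts->waiters++;
}

/* A waiter is awakened; returns the turnstile it leaves with. */
static struct toy_turnstile *
ts_wake(struct toy_lock *lk)
{
	struct toy_turnstile *ts = lk->ts, *spare;

	assert(ts != NULL && ts->waiters > 0);
	if (--ts->waiters == 0) {
		/* Last waiter: free list is empty, take the tracker itself. */
		assert(ts->free_next == NULL);
		lk->ts = NULL;
		return ts;
	}
	spare = ts->free_next;		/* otherwise take any spare */
	ts->free_next = spare->free_next;
	spare->free_next = NULL;
	return spare;
}
```

Replaying the Lock 18 example with this model, thread 2 blocks first and thread 3 second; the first ts_wake() call hands back thread 3's turnstile and the second hands back the one thread 2 originally provided.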

A priority inversion occurs when a thread trying to acquire a short-term lock finds that the thread holding the lock has a lower priority than its own priority. The owner and list of blocked threads tracked by the turnstile allow priority propagation of the higher priority from the thread that is about to be blocked to the thread that holds the lock. With the higher priority, the thread holding the lock will run, and if, in turn, it is blocked by a thread with lower priority, it will propagate its new higher priority to that thread. When finished with its access to the protected data structure, the thread with the temporarily raised priority will release the lock. As part of releasing the lock, the propagated priority will be dropped, which usually results in the thread from which the priority was propagated getting to run and now being able to acquire the lock.
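
A minimal sketch of the propagation walk follows. It assumes a hypothetical toy_thread record in which blocked_on points directly at the owner of the lock a thread is waiting for, and it adopts the convention that a numerically lower value means a higher priority. The real kernel reaches the owner through the turnstile and also restores the lent priority on release, which this model omits.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical thread record; in this sketch a lower number is more urgent. */
struct toy_thread {
	int prio;			/* current (possibly lent) priority */
	struct toy_thread *blocked_on;	/* owner of the awaited lock, or NULL */
};

/*
 * Lend the waiter's priority to the lock owner, and onward to whichever
 * thread is blocking that owner, until a thread is already urgent enough.
 */
static void
propagate_priority(struct toy_thread *waiter)
{
	struct toy_thread *t;

	assert(waiter != NULL);
	for (t = waiter->blocked_on; t != NULL; t = t->blocked_on) {
		if (t->prio <= waiter->prio)
			break;		/* no inversion here: stop */
		t->prio = waiter->prio;	/* raise the holder's priority */
	}
}
```

The loop stops at the first thread whose priority is already at least as high as the waiter's, so a chain with no inversion costs a single comparison.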

Threads blocking on medium-term and long-term locks use sleepqueue data structures rather than turnstiles to track the blocked threads. The sleepqueue data structure is similar to the turnstile except that it does not need to track the owner of the lock. The owner need not be tracked because sleepqueues do not need to provide priority propagation. Threads blocked on medium- and long-term locks cannot proceed until the event for which they are waiting has occurred. Raising their priority will not allow them to run any sooner.

Sleepqueues have many similarities to turnstiles including a hash table to allow quick lookup of contested locks and lists of the threads blocked because they are awaiting shared and exclusive locks. When created, each thread allocates a sleepqueue structure. It provides its sleepqueue structure when it is about to be put to sleep and is returned a sleepqueue structure when it is awakened.

Unlike requests for short-term locks, requests for medium- and long-term locks can specify a time limit. If the event for which the thread is waiting has not occurred within the specified period of time, the thread is awakened with an error return indicating that the time limit expired rather than that the event occurred. Finally, requests for long-term locks can be made interruptible, meaning that the waiting thread will be awakened if a signal is sent to it before the event for which it is waiting has occurred.

Suspending a thread takes the following steps in its operation:

- Prevents events that might cause thread-state transitions. Historically a global scheduling lock was used, but it was a bottleneck. Now each thread uses a lock tied to its current state to protect its per-thread state. For example, when a thread is on a run queue, the lock for that run queue is used; when the thread is blocked on a turnstile, the turnstile’s lock is used; when a thread is blocked on a sleep queue, the lock for the wait channel’s hash chain is used.

- Records the wait channel in the thread structure and hashes the wait-channel value to check for an existing turnstile or sleepqueue for the wait-channel. If one exists, links the thread to it and saves the turnstile or sleepqueue structure provided by the thread. Otherwise places the turnstile or sleepqueue onto the hash chain and links the thread into it.

- For threads being placed on a turnstile, if the current thread’s priority is higher than the priority of the thread currently holding the lock, propagates the current thread’s priority to the thread currently holding the lock. For threads being placed on a sleepqueue, sets the thread’s priority to the priority that the thread will have when the thread is awakened and sets its SLEEPING flag.

- For threads being placed on a turnstile, sorts the thread into the list of waiting threads such that the highest-priority thread appears first in the list. For threads being placed on a sleepqueue, places the thread at the end of the list of threads waiting for that wait channel.

- Calls mi_switch() to request that a new thread be scheduled; the associated mutex is released as part of switching to the other thread.

A sleeping thread is not selected to execute until it is removed from a turnstile or sleepqueue and is marked runnable. This operation is done either implicitly as part of a lock being released, or explicitly by a call to the wakeup() routine to signal that an event has occurred or that a resource is available. When wakeup() is invoked, it is given a wait channel that it uses to find the corresponding sleepqueue (using a hashed lookup). It awakens all threads sleeping on that wait channel. All threads waiting for the resource are awakened to ensure that none are inadvertently left sleeping. If only one thread were awakened, it might not request the resource on which it was sleeping. If it does not use and release the resource, any other threads waiting for that resource will be left sleeping forever. A thread that needs an empty disk buffer in which to write data is an example of a thread that may not request the resource on which it was sleeping. Such a thread can use any available buffer. If none is available, it will try to create one by requesting that a dirty buffer be written to disk and then waiting for the I/O to complete. When the I/O finishes, the thread will awaken and will check for an empty buffer. If several are available, it may not use the one that it cleaned, leaving any other threads to sleep forever as they wait for the cleaned buffer.

In instances where a thread will always use a resource when it becomes available, wakeup_one() can be used instead of wakeup(). The wakeup_one() routine wakes up only the first thread that it finds waiting for a resource as it will have been asleep the longest. The assumption is that when the awakened thread is done with the resource, it will issue another wakeup_one() to notify the next waiting thread that the resource is available. The succession of wakeup_one() calls will continue until all threads waiting for the resource have been awakened and had a chance to use it. Because the threads are ordered from longest to shortest waiting, that is the order in which they will be awakened and gain access to the resource.
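
The difference between the two wakeup styles can be illustrated with a small user-level model. Everything here is an assumption made for illustration: toy_sleep(), toy_wakeup(), and toy_wakeup_one() are hypothetical stand-ins, the table size is arbitrary, and the scheduling, locking, and priority handling that the kernel routines do are left out.

```c
#include <assert.h>
#include <limits.h>
#include <stddef.h>
#include <stdint.h>

#define SQ_BUCKETS 8	/* hypothetical hash-table size */

struct toy_thread {
	const void *wchan;		/* wait channel, NULL when runnable */
	struct toy_thread *next;	/* next sleeper on the same hash chain */
};

static struct toy_thread *sq_head[SQ_BUCKETS];

static size_t
sq_hash(const void *wchan)
{
	return ((uintptr_t)wchan >> 4) % SQ_BUCKETS;
}

/* Append at the tail so the longest sleeper stays at the front. */
static void
toy_sleep(struct toy_thread *td, const void *wchan)
{
	struct toy_thread **pp = &sq_head[sq_hash(wchan)];

	assert(wchan != NULL);
	td->wchan = wchan;
	td->next = NULL;
	while (*pp != NULL)
		pp = &(*pp)->next;
	*pp = td;
}

/* Wake up to 'limit' sleepers on wchan, oldest first; returns the count. */
static int
toy_wakeup_n(const void *wchan, int limit)
{
	struct toy_thread **pp = &sq_head[sq_hash(wchan)];
	int n = 0;

	while (*pp != NULL && n < limit) {
		struct toy_thread *td = *pp;

		if (td->wchan == wchan) {
			*pp = td->next;		/* unlink: now runnable */
			td->wchan = NULL;
			td->next = NULL;
			n++;
		} else {
			pp = &td->next;		/* other channel, same bucket */
		}
	}
	return n;
}

static int toy_wakeup(const void *wchan) { return toy_wakeup_n(wchan, INT_MAX); }
static int toy_wakeup_one(const void *wchan) { return toy_wakeup_n(wchan, 1); }
```

Because sleepers are appended at the tail, toy_wakeup_one() always releases the thread that has been asleep the longest, which matches the ordering described above.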

When releasing a turnstile lock, all waiting threads are released. Because the threads are ordered from highest to lowest priority, that is the order in which they will be awakened. Usually they will then be scheduled in the order in which they were released. When threads end up being run concurrently, the adaptive spinning (described later in this section) usually ensures that they will not block. And because they are released from highest to lowest priority, the highest priority thread will usually be the first to acquire the lock. There will be no need for, and hence no overhead from, priority propagation. Rather, the lock will be handed down from the highest priority threads through the intermediate priorities to the lowest priority.

To avoid having excessive numbers of threads awakened, kernel programmers try to use locks and wait channels with fine-enough granularity that unrelated uses will not collide on the same resource. For example, they put locks on each buffer in the buffer cache rather than putting a single lock on the buffer cache as a whole.

Resuming a thread takes the following steps in its operation:

- Removes the thread from its turnstile or sleepqueue. If it is the last thread to be awakened, the turnstile or sleepqueue is returned to it. If it is not the last thread to be awakened, a turnstile or sleepqueue from the free list is returned to it.

- Recomputes the user-mode scheduling priority if the thread has been sleeping longer than one second.

- If the thread had been blocked on a turnstile, it is placed on the run queue. If the thread had been blocked on a sleepqueue, it is placed on the run queue if it is in a SLEEPING state and if its process is not swapped out of main memory. If the process has been swapped out, the swapin process will be awakened to load it back into memory (see Section 6.12); if the thread is in a STOPPED state, it is not put on a run queue until it is explicitly restarted by a user-level process, either by a ptrace system call (see Section 4.9) or by a continue signal (see Section 4.7).

If any threads are placed on the run queue and one of them has a scheduling priority higher than that of the currently executing thread, the resume operation also requests that the CPU be rescheduled as soon as possible.

Synchronization

The FreeBSD kernel supports both symmetric multiprocessing (SMP) and nonuniform memory access (NUMA) architectures. An SMP architecture is one in which all the CPUs are connected to a common main memory while a NUMA architecture is one in which the CPUs are connected to a non-uniform memory. With a NUMA architecture, some memory is local to a CPU and is quickly accessible while other memory is slower to access because it is local to another CPU or shared between CPUs. Throughout this book, references to multiprocessors and multiprocessing refer to both SMP and NUMA architectures.

A multiprocessing kernel requires extensive and fine-grained synchronization. The simplest form of synchronization is a critical section. While a thread is running in a critical section, it can neither be migrated to another CPU nor preempted by another thread. A critical section protects per-CPU data structures such as a run queue or CPU-specific memory-allocation data structures. A critical section controls only a single CPU, so it cannot protect systemwide data structures; one of the locking mechanisms described below must be used. While critical sections are useful for only a limited set of data structures, they are beneficial in those cases because they have significantly lower overhead than locks. A critical section begins by calling critical_enter() and continues until calling the function critical_exit().

Table 4.3 shows the hierarchy of locking that is necessary to support multiprocessing. The column labelled Sleep in Table 4.3 shows whether a lock of that type may be held when a thread blocks for a medium- or long-term sleep.

Table 4.3 Locking hierarchy.

| Level | Type | Sleep | Description |
|---|---|---|---|
| Highest | witness | yes | partially ordered sleep locks |
| | lock manager | yes | drainable shared/exclusive access |
| | condition variables | yes | event-based thread blocking |
| | shared-exclusive lock | yes | shared and exclusive access |
| | read-mostly lock | no | optimized for read access |
| | reader-writer lock | no | shared and exclusive access |
| | sleep mutex | no | spin for a while, then sleep |
| | spin mutex | no | spin lock |
| Lowest | hardware | no | memory-interlocked compare-and-swap |

Although it is possible to build locks using single-memory operations [Dekker, 2013], to be practical, the hardware must provide a memory interlocked compare-and-swap instruction. The compare-and-swap instruction must allow two operations to be done on a main-memory location—the reading and comparing to a specified compare-value of the existing value followed by the writing of a new value if the read value matches the compare-value—without any other processor being able to read or write that memory location between the two memory operations. All the locking primitives in the FreeBSD system are built using the compare-and-swap instruction.

Mutex Synchronization

Mutexes are the primary method of short-term thread synchronization. The major design considerations for mutexes are as follows:

- Acquiring and releasing uncontested mutexes should be as fast as possible.

- Mutexes must have the information and storage space to support priority propagation. In FreeBSD, mutexes use turnstiles to manage priority propagation.

- A thread must be able to acquire a mutex recursively if the mutex is initialized to support recursion.

Mutexes are built from the hardware compare-and-swap instruction. A memory location is reserved for the lock. When the lock is free, the value of MTX_UNOWNED is stored in the memory location; when the lock is held, a pointer to the thread owning the lock is stored in the memory location. The compare-and-swap instruction tries to acquire the lock. The value in the lock is compared with MTX_UNOWNED; if it matches, it is replaced with the pointer to the thread. The instruction returns the old value; if the old value was MTX_UNOWNED, then the lock was successfully acquired and the thread may proceed. Otherwise, some other thread held the lock so the thread must loop doing the compare-and-swap until the thread holding the lock (and running on a different processor) stores MTX_UNOWNED into the lock to show that it is done with it.
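
The acquire loop can be sketched with C11 atomics in user space. This is an illustrative model rather than the kernel's mutex code: the toy_mtx type and its functions are hypothetical, the numeric value chosen for MTX_UNOWNED is an assumption, and a small integer thread identifier stands in for the thread pointer.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdint.h>

/* Assumed sentinel for a free lock; the kernel's actual value may differ. */
#define MTX_UNOWNED ((uintptr_t)0)

struct toy_mtx {
	_Atomic uintptr_t owner;	/* MTX_UNOWNED or the owner's id */
};

/* One compare-and-swap attempt; returns 1 if 'self' now owns the lock. */
static int
toy_mtx_try(struct toy_mtx *m, uintptr_t self)
{
	uintptr_t old = MTX_UNOWNED;

	assert(self != MTX_UNOWNED);
	/* The swap happens only if the word still holds MTX_UNOWNED. */
	return atomic_compare_exchange_strong(&m->owner, &old, self);
}

/* Loop on the compare-and-swap until the holder frees the lock. */
static void
toy_mtx_spin_lock(struct toy_mtx *m, uintptr_t self)
{
	while (!toy_mtx_try(m, self))
		;	/* another thread holds the lock; retry */
}

/* The owner stores MTX_UNOWNED to show that it is done with the lock. */
static void
toy_mtx_unlock(struct toy_mtx *m, uintptr_t self)
{
	assert(atomic_load(&m->owner) == self);
	atomic_store(&m->owner, MTX_UNOWNED);
}
```

A failed attempt leaves the lock word untouched, so a waiter can retry as often as it likes without disturbing the current owner.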

There are currently two flavors of mutexes: those that block and those that do not. By default, threads will block when they request a mutex that is already held. Most kernel code uses the default lock type that allows the thread to be suspended from the CPU if it cannot get the lock.

Mutexes that do not sleep are called spin mutexes. A spin mutex will not relinquish the CPU when it cannot immediately get the requested lock, but it will loop, waiting for the mutex to be released by another CPU. Spinning can result in deadlock if a thread interrupted the thread that held a mutex and then tried to acquire the mutex. To protect an interrupt thread from blocking against itself during the period that it is held, a spin mutex runs inside a critical section with interrupts disabled on that CPU. Thus, an interrupt thread can run only on another CPU during the period that the spin mutex is held.

Spin mutexes are specialized locks that are intended to be held for short periods of time. A thread may hold multiple spin mutexes, but it is required to release the mutexes in the opposite order from which they were acquired. A thread may not go to sleep while holding a spin mutex.

On most architectures, both acquiring and releasing an uncontested spin mutex are more expensive than the same operation on a nonspin mutex. Spin mutexes are more expensive than blocking locks because spin mutexes have to disable or defer interrupts while they are held to prevent races with interrupt handling code. As a result, holding spin mutexes can increase interrupt latency. To minimize interrupt latency and reduce locking overhead, FreeBSD uses spin mutexes only in code that does low-level scheduling and context switching.

The time to acquire a lock can vary. Consider the time to wait for a lock needed to search for an item on a list. The thread holding the search lock may have to acquire another lock before it can remove an item it has found from the list. If the needed lock is already held, it will block to wait for it. A different thread that tries to acquire the search lock uses adaptive spinning. Adaptive spinning is implemented by having the thread that wants the lock extract the thread pointer of the owning thread from the lock structure. It then checks to see if the thread is currently executing. If so, it spins until either the lock is released or the thread stops executing. The effect is to spin so long as the current lock holder is executing on another CPU. The reasons for taking this approach are many:

- Locks are usually held for brief periods of time, so if the owner is running, then it will probably release the lock before the current thread completes the process of blocking on the lock.

- If a lock holder is not running, then the current thread has to wait at least one context switch time before it can acquire the lock.

- If the owner is on a run queue, then the current thread should block immediately so it can lend its priority to the lock owner.

- It is cheaper to release an uncontested lock with a single atomic operation than a contested lock. A contested lock has to find the turnstile, lock the turnstile chain and turnstile, and then awaken all the waiters. So adaptive spinning reduces overhead on both the lock owner and the thread trying to acquire the lock.
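
The decision that a waiting thread makes on each pass of adaptive spinning can be written as a small function. This is only a sketch: the toy_thread record, its running flag, and the adaptive_choice() name are hypothetical, and the caller is assumed to have already extracted the owner pointer from the lock.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, simplified view of a thread for this sketch. */
struct toy_thread {
	bool running;	/* true while executing on some CPU */
};

enum wait_action { ACQUIRE, SPIN, BLOCK };

/*
 * Decide what 'self' should do given the owner pointer it read from
 * the lock (NULL means that the lock has been released).
 */
static enum wait_action
adaptive_choice(const struct toy_thread *self, const struct toy_thread *owner)
{
	assert(self != owner);	/* a thread does not wait on itself here */
	if (owner == NULL)
		return ACQUIRE;	/* lock is free: retry the acquire */
	if (owner->running)
		return SPIN;	/* owner is on a CPU: release is likely soon */
	return BLOCK;		/* owner is off CPU: block, lend priority */
}
```

A waiter would call this repeatedly, spinning while it returns SPIN and falling back to the turnstile path as soon as it returns BLOCK.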

The lower cost for releasing an uncontested lock explains the algorithm used to awaken waiters on a mutex. Historically, the mutex code would only awaken a single waiter when a contested lock was released, which left the lock in a contested state if there were more than one waiter. However, leaving a contested lock ensured that the new lock holder would have to perform a more expensive unlock operation. Indeed, all but the last waiter would have an expensive unlock operation. In the current FreeBSD system, all the waiters are awakened when the lock is released. Usually they end up being scheduled sequentially, which results in them all getting to do cheaper unlock operations. If they do all end up running concurrently, they will then use adaptive spinning and will finish the chain of lock requests sooner since the context switches to awaken the threads are performed in parallel rather than sequentially. This change in behavior was motivated by documentation of these effects noted in Solaris Internals [McDougall & Mauro, 2006].

It is wasteful of CPU cycles to use spin mutexes for resources that will be held for long periods of time (more than a few microseconds). For example, a spin mutex would be inappropriate for a disk buffer that would need to be locked throughout the time that a disk I/O was being done. Here, a sleep lock should be used. When a thread trying to acquire a medium- or long-term lock finds that the lock is held, it is put to sleep so that other threads can run until the lock becomes available.

Spin mutexes are never appropriate on a uniprocessor since the only way that a resource held by another thread will ever be released will be when that thread gets to run. Spin mutexes are always converted to sleep locks when running on a uniprocessor. As with the multiprocessor, interrupts are disabled while the spin mutexes are held. Since there is no other processor on which the interrupts can run, interrupt latency becomes much more apparent on a uniprocessor.

Mutex Interface

The mtx_init() function must be used to initialize a mutex before it can be used. The mtx_init() function specifies a type that the witness code uses to classify a mutex when doing checks of lock ordering. It is not permissible to pass the same mutex to mtx_init() multiple times without intervening calls to mtx_destroy().

The mtx_lock() function acquires a mutual exclusion lock for the currently running kernel thread. If another kernel thread is holding the mutex, the caller will sleep until the mutex is available. The mtx_lock_spin() function is similar to the mtx_lock() function except that it will spin until the mutex becomes available. A critical section is entered when the spin mutex is obtained and is exited when the spin mutex is released. Interrupts are blocked on the CPU on which the thread holding the spin mutex is running. No other threads, including interrupt threads, can run on the CPU during the period that the spin mutex is held.

It is possible for the same thread to acquire a mutex recursively with no ill effects if the MTX_RECURSE bit was passed to mtx_init() during the initialization of the mutex. The witness module verifies that a thread does not recurse on a non-recursive lock. A recursive lock is useful if a resource may be locked at two or more levels in the kernel. By allowing a recursive lock, a lower layer need not check if the resource has already been locked by a higher layer; it can simply lock and release the resource as needed.

The mtx_trylock() function tries to acquire a mutual exclusion lock for the currently running kernel thread. If the mutex cannot be immediately acquired, mtx_trylock() will return 0; otherwise the mutex will be acquired and a nonzero value will be returned. The mtx_trylock() function cannot be used with spin mutexes.

The mtx_unlock() function releases a mutual exclusion lock; if a higher-priority thread is waiting for the mutex, the releasing thread will be put to sleep to allow the higher-priority thread to acquire the mutex and run. A mutex that allows recursive locking maintains a reference count showing the number of times that it has been locked. Each successful lock request must have a corresponding unlock request. The mutex is not released until the final unlock has been done, causing the reference count to drop to zero.
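
The reference counting for recursive acquisition can be modeled as follows. This is single-threaded bookkeeping only, written as a sketch: the toy_rmtx type and its functions are hypothetical, the can_recurse field stands in for the MTX_RECURSE option, and none of the atomic operations, blocking, or priority handling of the real mutex are shown.

```c
#include <assert.h>
#include <stdint.h>

struct toy_rmtx {
	uintptr_t owner;	/* 0 when free, else the holder's id */
	unsigned count;		/* times the holder has locked it */
	int can_recurse;	/* stands in for the MTX_RECURSE option */
};

/* Returns 1 if 'self' holds the lock afterwards, 0 if another owns it. */
static int
toy_rmtx_lock(struct toy_rmtx *m, uintptr_t self)
{
	assert(self != 0);
	if (m->owner == self) {
		/* Recursing on a non-recursive lock is a programming error. */
		assert(m->can_recurse);
		m->count++;
		return 1;
	}
	if (m->owner != 0)
		return 0;
	m->owner = self;
	m->count = 1;
	return 1;
}

/* Returns 1 only when this unlock actually released the lock. */
static int
toy_rmtx_unlock(struct toy_rmtx *m, uintptr_t self)
{
	assert(m->owner == self && m->count > 0);
	if (--m->count > 0)
		return 0;	/* still held by an outer layer */
	m->owner = 0;
	return 1;
}
```

A lower layer can therefore lock and unlock freely; the lock becomes available to other threads only when the outermost holder issues the final unlock.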

The mtx_unlock_spin() function releases a spin-type mutual exclusion lock; the critical section entered before acquiring the mutex is exited.

The mtx_destroy() function destroys a mutex so the data associated with it may be freed or otherwise overwritten. Any mutex that is destroyed must previously have been initialized with mtx_init(). It is permissible to have a single reference to a mutex when it is destroyed. It is not permissible to hold the mutex recursively or have another thread blocked on the mutex when it is destroyed. If these rules are violated, the kernel will panic.

Normally, a mutex is allocated within the structure that it will protect. For long-lived structures or structures that are allocated from a zone (structures in a zone are created once and used many times before they are destroyed), the time overhead of initializing and destroying it is insignificant. For a short-lived structure that is not allocated out of a zone, the cost of initializing and destroying an embedded mutex may exceed the time during which the structure is used. In addition, mutexes are large and may double or triple the size of a small short-lived structure (a mutex is often the size of a cache line, which is typically 128 bytes). To avoid this overhead, the kernel provides a pool of mutexes that may be borrowed for use with a short-lived structure. The short-lived structure does not need to reserve space for a mutex, just space for a pointer to a pool mutex. When the structure is allocated, it requests a pool mutex to which it sets its pointer. When it is done, the pool mutex is returned to the kernel and the structure freed. An example of a use of a pool mutex comes from the poll system call implementation that needs a structure to track a poll request from the time the system call is entered until the requested data arrives on the descriptor.

Lock Synchronization

Interprocess synchronization to a resource typically is implemented by associating it with a lock structure. The kernel has several lock managers that manipulate a lock. The operations provided by all the lock managers are:

- Request shared: Get one of many possible shared locks. If a thread holding an exclusive lock requests a shared lock, some lock managers will downgrade the exclusive lock to a shared lock while others simply return an error.

- Request exclusive: When all shared locks have cleared, grant an exclusive lock. To ensure that the exclusive lock will be granted quickly, some lock managers stop granting shared locks when an exclusive lock is requested. Others grant new shared locks only for recursive lock requests. Only one exclusive lock may exist at a time, except that a thread holding an exclusive lock may get additional exclusive locks if the canrecurse flag was set when the lock was initialized. Some lock managers allow the canrecurse flag to be specified in the lock request.

- Request release: Release one instance of a lock.

In addition to these basic requests, some of the lock managers provide the following additional functions:

- Request upgrade: The thread must hold a shared lock that it wants to have upgraded to an exclusive lock. Other threads may get exclusive access to the resource between the time that the upgrade is requested and the time that it is granted. Some lock managers allow only a limited form of upgrade that is granted only if it is immediately available; they provide no mechanism to wait for an upgrade.

- Request exclusive upgrade: The thread must hold a shared lock that it wants to have upgraded to an exclusive lock. If the request succeeds, no other threads will have received exclusive access to the resource between the time that the upgrade is requested and the time that it is granted. However, if another thread has already requested an upgrade, the request will fail.

- Request downgrade: The thread must hold an exclusive lock that it wants to have downgraded to a shared lock. If the thread holds multiple (recursive) exclusive locks, some lock managers will downgrade them all to shared locks; other lock managers will fail the request.

- Request drain: Wait for all activity on the lock to end, and then mark it decommissioned. This feature is used before freeing a lock that is part of a piece of memory that is about to be released.
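The request semantics listed above can be made concrete with a small single-threaded state model. It is a sketch under stated assumptions and does not reproduce any FreeBSD lock manager: the structure layout and the `model_*` names are hypothetical, a request that would have to wait returns `EBUSY` instead of sleeping, a forbidden recursive request returns `EDEADLK` where a kernel would panic, and the model does not record which threads hold shared locks.

```c
#include <assert.h>
#include <errno.h>
#include <stdbool.h>

/* Hypothetical lock state; not any real lock manager's layout. */
struct model_lock {
	int shared;		/* number of shared holders */
	int excl_depth;		/* recursion depth of the exclusive holder */
	int owner;		/* thread id of the exclusive holder, or -1 */
	bool canrecurse;	/* fixed when the lock is initialized */
};

void
model_init(struct model_lock *lk, bool canrecurse)
{
	lk->shared = 0;
	lk->excl_depth = 0;
	lk->owner = -1;
	lk->canrecurse = canrecurse;
}

/* Request shared: granted unless an exclusive lock is held. */
int
model_shared(struct model_lock *lk)
{
	if (lk->excl_depth > 0)
		return (EBUSY);		/* a real lock manager would sleep */
	lk->shared++;
	return (0);
}

/* Request exclusive: granted once all shared locks have cleared. */
int
model_exclusive(struct model_lock *lk, int tid)
{
	if (lk->shared > 0)
		return (EBUSY);
	if (lk->excl_depth > 0) {
		if (lk->owner != tid)
			return (EBUSY);
		if (!lk->canrecurse)
			return (EDEADLK);	/* "locking against myself" */
	}
	lk->owner = tid;
	lk->excl_depth++;
	return (0);
}

/* Request release: drop one instance of the lock. */
int
model_release(struct model_lock *lk, int tid)
{
	if (lk->excl_depth > 0) {
		if (lk->owner != tid)
			return (EPERM);
		if (--lk->excl_depth == 0)
			lk->owner = -1;
		return (0);
	}
	if (lk->shared == 0)
		return (EPERM);
	lk->shared--;
	return (0);
}

/* Limited upgrade: granted only if the caller is the sole shared holder. */
int
model_try_upgrade(struct model_lock *lk, int tid)
{
	if (lk->shared != 1 || lk->excl_depth > 0)
		return (EBUSY);
	lk->shared = 0;
	lk->owner = tid;
	lk->excl_depth = 1;
	return (0);
}

/* Downgrade: this model fails the request if the lock is held recursively. */
int
model_downgrade(struct model_lock *lk, int tid)
{
	if (lk->owner != tid || lk->excl_depth != 1)
		return (EINVAL);
	lk->owner = -1;
	lk->excl_depth = 0;
	lk->shared = 1;
	return (0);
}
```

With two shared holders, `model_try_upgrade()` returns `EBUSY`; after one of them releases, the same call succeeds. The model picks one of the behaviors the text allows for each operation (for example, failing rather than downgrading all recursive exclusive locks), and a different lock manager could reasonably choose the other.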

Locks must be initialized before their first use by calling their initialization function. Parameters to the initialization function may include the following:

- A top-half kernel priority at which the thread should run if it was blocked before it acquired the lock

- Flags such as canrecurse that allow the thread currently holding an exclusive lock to get another exclusive lock rather than panicking with a “locking against myself” failure

- A string that describes the resource that the lock protects, referred to as the wait channel message

- An optional maximum time to wait for the lock to become available

Not all types of locks support all these options. When a lock is no longer needed, it must be released.

As shown in Table 4.3, the lowest-level type of lock is the reader-writer lock. The reader-writer lock operates much like a mutex except that a reader-writer lock supports both shared and exclusive access. Like a mutex, it is managed by a turnstile so it cannot be held during a medium- or long-term sleep and provides priority propagation for exclusive (but not shared) locks. Reader-writer locks may be recursed.

Next up in Table 4.3 is the read-mostly lock. Read-mostly locks have the same capabilities and restrictions as reader-writer locks, and they also add priority propagation for shared locks by tracking shared owners using a caller-supplied tracker data structure. Read-mostly locks are used to protect data that are read far more often than they are written. They work by trying the read without acquiring a lock, assuming that the read will succeed, and fall back to using locks only when that assumption fails. Reads usually happen more quickly, but the cost is higher when the underlying resource is modified. The routing table is a good example of a read-mostly data structure: routes are rarely updated but are read frequently.

The remaining types of locks all permit medium- and long-term sleeping. None of these locks support priority propagation. The shared-exclusive locks are the fastest of these locks with the fewest features. In addition to the basic shared and exclusive access, they provide recursion for both shared and exclusive locks, the ability to be interrupted by a signal, and limited upgrade and downgrade capabilities.

The lock-manager locks are the most full featured but also the slowest of the locking schemes. In addition to the features of the shared-exclusive locks, they provide full upgrade and downgrade capabilities, the ability to be awakened after a specified interval, the ability to drain all users in preparation for being deallocated, and the ability to pass ownership of locks between threads and to the kernel.

Condition variables are used with mutexes to wait for conditions to occur. Threads wait on condition variables by calling cv_wait(), cv_wait_sig() (wait unless interrupted by a signal), cv_timedwait() (wait for a maximum time), or cv_timedwait_sig() (wait unless interrupted by a signal or for a maximum time). Threads unblock waiters by calling cv_signal() to unblock one waiter, or cv_broadcast() to unblock all waiters. The cv_waitq_remove() function removes a waiting thread from a condition-variable wait queue if it is on one.

A thread must hold a mutex before calling cv_wait(), cv_wait_sig(), cv_timedwait(), or cv_timedwait_sig(). When a thread waits on a condition, the mutex is atomically released before the thread is blocked, and then atomically reacquired before the function call returns. All waiters must use the same mutex with a condition variable. A thread must hold the mutex while calling cv_signal() or cv_broadcast().
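The wait-and-signal discipline described above has a close user-space analogue in POSIX threads, which is used here instead of the kernel's cv_wait() family so that the sketch can be compiled outside the kernel. The one-slot `struct mailbox` and its functions are hypothetical names introduced for illustration. The waiter holds the mutex, rechecks its condition in a loop after every wakeup, and the mutex is released while the thread is blocked and reacquired before the wait returns.

```c
#include <assert.h>
#include <pthread.h>

/* Hypothetical one-slot mailbox guarded by a mutex and a condition variable. */
struct mailbox {
	pthread_mutex_t mtx;
	pthread_cond_t cv;
	int full;	/* the condition that waiters test */
	int value;
};

void
mailbox_init(struct mailbox *mb)
{
	assert(mb != NULL);
	pthread_mutex_init(&mb->mtx, NULL);
	pthread_cond_init(&mb->cv, NULL);
	mb->full = 0;
	mb->value = 0;
}

/* Store a value, waiting until the slot is empty. */
void
mailbox_put(struct mailbox *mb, int value)
{
	pthread_mutex_lock(&mb->mtx);
	while (mb->full)	/* recheck the condition after every wakeup */
		pthread_cond_wait(&mb->cv, &mb->mtx);
	mb->value = value;
	mb->full = 1;
	/* Wake all waiters while still holding the mutex. */
	pthread_cond_broadcast(&mb->cv);
	pthread_mutex_unlock(&mb->mtx);
}

/* Fetch the value, waiting until the slot is full. */
int
mailbox_get(struct mailbox *mb)
{
	int value;

	pthread_mutex_lock(&mb->mtx);
	while (!mb->full)
		pthread_cond_wait(&mb->cv, &mb->mtx);
	value = mb->value;
	mb->full = 0;
	pthread_cond_broadcast(&mb->cv);
	pthread_mutex_unlock(&mb->mtx);
	return (value);
}
```

Because producers and consumers wait on the same condition variable in this sketch, a broadcast is used so that a wakeup cannot be consumed only by a thread whose condition is still false. Every waiter uses the same mutex with the condition variable, matching the rule stated above.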

Deadlock Prevention

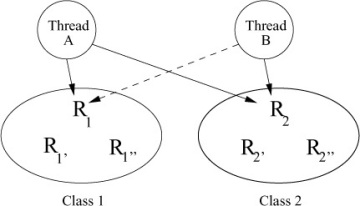

The highest-level locking primitive prevents threads from deadlocking when locking multiple resources. Suppose that two threads, A and B, require exclusive access to two resources, R1 and R2, to do some operation as shown in Figure 4.4. If thread A acquires R1 and thread B acquires R2, then a deadlock occurs when thread A tries to acquire R2 and thread B tries to acquire R1. To avoid deadlock, FreeBSD maintains a partial ordering on all the locks. The two partial-ordering rules are as follows:

Figure 4.4 Partial ordering of resources.

- A thread may acquire only one lock in each class.

- A thread may acquire only a lock in a higher-numbered class than the highest-numbered class for which it already holds a lock.

Figure 4.4 shows two classes: class 1 with resources R1, R1′, and R1″, and class 2 with resources R2, R2′, and R2″. In Figure 4.4, thread A holds R1 and can request R2 because R1 and R2 are in different classes and R2 is in a higher-numbered class than R1. However, thread B must release R2 before requesting R1, since R2 is in a higher-numbered class than R1. Thus, thread A will be able to acquire R2 when it is released by thread B. After thread A completes and releases R1 and R2, thread B will be able to acquire both of those locks and run to completion without deadlock.
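The two rules reduce to one comparison: a request is legal only if its class number is strictly greater than the highest class the thread already holds. The following sketch checks that rule for the scenario in Figure 4.4. The `struct thread_locks` bookkeeping and the function names are hypothetical, and class numbers are assumed to start at 1.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_HELD 8	/* hypothetical limit on locks held by one thread */

/* Classes of the locks a thread holds, kept in ascending order. */
struct thread_locks {
	int held[MAX_HELD];
	int nheld;
};

/*
 * Grant the request only if the class is higher than every class already
 * held. A strict comparison enforces both rules: one lock per class, and
 * only ever a higher-numbered class.
 */
bool
class_acquire(struct thread_locks *t, int lock_class)
{
	int highest = (t->nheld > 0) ? t->held[t->nheld - 1] : 0;

	if (lock_class <= highest || t->nheld == MAX_HELD)
		return (false);
	t->held[t->nheld++] = lock_class;
	return (true);
}

/* Drop the lock of the given class, keeping the array in order. */
void
class_release(struct thread_locks *t, int lock_class)
{
	for (int i = 0; i < t->nheld; i++) {
		if (t->held[i] != lock_class)
			continue;
		for (int j = i; j < t->nheld - 1; j++)
			t->held[j] = t->held[j + 1];
		t->nheld--;
		return;
	}
}
```

In this model, thread A holding class 1 may acquire class 2, whereas thread B holding class 2 is refused class 1 until it releases class 2 and starts again from class 1.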

Historically, the class members and ordering were poorly documented and unenforced. Violations were discovered when threads would deadlock and a careful analysis was done to figure out what ordering had been violated. With an increasing number of developers and a growing kernel, the ad hoc method of maintaining the partial ordering of locks became untenable. A witness module was added to the kernel to derive and enforce the partial ordering of the locks. The witness module keeps track of the locks acquired and released by each thread. It also keeps track of the order in which locks are acquired relative to each other. Each time a lock is acquired, the witness module uses these two lists to verify that a lock is not being acquired in the wrong order. If a lock order violation is detected, then a message is output to the console detailing the locks involved and the locations in the code in which they were acquired. The witness module also verifies that no locks that prohibit sleeping are held when requesting a sleep lock or voluntarily going to sleep.
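The idea of deriving the order from observed acquisitions, rather than from a hand-maintained list, can be sketched as follows. This is a simplified model and not the witness module's code: locks are small integer ids, the `before` relation, `struct wthread`, and the function names are assumptions, and a violation is reported through the return value and the acquisition refused, whereas the text describes a console message.

```c
#include <assert.h>
#include <stdbool.h>

#define NLOCKS 8	/* hypothetical number of lock ids */

/* before[a][b]: lock a has been observed (transitively) acquired before b. */
static bool before[NLOCKS][NLOCKS];

/* Per-thread record of the locks currently held. */
struct wthread {
	bool held[NLOCKS];
};

/* Record "a before b" and keep the relation transitively closed. */
static void
order_add(int a, int b)
{
	for (int i = 0; i < NLOCKS; i++) {
		if (i != a && !before[i][a])
			continue;
		/* i is a or precedes a, so i precedes b and b's successors. */
		for (int j = 0; j < NLOCKS; j++)
			if (j == b || before[b][j])
				before[i][j] = true;
	}
}

/*
 * Check an acquisition against the recorded order. Returns false on a
 * lock order reversal (or an attempt to reacquire a held lock); otherwise
 * records the newly observed order and marks the lock held.
 */
bool
witness_acquire(struct wthread *t, int lock)
{
	assert(lock >= 0 && lock < NLOCKS);
	for (int h = 0; h < NLOCKS; h++)
		if (t->held[h] && (h == lock || before[lock][h]))
			return (false);
	for (int h = 0; h < NLOCKS; h++)
		if (t->held[h])
			order_add(h, lock);
	t->held[lock] = true;
	return (true);
}

void
witness_release(struct wthread *t, int lock)
{
	t->held[lock] = false;
}
```

Once one thread has taken lock 1 and then lock 2, any thread that holds lock 2 and asks for lock 1 is flagged, even if no deadlock happens on that particular run. Keeping the relation transitively closed also catches indirect reversals, such as acquiring lock 1 while holding lock 3 after the orders 1 before 2 and 2 before 3 have been seen.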

The witness module can be configured to either panic or drop into the kernel debugger when an order violation occurs or some other witness check fails. When running the debugger, the witness module can output the list of locks held by the current thread to the console along with the filename and line number at which each lock was last acquired. It can also dump the current order list to the console. The code first displays the lock order tree for all the sleep locks. Then it displays the lock order tree for all the spin mutexes. Finally, it displays a list of locks that have not yet been acquired.