- "Do I Know This Already?" Quiz

- Foundation Topics

- Exam Preparation Tasks

- Answer Review Questions

Foundation Topics

Objective 3.1—Manage vSphere Storage Virtualization

This section provides details on how to set up and use storage area network (SAN) and network-attached storage (NAS) in a virtualized environment. vSphere storage virtualization combines APIs and features built into vSphere that abstract the physical layer of storage into storage space that virtual machines can use and that you can manage. A virtual machine (VM) uses one or more virtual disks to store the files it needs to run an operating system and its applications. The files that make up a virtual machine are detailed in Chapter 16, “Virtual Machines.” One of those files is the virtual disk, which contains the VM’s data.

This chapter concentrates on SAN- and NAS-based storage. A SAN is a block-based storage network that makes data available over Ethernet or Fibre Channel, using the Fibre Channel, FCoE, or iSCSI protocol. A NAS, on the other hand, is a file-based storage system that makes data available over an Ethernet network, using the Network File System (NFS) protocol. SAN and NAS devices are both shared storage that connects to an ESXi Host through a network or storage adapter. Now let’s discuss how to configure and manage these shared storage protocols.

Storage Protocols

Storage is a resource that vSphere uses to store virtual machines. Four main protocols can be used within vSphere: Fibre Channel (FC), Fibre Channel over Ethernet (FCoE), Internet Small Computer System Interface (iSCSI), and Network File System (NFS). These protocols can be used to connect the ESXi Host with a storage device.

Identify Storage Adapters and Devices

A storage adapter connects the ESXi Host to a SAN or NAS storage device. Adapters differ in how much intelligence is built into the card. Some have firmware or integrated circuitry that offloads work from the host and can improve performance between the ESXi Host and the storage device. Whichever protocol you use, the ESXi Host communicates with the storage device through a SCSI device driver, and each VM accesses its virtual disks through a virtual SCSI controller. One factor in choosing a storage adapter is the protocol used between the ESXi Host and the storage device. Before discussing each protocol, this chapter shows how to find the storage adapters and devices in vSphere using the vSphere Web Client. It then describes each protocol and identifies the important characteristics to consider when using it.

Display Storage Adapters for a Host

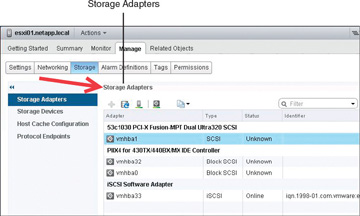

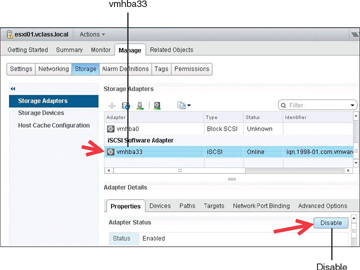

To display all of a host’s storage adapters in the vSphere Web Client, highlight the ESXi Host in the Hosts and Clusters inventory. Then click the Manage tab, click Storage, and select Storage Adapters. Figure 4-1 shows four adapters. The last one listed, vmhba33, is a software-based iSCSI initiator.

FIGURE 4-1 Storage Adapters

Storage Devices for an Adapter

Figure 4-1 shows the adapters that connect the ESXi Host to storage. A host can access multiple storage devices, and each of them can contain VMs. One way to tell storage devices apart is by logical unit number (LUN). A LUN is the identifier of a device addressed by the SCSI protocol or by protocols that carry SCSI, such as Fibre Channel and iSCSI. A LUN can refer to any device that supports read/write operations, such as a tape drive. However, the term is most often used for a disk drive, and in practice the two terms are often used synonymously. Tapes, CD-ROMs, and even scanners and printers can be connected to a SCSI bus and may therefore appear as LUNs.

A VMware VMDK (Virtual Machine Disk) becomes a LUN when it is mapped to a VM through the virtual SCSI adapter. LUN is a term that is frequently used incorrectly. A SAN is structured the same way as a traditional SCSI bus, except that the old ribbon cable has been replaced with a network. This is why LUN masking is needed. SCSI is a master/slave (initiator/target) architecture, and the SCSI protocol has no authentication mechanism to dictate which hosts can access a particular LUN. LUN masking therefore “masks” (hides) each LUN from all hosts except the ones it is configured for.

To display the storage devices for a storage adapter on an ESXi Host, highlight the ESXi Host in the Hosts and Clusters inventory. Then click the Manage tab, click Storage, and select Storage Adapters. Select the storage adapter, and then select Devices in the Adapter Details section to see the storage devices that can be accessed through that adapter. In Figure 4-2, the iSCSI software adapter is selected and displays three iSCSI LUN devices.

FIGURE 4-2 Available iSCSI LUNs

Fibre Channel Protocol

Fibre Channel (FC) is a transport that can be used to transmit data on a SAN. Many devices on a SAN communicate with each other using the Fibre Channel Protocol (FCP), including switches, hubs, initiator HBAs, and storage adapters. FC has been around since the late 1980s, so it is a well-understood technology, and it has been popular because of its excellent performance. Fibre Channel replaced parallel Small Computer System Interface (SCSI) cabling as the primary means of host-to-storage communication. To preserve compatibility, the FC frame encapsulates the SCSI protocol; in other words, FC carries SCSI commands across its network.

The terms initiator and target were originally SCSI terms. FCP connects the actual initiator and target hardware. That hardware must log in to the fabric and be properly zoned (similar in concept to VLANs) so that the ESXi Host can discover the storage. The host operating system communicates with the disk drives in a SAN using SCSI commands. FC can use either copper or optical cabling. Over optical cable, the SCSI protocol is serialized (the bits are sent one at a time rather than in parallel) and transmitted as light pulses. This removes the distance limitations of short parallel SCSI cables. Think of Fibre Channel as a SAN highway on which other protocols, such as SCSI and IP, can drive.

Fibre Channel over Ethernet Protocol

Fibre Channel over Ethernet (FCoE) is a protocol that carries Fibre Channel (FC) frames over an Ethernet network running at 10 Gbps or faster. Each FC frame is encapsulated in an Ethernet frame with a one-to-one mapping. ESXi servers connect to an FC SAN fabric using host bus adapters (HBAs). Connectivity to FCoE fabrics is provided by converged network adapters (CNAs). Each HBA or CNA can act as either an initiator (ESXi Host) or a target (storage array). Each adapter has a globally unique address called a World Wide Name (WWN). Each WWN must be known in order to configure LUN access on the storage array (a NetApp array, for example).

iSCSI Protocol

The Internet Small Computer System Interface (iSCSI) protocol provides access to storage devices over Ethernet-based TCP/IP networks by carrying SCSI commands over IP. Routers and switches can extend the IP storage network to the wide area network, or even across the Internet using tunneling protocols. The iSCSI protocol establishes communication sessions between initiators (clients) and targets (servers). Initiators are devices that request that commands be executed; targets are devices that carry out those commands. The structure used to communicate a command from an application client to a device server is called a Command Descriptor Block (CDB). The basic functions of the SCSI driver are to build SCSI CDBs from requests issued by the application and to forward them to the iSCSI layer.
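The following sketch makes the idea of a CDB concrete. It packs a standard SCSI READ(10) command, which asks the device for a number of blocks starting at a logical block address. READ(10) is just one example CDB, chosen for illustration. The sketch shows the kind of structure the SCSI driver hands to the iSCSI layer; it is not ESXi's actual code.

```python
import struct

READ_10 = 0x28  # SCSI operation code for READ(10)


def build_read10_cdb(lba: int, blocks: int) -> bytes:
    """Build a 10-byte SCSI READ(10) Command Descriptor Block.

    Layout: opcode, flags byte, 4-byte big-endian logical block address,
    group number, 2-byte big-endian transfer length (in blocks), control byte.
    """
    if not 0 <= lba < 2**32:
        raise ValueError("READ(10) can only address 32-bit LBAs")
    if not 0 <= blocks < 2**16:
        raise ValueError("READ(10) transfer length is a 16-bit field")
    return struct.pack(">BBIBHB", READ_10, 0, lba, 0, blocks, 0)


cdb = build_read10_cdb(lba=2048, blocks=8)
print(cdb.hex())  # 28000000080000000800
```

Larger disks need the 16-byte READ(16) variant, because READ(10) cannot address blocks beyond 2^32.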

NFS Protocol

The Network File System (NFS) service lets you share files over a network between a storage device and an ESXi Host. The files are centrally located on the NFS server, and NFS clients access them using a client/server architecture. The NFS server is the storage array, and it provides not only the data but also the file system. The NFS client is the ESXi Host. The client code has been built into the VMkernel since 2002, running NFS version 3, and until vSphere 6.0 NFSv3 was the only version of NFS that VMware supported. New in vSphere 6.0, VMware reworked the client code to support both NFSv3 and NFSv4.1. NFSv4.1 adds features that improve interactions between the storage and the ESXi Host.

NFSv4.1 with Kerberos Authentication

NFSv4.1 supports both Kerberos and non-root user authentication. With NFSv3, remote files are accessed with root permissions, and the server must be configured with the no_root_squash option to allow root access to files. This mechanism is known as AUTH_SYS.

Native Multipathing and Session Trunking

Associating multiple IP addresses with a single NFS mount for redundancy is known as session trunking, or multipathing. To use it for load balancing or multipathing, enter the server’s IP addresses in the Server field as a comma-separated list.
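To illustrate the comma-separated format, here is a hypothetical helper that splits a Server field value into individual addresses. It assumes the field contains IP addresses only. The real field may also accept host names, which this sketch rejects. The helper is not part of any vSphere tool.

```python
import ipaddress


def parse_server_list(field: str) -> list[str]:
    """Split a comma-separated Server field into validated IP addresses."""
    addresses = []
    for item in field.split(","):
        item = item.strip()
        if not item:
            continue  # tolerate stray or trailing commas
        # ip_address() raises ValueError for anything that is not an IPv4/IPv6 address
        addresses.append(str(ipaddress.ip_address(item)))
    if not addresses:
        raise ValueError("at least one server address is required")
    return addresses


print(parse_server_list("192.168.10.21, 192.168.10.22"))
```

Each address in the resulting list is one path to the same NFSv4.1 share. That is what lets the mount survive the loss of a single server interface.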

In-band, Mandatory, and Stateful Server-Side File Locking

In NFSv3, VMware does not use the standard lockd daemon, because the standard server lock daemon conflicts with how VMware high availability works. Instead, NFSv3 datastores use VMware’s own client-side locking mechanism for file locking. NFSv4.1 builds locking into the protocol itself. With NFSv4.1, VMware therefore relies on the protocol’s in-band, mandatory, server-side file locking rather than on a separate lock daemon.

Identify Storage Naming Conventions

In the previous sections, you learned that various protocols can be presented to vSphere. To create a virtual machine, you need a place to store its files, which means you need a datastore. To create a datastore, you ask the storage administrator to provision either a LUN or an NFS share. The naming convention depends on the protocol you use. For local drives, iSCSI, or Fibre Channel, device names begin with vmhba; for NFS, the name includes the NFS export name. Each LUN or storage device can be identified by several names.

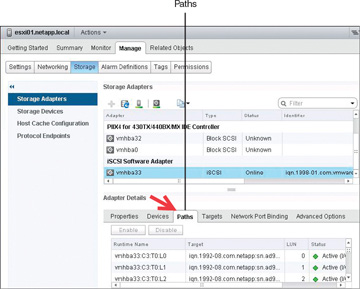

To display the storage paths in the vSphere Web Client, highlight the ESXi Host in the Hosts and Clusters inventory. Then click the Manage tab, click Storage, and select Storage Adapters. Select the storage adapter, and then select Paths in the Adapter Details section. In Figure 4-3, the iSCSI software adapter is selected and shows the paths to three iSCSI LUN devices, described by the following fields:

FIGURE 4-3 Displaying iSCSI LUN Paths

Runtime name: vmhbaAdapter:CChannel:TTarget:LLUN

vmhbaAdapter is the name of the storage adapter. The name refers to the physical adapter on the host, not to the SCSI controller used by the virtual machine. In the prefix vmhba, vm refers to the VMkernel and hba to the host bus adapter.

CChannel is the storage channel number. If the adapter has multiple connections, the first channel is channel 0. Software iSCSI adapters and dependent hardware adapters use the channel number to show multiple paths to the same target.

TTarget is the target number. The target number might change if the mappings of targets visible to the host change or if the ESXi Host reboots.

LLUN is the LUN number, which shows the position of the LUN within the target. If a target has only one LUN, the LUN number is always 0.

For example, vmhba2:C0:T2:L3 would be LUN 3 on target 2, accessed through the storage adapter vmhba2 and channel 0.

Target: The target name can appear in different formats depending on the device, for example as a Network Address Authority (NAA) ID. In Figure 4-3, the target is a software-based iSCSI device, so its name uses the iqn scheme.

LUN number: This is the LUN for the device.
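The runtime name packs four fields into one string, so a small parser makes the structure explicit. This sketch assumes the exact vmhbaN:C#:T#:L# layout described above. RuntimeName and parse_runtime_name are hypothetical names used only for illustration.

```python
import re
from typing import NamedTuple


class RuntimeName(NamedTuple):
    adapter: str  # physical storage adapter on the host, e.g. vmhba2
    channel: int  # storage channel; the first channel is 0
    target: int   # target number (may change after a reboot or remapping)
    lun: int      # position of the LUN within the target


_RUNTIME_NAME = re.compile(r"^(vmhba\d+):C(\d+):T(\d+):L(\d+)$")


def parse_runtime_name(name: str) -> RuntimeName:
    match = _RUNTIME_NAME.match(name)
    if match is None:
        raise ValueError(f"not a runtime name: {name!r}")
    adapter, channel, target, lun = match.groups()
    return RuntimeName(adapter, int(channel), int(target), int(lun))


print(parse_runtime_name("vmhba2:C0:T2:L3"))
# RuntimeName(adapter='vmhba2', channel=0, target=2, lun=3)
```

The target number can change after a reboot or a remapping. A runtime name is therefore handy for reading paths but is not a stable identifier for a device.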

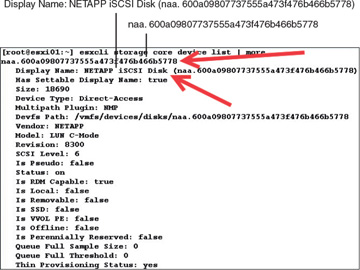

To display all device names using the CLI, type in the following command (see Figure 4-4):

# esxcli storage core device list

FIGURE 4-4 Use the CLI to Display Storage Device Information

Identify Hardware/Dependent Hardware/Software iSCSI Initiator Requirements

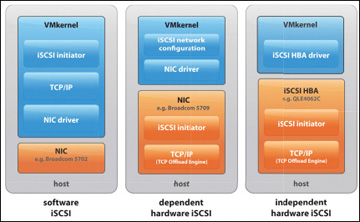

The ESXi Host uses the iSCSI protocol to access LUNs on the storage system and acts as the initiator over a standard Ethernet interface. The host needs a network adapter to send and receive the iSCSI packets over TCP/IP. Figure 4-5 shows the three available iSCSI initiator options:

Software iSCSI initiator: A software iSCSI initiator runs in the VMkernel and uses a standard 1 GbE or 10 GbE NIC. There is nothing special about the network interface. The VMkernel on the ESXi Host is responsible for discovery: it issues a Send Targets request to the storage array. The initiator then establishes an iSCSI session with the target over TCP port 3260.

Dependent hardware iSCSI initiator: A dependent hardware iSCSI initiator is a network interface card with some built-in intelligence. Whether that intelligence is a chip or firmware, the card can “speak” iSCSI, which means iSCSI processing is offloaded to the card. However, not everything is done on the NIC. You must configure networking for the iSCSI traffic and bind the adapter to an appropriate VMkernel iSCSI port.

Independent hardware iSCSI initiator: An independent hardware iSCSI initiator handles all network and iSCSI processing and management for the ESXi Host. For example, QLogic makes adapters that provide LUN discovery as well as a TCP offload engine (TOE). The ESXi Host does not need a VMkernel port for this type of card. This type of card is more expensive than the other types, but it also delivers better performance.

FIGURE 4-5 iSCSI Initiators

Discover New Storage LUNs

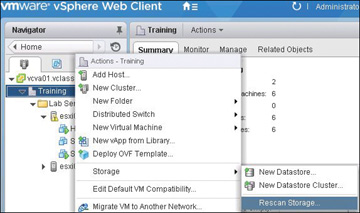

When an ESXi Host boots, it scans (sends a signal down its bus paths) to discover all the LUNs connected to the host. The host then stops scanning for new LUNs. I like to think of the host as being on siesta, taking a break from scanning. While the host is on siesta, any new LUNs that the storage administrator presents to it will not be seen. You need to wake the host up by selecting Rescan Storage, as shown in Figure 4-6.

FIGURE 4-6 Rescan Storage

The Rescan Storage dialog box offers two check boxes:
- Scan for New Storage Devices rescans all host bus adapters for new LUNs.
- Scan for New VMFS Volumes rescans all known storage devices for VMFS volumes added since the last scan.

Select the options you want and click OK.

Configure FC/iSCSI/FCoE LUNs as ESXi Boot Devices

You can set up an ESXi Host to boot from a SAN LUN instead of from a local hard disk. vSphere supports boot from SAN over FC, iSCSI, and FCoE. The host’s boot image is stored on a LUN that is used exclusively by that ESXi Host, so each host must have unique access to its own boot LUN. You also need to enable the boot adapter in the host BIOS.

FC

In order for a Fibre Channel (FC) device to boot from a SAN, the BIOS of the FC adapter must be configured with the World Wide Name (WWN) and LUN of the boot device. In addition, the system BIOS must designate the FC adapter as a boot controller.

iSCSI

It is possible to boot an ESXi Host using an independent hardware iSCSI, dependent hardware iSCSI, or software iSCSI initiator. If your ESXi Host uses an independent hardware iSCSI initiator, you need to configure the adapter to boot from the SAN. How you configure the adapter varies, depending on the vendor of the adapter.

If you are using a dependent hardware iSCSI or software iSCSI initiator, you must have an iSCSI boot-capable network adapter that supports the iSCSI Boot Firmware Table (iBFT) format. iBFT is a table, exposed through the Advanced Configuration and Power Interface (ACPI), that passes the parameters of the iSCSI boot device from the firmware to the operating system. It is needed because the ESXi Host must load enough information from the firmware to discover the iSCSI boot LUN over the network.

FCoE

You can boot an ESXi Host from a Fibre Channel over Ethernet (FCoE) network adapter. The FCoE initiator must support the FCoE Boot Firmware Table (FBFT) or FCoE Boot Parameter Table (FBPT). During the ESXi boot process, the parameters to find the boot LUN over the network are loaded into the system memory.

Create an NFS Share for Use with vSphere

The Network File System (NFS) is a client/server service that allows users to view, store, and modify files on a remote system as though they were on their own local computer. NFS allows systems of different architectures running different operating systems to access and share files across a network. The ESXi Host is the NFS client, and a storage array, typically from a vendor such as EMC or NetApp, acts as the NFS server. The NFS server shares the files, and the ESXi Host accesses the shared files over the network. How you create an NFS server and set up NFS shares depends on the system used as the NFS server.

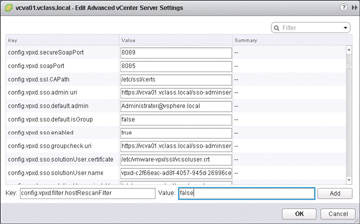

Enable/Configure/Disable vCenter Server Storage Filters

vCenter Server provides storage filters that keep it from presenting storage that should not be used, such as devices that would cause performance problems or that are unsupported. vSphere provides four storage filters that affect how vCenter Server behaves when scanning storage. Without these filters, vCenter Server could present all the storage it finds during a scan to vSphere, even storage that is already in use. The filters prevent this. However, certain use cases require changing which storage devices are found during scanning. By default, all storage filters are enabled (set to true) and are designed to prevent specific datastore problems. Except in certain situations, it is best to leave them enabled. Table 4-2 lists the vCenter Server storage filters and their Advanced Setting keys:

Table 4-2 vCenter Server Storage Filters

| Filter | Advanced Setting Key |
|---|---|
| RDM | config.vpxd.filter.rdmFilter |
| VMFS | config.vpxd.filter.vmfsFilter |
| Host Rescan | config.vpxd.filter.hostRescanFilter |
| Same Host and Transports | config.vpxd.filter.SameHostAndTransportsFilter |

RDM filter: Filters out LUNs that have already been claimed by an RDM on any ESXi Host managed by vCenter Server. Disabling this filter is useful in situations such as clustering with Microsoft Cluster Server (MSCS). When the filter is set to false, it is disabled, allowing a LUN to be added as an RDM even though another VM already uses it as an RDM. To set up a SCSI-3 quorum disk for MSCS, this filter must be disabled.

VMFS filter: Filters out LUNs that have been claimed and VMFS formatted on any ESXi Host managed by vCenter Server. As a result, VMFS-formatted LUNs do not appear in the vSphere Client’s Add Storage wizard. If the setting is switched to false, the vSphere Client shows such a LUN as available, and any ESXi Host could attempt to format and claim it.

Host Rescan filter: By default, when a VMFS volume is created, an automatic rescan occurs on all hosts managed by the vCenter Server. If the setting is switched to false, creating a VMFS datastore on one host no longer triggers an automatic rescan on the other hosts.

Same Host and Transports filter: Filters out LUNs that cannot be used as VMFS datastore extents because of host or storage incompatibility. If the setting is switched to false, an incompatible LUN could be added as an extent to an existing volume. For example, a LUN that is not seen by all of the hosts is incompatible as an extent.

For example, suppose you are going to run a PowerCLI cmdlet that adds 100 datastores. You could set the Host Rescan filter to false to stop the automatic rescan that each new datastore would otherwise trigger. After all 100 datastores are added, you run a single rescan to discover all of the new storage.

Figure 4-7 shows the vCenter Server Advanced Settings screen, where storage filter keys are added. To change a filter, start at Home in the vSphere Web Client navigator and select Hosts and Clusters. Then highlight your vCenter Server, click the Manage tab, and click Settings. Click Advanced Settings and then Edit to open the Edit Advanced vCenter Server Settings window. At the bottom of the window, type the storage filter key (here, config.vpxd.filter.hostRescanFilter) in the Key box, enter false in the Value box to disable the filter, and add the setting, as shown in Figure 4-7.

FIGURE 4-7 Enable a Storage Filter
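To make the default-on behavior concrete, the sketch below models how the four keys from Table 4-2 combine into an effective filter state. It assumes that a filter counts as disabled only when its key is present with the value false, and that an absent key means the default (enabled). The settings dictionary and the helper function are hypothetical; vCenter Server evaluates these keys itself.

```python
# Advanced Setting keys from Table 4-2.
FILTER_KEYS = {
    "RDM": "config.vpxd.filter.rdmFilter",
    "VMFS": "config.vpxd.filter.vmfsFilter",
    "Host Rescan": "config.vpxd.filter.hostRescanFilter",
    "Same Host and Transports": "config.vpxd.filter.SameHostAndTransportsFilter",
}


def effective_filter_state(advanced_settings: dict[str, str]) -> dict[str, bool]:
    """Map each filter name to True (enabled) or False (disabled).

    Assumption: an absent key means the default (enabled), and only the
    value "false" (compared case-insensitively here) disables a filter.
    """
    state = {}
    for name, key in FILTER_KEYS.items():
        value = advanced_settings.get(key, "true")
        state[name] = value.strip().lower() != "false"
    return state


settings = {"config.vpxd.filter.hostRescanFilter": "false"}
print(effective_filter_state(settings))
```

In the example, only the Host Rescan filter is off. That matches the bulk-datastore scenario above, where you re-enable the filter afterward by setting the key back to true.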

iSCSI

vSphere supports several methods of booting from an iSCSI LUN. To configure an independent hardware iSCSI adapter, such as a QLogic iSCSI HBA, you first boot the host from the VMware installation media. You then open the HBA’s iSCSI configuration menu, which lets you configure the host adapter settings.

In addition to the independent hardware adapter, the ESXi Host can boot from a software or dependent hardware iSCSI adapter. In that case, the network adapter must support the iSCSI Boot Firmware Table (iBFT) format to boot an ESXi Host from an iSCSI SAN. The iBFT communicates parameters about the iSCSI boot device to the operating system.

Configure/Edit Hardware/Dependent Hardware Initiators

An independent hardware initiator handles its own networking and is configured through its own adapter settings, without a VMkernel port. A dependent hardware initiator is a network adapter with firmware or a chip built into it that speaks iSCSI. It handles standard networking as well as iSCSI offload. After you install a dependent hardware initiator, it appears in the list of storage adapters. However, you must associate a VMkernel port with the adapter before you can configure its iSCSI settings.

Enable/Disable Software iSCSI Initiator

A software iSCSI initiator uses a standard 1 GbE or 10 GbE network adapter. Any supported adapter will do; nothing on the physical card is designed with iSCSI in mind. The VMkernel handles discovery as well as the encapsulation and de-encapsulation of the iSCSI packets. You can activate only one software iSCSI adapter per ESXi Host.

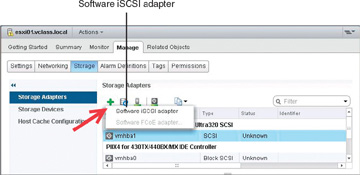

Enable a Software iSCSI Initiator

To enable the software iSCSI initiator, browse to the host in the vSphere Web Client. Click the Manage tab and then click Storage. Click Storage Adapters and then click Add. As shown in Figure 4-8, select Software iSCSI Adapter and confirm that you want to add it to your ESXi Host.

FIGURE 4-8 Enable a Software iSCSI Initiator

Disable a Software iSCSI Initiator

The steps to disable the software iSCSI initiator begin with browsing to the host using the vSphere Web Client. Click the Manage tab and then click Storage. Click Storage Adapters and then highlight the iSCSI software adapter that you want to disable. Underneath Storage Adapters is the Adapter Details section; click Properties and click the Disable button. Disabling the adapter marks it for removal, but the adapter is not removed until the next host reboot. Figure 4-9 shows the iSCSI initiator being disabled. After you reboot the host, the iSCSI adapter no longer appears in the list of storage adapters.

FIGURE 4-9 Disable a Software iSCSI Initiator

Configure/Edit Software iSCSI Initiator Settings

There are several different settings you can make on an iSCSI initiator. The settings can be configured in the Adapter Details section. One option is to enable and disable paths for the iSCSI adapter. Another option when you are editing an iSCSI initiator is to use dynamic or static discovery. With dynamic discovery, the iSCSI initiator sends a Send Targets request to the storage array, which returns a list of available targets to the initiator. The other option is to use static discovery, where you manually add targets. Also, you can set up CHAP authentication, which is discussed in an upcoming section of this chapter.
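Dynamic discovery returns targets as text key=value pairs. In the iSCSI specification (RFC 7143), each pair is terminated by a NUL byte, and each TargetName is followed by its TargetAddress entries. The sketch below parses such a response into the target list that dynamic discovery would populate. The IQN and addresses are made up, and parse_send_targets is a hypothetical helper. With static discovery, you would enter this same information by hand.

```python
def parse_send_targets(payload: bytes) -> dict[str, list[str]]:
    """Group each TargetAddress under the TargetName that precedes it."""
    targets: dict[str, list[str]] = {}
    current = None
    for pair in payload.split(b"\x00"):
        if not pair:
            continue  # the final NUL terminator leaves an empty piece
        key, _, value = pair.decode("utf-8").partition("=")
        if key == "TargetName":
            current = value
            targets.setdefault(current, [])
        elif key == "TargetAddress" and current is not None:
            targets[current].append(value)  # address:port,portal-group-tag
    return targets


response = (
    b"TargetName=iqn.2001-05.com.example:storage.lun1\x00"
    b"TargetAddress=192.168.10.21:3260,1\x00"
    b"TargetAddress=192.168.10.22:3260,2\x00"
)
print(parse_send_targets(response))
```

A single target can be reachable through several portals, which is why each name maps to a list of addresses.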

Determine Use Case for Hardware/Dependent Hardware/Software iSCSI Initiator

A good reason to use a software iSCSI initiator is that you do not need to purchase a specialized network adapter; a standard 1 GbE or 10 GbE network adapter is enough. Another reason is that, along with the dependent hardware initiator, it supports bidirectional CHAP, which the independent hardware initiator does not.

The benefit of a dependent hardware iSCSI initiator is that iSCSI processing is split between the network adapter and the CPU. Offloading part of the processing to the adapter speeds up handling of iSCSI packets and reduces CPU overhead.

You use an independent hardware iSCSI initiator when performance is most important. This adapter handles all its own networking, iSCSI processing, and management of the interface.

Configure iSCSI Port Binding

In most implementations, iSCSI storage is connected to the ESXi Hosts and used to host virtual machines. The ESXi Host is considered the initiator because it requests the storage, and the iSCSI storage device is considered the target because it delivers the storage. The ESXi Host’s initiator can be software or hardware based. The software iSCSI initiator is built in to the VMkernel and uses a standard 1 GbE or 10 GbE network adapter for storage transport. If the host has multiple network adapters, iSCSI port binding lets you specify which network interfaces iSCSI can use.

To use the software iSCSI initiator, a VMkernel port must be configured on the same network as the storage device. This is required because iSCSI port binding works by associating a VMkernel port with a specific iSCSI adapter. Without port binding, the initiator uses the first network adapter port it finds that can see the storage device, and it uses that port exclusively for transport. Simply having multiple adapter ports is therefore not enough to balance storage workloads. You achieve load balancing by configuring multiple VMkernel ports and binding each to its own adapter port.

After you load the software iSCSI kernel module, the next step is to bind the iSCSI adapter to a VMkernel port. Port binding and multipathing both require a one-to-one relationship between each VMkernel port and a network adapter. Only one network adapter port can be active for a VMkernel adapter, so you must set all other ports to Unused.

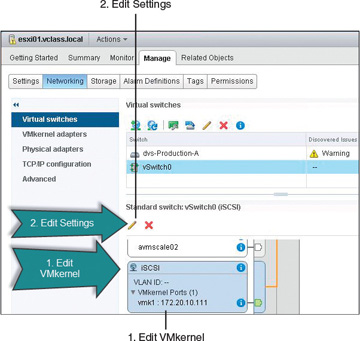

From the Home page, go to Hosts and Clusters. Select the ESXi Host in the inventory, click the Manage tab, and click the Networking tab. Choose the virtual switch that you want to use to bind the VMkernel port to a physical adapter. Next, choose the VMkernel adapter by highlighting the network label as shown in Figure 4-10 (step 1), which turns the VMkernel adapter blue. Then click the Edit Settings icon, shown as step 2 in Figure 4-10, which opens the Edit Settings window.

FIGURE 4-10 Select the VMkernel Port to Bind

The final step is to bind the VMkernel port to a single network adapter by overriding the switch failover order, so that one port is active and all other ports are unused. In the Edit Settings window for the iSCSI VMkernel adapter, click Teaming and Failover and select the Override check box for the failover order. Then highlight every vmnic except the one that will remain active, move them to Unused adapters using the blue down arrow, and click OK.
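The binding rules above can be expressed as a quick consistency check:

- each VMkernel port has exactly one active vmnic,
- all of its other vmnics are Unused,
- VMkernel ports and vmnics pair one-to-one.

This is a minimal sketch over a hypothetical in-memory model. The VmkTeaming class and binding_problems function are illustrative names, not a vSphere API.

```python
from dataclasses import dataclass, field


@dataclass
class VmkTeaming:
    """Teaming and failover order of one iSCSI VMkernel port (hypothetical model)."""
    vmk: str
    active: list[str]
    standby: list[str] = field(default_factory=list)
    unused: list[str] = field(default_factory=list)


def binding_problems(ports: list[VmkTeaming]) -> list[str]:
    """Return human-readable violations of the port-binding rules."""
    problems = []
    owner: dict[str, str] = {}  # vmnic -> VMkernel port that has it active
    for port in ports:
        if len(port.active) != 1:
            problems.append(f"{port.vmk}: needs exactly one active vmnic, has {len(port.active)}")
        if port.standby:
            problems.append(f"{port.vmk}: standby vmnics must be moved to Unused")
        for nic in port.active:
            if nic in owner:  # breaks the one-to-one VMkernel port/vmnic pairing
                problems.append(f"{port.vmk}: {nic} is already active for {owner[nic]}")
            else:
                owner[nic] = port.vmk
    return problems


good = [
    VmkTeaming("vmk1", active=["vmnic1"], unused=["vmnic2"]),
    VmkTeaming("vmk2", active=["vmnic2"], unused=["vmnic1"]),
]
bad = [
    VmkTeaming("vmk1", active=["vmnic1"], unused=["vmnic2"]),
    VmkTeaming("vmk2", active=["vmnic1", "vmnic2"]),
]
print(binding_problems(good))  # []
print(binding_problems(bad))
```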

Enable/Configure/Disable iSCSI CHAP

There is an optional security feature you can enable for iSCSI, called Challenge Handshake Authentication Protocol (CHAP). CHAP is a method for authenticating the ESXi Host and a storage device using password authentication. The authentication can be either one-way (unidirectional CHAP) or two-way (bidirectional, or mutual, CHAP):

Unidirectional: Also called one-way CHAP. The storage array (target) authenticates the ESXi Host (initiator), but the ESXi Host does not authenticate the storage device. The ESXi Host proves its identity by returning a response computed from the shared CHAP secret and the target’s challenge. The secret itself is never sent over the network.

Bidirectional: Also called mutual CHAP. Authentication is done both ways, and the secret is different in the two directions. vSphere supports this method for software and dependent hardware iSCSI adapters only.

CHAP is a three-way handshake used to authenticate the ESXi Host, and bidirectional CHAP also authenticates the storage array. The CHAP secret is nothing more than a password. For both software iSCSI and dependent hardware iSCSI initiators, ESXi also supports per-target CHAP authentication.
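The secret never has to cross the wire, as this minimal sketch of the CHAP calculation defined in RFC 1994 shows. It assumes the MD5 algorithm, which iSCSI CHAP implementations are required to support. The secret, identifier, and challenge values are hypothetical, and the code models the exchange, not vSphere's implementation.

```python
import hashlib
import hmac
import os


def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """RFC 1994 CHAP with MD5: hash of the identifier octet, the secret, and the challenge."""
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


secret = b"example-chap-secret"  # hypothetical shared secret known to both sides

# Target side: send an identifier and a random challenge.
identifier, challenge = 7, os.urandom(16)

# Initiator side: reply with the hash, never with the secret itself.
response = chap_response(identifier, secret, challenge)

# Target side: recompute the expected value and compare in constant time.
print(hmac.compare_digest(response, chap_response(identifier, secret, challenge)))  # True
```

With bidirectional CHAP, the same exchange also runs in the other direction. The initiator sends its own challenge, and the target answers using a different secret.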

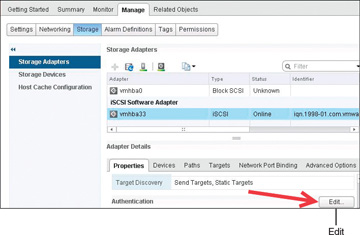

To enable and configure iSCSI CHAP on an ESXi Host, begin at Home, select Hosts and Clusters, and select the host on which you want to configure CHAP. Then select Manage, click Storage, and click Storage Adapters. Highlight the iSCSI Software Adapter. In the Adapter Details section, select the Properties tab and click the Edit button for authentication to set up CHAP, as shown in Figure 4-11.

FIGURE 4-11 iSCSI CHAP’s Edit Button

The CHAP authentication method needs to match the storage array’s CHAP implementation and is vendor specific. You need to consult the storage array’s documentation to help determine which CHAP security level is supported. When you set up the CHAP parameters, you need to specify which security level for CHAP should be utilized between the ESXi Host and the storage array. The different CHAP security levels, descriptions, and their corresponding supported adapters are listed in Table 4-3.

Table 4-3 CHAP Security Levels

| CHAP Security Level | Description | Supported |
|---|---|---|
| None | CHAP authentication is not used. Select this option to disable authentication if it is currently enabled. | Software iSCSI, dependent hardware iSCSI, independent hardware iSCSI |
| Use Unidirectional CHAP if Required by Target | Host prefers a non-CHAP connection but can use CHAP if required by the target. | Software iSCSI, dependent hardware iSCSI |
| Use Unidirectional CHAP Unless Prohibited by Target | Host prefers CHAP but can use a non-CHAP connection if the target does not support CHAP. | Software iSCSI, dependent hardware iSCSI, independent hardware iSCSI |
| Use Unidirectional CHAP | Host requires CHAP authentication. The connection fails if CHAP negotiation fails. | Software iSCSI, dependent hardware iSCSI, independent hardware iSCSI |
| Use Bidirectional CHAP | Host and target both support bidirectional CHAP. | Software iSCSI, dependent hardware iSCSI |
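As a quick cross-check of Table 4-3, the Supported column can be encoded as a lookup. The adapter-type labels below ("software", "dependent", "independent") are shorthand invented for this sketch, not vSphere identifiers.

```python
# Supported column of Table 4-3: security level -> adapter types that offer it.
CHAP_SUPPORT = {
    "None": {"software", "dependent", "independent"},
    "Use Unidirectional CHAP if Required by Target": {"software", "dependent"},
    "Use Unidirectional CHAP Unless Prohibited by Target": {"software", "dependent", "independent"},
    "Use Unidirectional CHAP": {"software", "dependent", "independent"},
    "Use Bidirectional CHAP": {"software", "dependent"},
}


def levels_for(adapter: str) -> list[str]:
    """List the CHAP security levels available for an adapter type, in table order."""
    return [level for level, adapters in CHAP_SUPPORT.items() if adapter in adapters]


print(levels_for("independent"))
```

The output shows that an independent hardware adapter never offers bidirectional CHAP.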

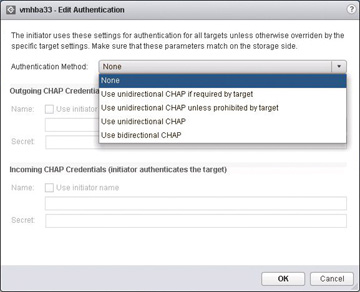

To configure CHAP, select the Properties tab and click the Edit button to open the Edit Authentication window, shown in Figure 4-12, where you set up CHAP authentication. Keep in mind that CHAP does not encrypt anything; the traffic between the initiator and target is sent in the clear. The CHAP name should not exceed 511 alphanumeric characters, and the CHAP secret should not exceed 255 alphanumeric characters. Some hardware-based iSCSI initiators have lower limits. For example, the QLogic adapter limits the CHAP name to 255 alphanumeric characters and the CHAP secret to 100 alphanumeric characters.

FIGURE 4-12 CHAP Setup Options

Determine Use Cases for Fibre Channel Zoning

One goal in storage is to make sure that each host communicates with the correct storage and each storage device communicates with the correct host. This can be challenging because many devices and nodes can be attached to a SAN. FC and FCoE environments use zoning and LUN masking to isolate communication between ESXi Hosts and storage devices. Zoning is configured on the FC or FCoE switches and blocks communication between initiators and targets that are not members of the same zone, so that a host discovers only the devices it should see. There are two kinds of zoning: hard zoning, which uses the physical switch port IDs, and soft zoning, which uses the WWPNs of the initiators and targets. Both hard and soft zoning reduce the number of targets and LUNs presented to the host. LUN masking works at a finer level, controlling which hosts can access which LUNs on a target. The best practice is to configure LUN masking on the storage array, where the storage administrator specifies which hosts have access to each LUN. If masking must be done on the ESXi Host, it is configured from the command line (using claim rules with the MASK_PATH plug-in), not in the vSphere Client.
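The interaction between zoning and masking can be modeled with plain sets: a host sees a LUN only if the switch zones it together with the target port and the array then unmasks that LUN for the host. This is a toy model of soft (WWPN-based) zoning with single-initiator zones; all WWPNs, zone names, and LUN IDs are made up, and the functions `zoned_targets` and `visible_luns` are hypothetical.

```python
# Hypothetical fabric: every WWPN and zone name here is invented for illustration.
ARRAY_PORT = "50:06:01:60:00:00:00:0a"
ESX01 = "10:00:00:00:c9:00:00:01"
ESX02 = "10:00:00:00:c9:00:00:02"

# Soft zoning: each zone is a set of WWPNs (single-initiator zones).
ZONES = {
    "z_esx01_arrayA": {ESX01, ARRAY_PORT},
    "z_esx02_arrayA": {ESX02, ARRAY_PORT},
}

# Array-side LUN masking: target port -> {initiator WWPN: set of LUN IDs}.
MASKING = {
    ARRAY_PORT: {ESX01: {0, 1}, ESX02: {2}},
}

def zoned_targets(initiator, zones):
    """Every other member of each zone the initiator belongs to.
    With single-initiator zones, these are exactly the targets."""
    targets = set()
    for members in zones.values():
        if initiator in members:
            targets |= members - {initiator}
    return targets

def visible_luns(initiator, zones, masking):
    """A LUN is visible only if the target is zoned AND the array unmasks the LUN."""
    luns = set()
    for target in zoned_targets(initiator, zones):
        luns |= masking.get(target, {}).get(initiator, set())
    return luns

print(sorted(visible_luns(ESX01, ZONES, MASKING)))  # [0, 1]
print(sorted(visible_luns(ESX02, ZONES, MASKING)))  # [2]
```

Removing a host from its zone hides every LUN on that target regardless of masking, while masking alone can hide individual LUNs from a host that is still zoned.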

Compare and Contrast Array and Virtual Disk Thin Provisioning

Thin provisioning presents more storage space to the hosts connected to a storage system than is physically available on that system. For example, say that a storage system has a usable capacity of 500 GB, and the storage administrator presents a 300 GB LUN to each of two hosts. Together the two LUNs total 600 GB, so the array is presenting 100 GB more storage than it physically has. When a thin LUN is created, the storage array does not dedicate specific blocks of the volume to the LUN at provisioning time; instead, it allocates blocks when data is actually written to the LUN. In this way, the connected ESXi Hosts can be provisioned more storage space than physically exists in the storage array.
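The arithmetic in the 500 GB example can be expressed as a small helper. The function name `overcommitment` is hypothetical; it simply compares the total provisioned LUN size with the usable capacity.

```python
def overcommitment(usable_gb, lun_sizes_gb):
    """Return (total provisioned, amount over physical capacity, overcommit ratio)."""
    provisioned = sum(lun_sizes_gb)
    return provisioned, provisioned - usable_gb, provisioned / usable_gb

# Two 300 GB LUNs presented from 500 GB of usable capacity.
provisioned, excess, ratio = overcommitment(500, [300, 300])
print(provisioned, excess, ratio)  # 600 100 1.2
```

A ratio above 1.0 means the array has promised more than it can physically deliver if every host fills its LUN.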

Array Thin Provisioning

ESXi supports thin-provisioned LUNs. Array thin provisioning means that the thin provisioning is done on the storage array, which reports the LUN's logical size rather than the physical capacity actually backing it. The storage array does not assign specific blocks to a thin LUN in advance; instead, it waits until a block is about to be written, zeroes that block, and then performs the write. The array vendor uses its own file system and bookkeeping to track the LUN, filling the LUN's capacity on demand and saving storage space. Because the array promises more space than it has physically set aside for the LUN, you can overallocate storage. Many storage array vendors also provide automatic processes for a volume that is running out of space, such as growing the volume or deleting certain file system constructs like array-based snapshots.
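The allocate-on-write behavior can be illustrated with a toy model in which two thin LUNs share one physical pool. This is a sketch under simplifying assumptions (fixed-size blocks, one-byte "zeroed" blocks, no snapshots or space reclamation) and does not reflect any vendor's real bookkeeping; the class `ThinLun` is hypothetical.

```python
class ThinLun:
    """Toy model of array thin provisioning: physical blocks are allocated on first write."""

    def __init__(self, logical_blocks, pool):
        self.logical_blocks = logical_blocks  # size reported to the host
        self.pool = pool                      # shared list of free physical block IDs
        self.map = {}                         # logical block -> physical block
        self.data = {}                        # physical block -> contents

    def write(self, lba, payload):
        if not 0 <= lba < self.logical_blocks:
            raise IndexError("LBA outside the reported LUN size")
        if lba not in self.map:
            if not self.pool:
                raise RuntimeError("physical pool exhausted (out of space)")
            phys = self.pool.pop()
            self.data[phys] = b"\x00"  # zero the new block first, then write
            self.map[lba] = phys
        self.data[self.map[lba]] = payload

    def read(self, lba):
        phys = self.map.get(lba)
        return b"\x00" if phys is None else self.data[phys]  # unwritten blocks read as zeros

    @property
    def physical_used(self):
        return len(self.map)

# Two LUNs report 3 blocks each (6 total) but share only 5 physical blocks.
pool = list(range(5))
lun_a, lun_b = ThinLun(3, pool), ThinLun(3, pool)
for lba in range(3):
    lun_a.write(lba, b"a")
lun_b.write(0, b"b")
lun_b.write(1, b"b")
print(lun_a.physical_used, lun_b.physical_used, len(pool))  # 3 2 0
```

At this point, any write to a block that `lun_b` has never touched fails even though `lun_b` still reports three blocks of capacity, which is the overallocation risk described above.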

Virtual Disk Thin Provisioning

When a thin virtual disk is created, it does not preallocate capacity, and it does not zero out data blocks on the VMFS file system or the back-end storage. Instead, the virtual disk consumes storage space only when data is written to it. The VMDK's actual space usage starts out small and grows as more writes occur, yet the guest OS always sees the full provisioned disk size; the VMDK hides from the guest OS the fact that it has not actually claimed all of its data blocks. At any point, the virtual disk consumes only the space needed for the data written so far. When writes occur and more space is needed, the VMkernel grows the disk on VMFS 1 MB at a time: when a 1 MB data block fills up, another 1 MB block is allocated to the virtual disk. On NFS, where disks are thin provisioned by default, the disk grows 4 KB at a time, which is the size of its data block. Because a VMFS datastore is shared among hosts, every time a thin disk grows, the VMFS metadata must be updated under a lock. Without VAAI, this lock is obtained with a SCSI reservation on the LUN; on arrays that support VMware vStorage APIs for Array Integration (VAAI), the locking is offloaded to the array with the hardware-assisted Atomic Test & Set (ATS) primitive.
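The effect of the allocation unit can be estimated by counting which blocks a set of guest writes touches. This sketch assumes the disk claims every block that any write lands in, using the 1 MB (VMFS) and 4 KB (NFS) block sizes from the text; it ignores metadata, fragmentation, and other file system details, and `allocated_bytes` is a hypothetical helper.

```python
KB = 1024
MB = 1024 * KB

def allocated_bytes(write_extents, block_size):
    """Space a thin disk claims for (offset, length) writes, in whole blocks.

    Simplified model: every block touched by at least one write is allocated once.
    """
    blocks = set()
    for offset, length in write_extents:
        first = offset // block_size
        last = (offset + length - 1) // block_size
        blocks.update(range(first, last + 1))
    return len(blocks) * block_size

# Two scattered 4 KB writes, 10 MB apart.
extents = [(0, 4 * KB), (10 * MB, 4 * KB)]
print(allocated_bytes(extents, MB) // MB)      # 2  (two 1 MB VMFS blocks)
print(allocated_bytes(extents, 4 * KB) // KB)  # 8  (two 4 KB NFS blocks)
```

Scattered small writes therefore grow a VMFS thin disk much faster than the amount of data actually written, while the finer NFS block tracks the written data more closely.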

To put array-based and virtual disk thin provisioning into perspective, consider what happens when new writes reach a thin virtual disk. The new data block or blocks must be zeroed out, but before that can happen, the thin disk might first have to obtain additional capacity from the datastore. If that datastore sits on a thin-provisioned LUN, the storage array must in turn allocate physical blocks, and whether it can do so depends on the physical space it has left, not on the free space the ESXi Host sees in the datastore. Thin provisioning therefore works at two independent levels, and free space must be tracked at both.
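The two levels can be stacked in a small model: a thin disk grows inside a datastore that sees the LUN's reported size, while the array checks its own physical capacity. The classes `Array` and `Datastore` are hypothetical, sizes are in whole gigabytes, and the model ignores space reclamation and any array-side automatic growth.

```python
class Array:
    """Physical capacity behind a thin LUN."""

    def __init__(self, physical_gb):
        self.physical_gb = physical_gb
        self.used_gb = 0

    def allocate(self, gb):
        if self.used_gb + gb > self.physical_gb:
            raise RuntimeError("array out of physical space")
        self.used_gb += gb

class Datastore:
    """VMFS datastore on a thin LUN: it sees only the LUN's reported (logical) size."""

    def __init__(self, lun_reported_gb, array):
        self.capacity_gb = lun_reported_gb
        self.used_gb = 0
        self.array = array

    @property
    def free_gb(self):
        return self.capacity_gb - self.used_gb

    def grow_thin_disk(self, gb):
        if gb > self.free_gb:
            raise RuntimeError("datastore full")
        self.array.allocate(gb)  # the array must back the new blocks physically
        self.used_gb += gb       # only counted once the array has succeeded

# A LUN that reports 3000 GB but is backed by only 2000 GB of physical disk.
array = Array(physical_gb=2000)
ds = Datastore(lun_reported_gb=3000, array=array)
ds.grow_thin_disk(1900)
try:
    ds.grow_thin_disk(200)
except RuntimeError as err:
    print(f"{err}; datastore still reports {ds.free_gb} GB free")
```

The second growth request passes the datastore's check but fails at the array, which is why datastore free space alone is not a reliable indicator when the LUN is thin provisioned.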

Determine Use Case for and Configure Array Thin Provisioning

The ESXi Host can use storage arrays that present thin-provisioned storage. When a LUN is thin provisioned, the array reports the logical size of the LUN and not its actual physical size, so it can promise more storage than its physical capacity. For example, an array may report that a LUN has 3 TB of space when only 2 TB of physical disk backs it; the VMFS datastore on that LUN sees, and will try to use, the full 3 TB. Thin provisioning is useful in virtual desktop environments where the system disk is shared among a number of users, because you save money by not having to reserve storage for each desktop's system disk. On the server side, thin provisioning can also save money. However, be careful to monitor growth, because running out of space with certain types of applications can be hazardous to your employment.
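Monitoring growth means comparing what the datastore sees with what the array can physically back. The sketch below uses the 3 TB reported / 2 TB physical example with decimal gigabytes for simplicity; the function `thin_lun_report` and the 80% warning threshold are hypothetical choices, not a VMware or vendor default.

```python
def thin_lun_report(reported_gb, physical_gb, written_gb, warn_pct=80.0):
    """Compare datastore-level usage with array-level (physical) usage.

    warn_pct is an illustrative threshold, not a product default.
    """
    datastore_pct = 100.0 * written_gb / reported_gb
    array_pct = 100.0 * written_gb / physical_gb
    return {
        "datastore_used_pct": round(datastore_pct, 1),
        "array_used_pct": round(array_pct, 1),
        "overcommit_ratio": reported_gb / physical_gb,
        "warn": array_pct >= warn_pct,
    }

# 1.7 TB written to a LUN that reports 3 TB but has 2 TB of physical disk.
print(thin_lun_report(reported_gb=3000, physical_gb=2000, written_gb=1700))
```

In this example, the datastore looks barely more than half full while the array is 85% consumed, so the alert has to be driven by the array-level figure.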

Summary

You have now read the chapter covering exam topics on storage. Use the information in the following sections to complete your preparation for Objective 3.1.