1.2 Threats

We can consider potential harm to assets in two ways: First, we can look at what bad things can happen to assets, and second, we can look at who or what can cause or allow those bad things to happen. These two perspectives enable us to determine how to protect assets.

Think for a moment about what makes your computer valuable to you. First, you expect the “personal” aspect of a personal computer to stay personal, meaning you want it to protect your confidentiality. For example, you want your email messages to be communicated only between you and your listed recipients; you don’t want them broadcast to other people. And when you write an essay, you expect that no one can copy it without your permission. Second, you rely heavily on your computer’s integrity. When you write a paper and save it, you trust that the paper will reload exactly as you saved it. Similarly, you expect that the photo a friend passes you on a flash drive will appear the same when you load it into your computer as when you saw it on your friend’s device. Finally, you expect it to be available as a tool whenever you want to use it, whether to send and receive email, search the web, write papers, or perform many other tasks.

These three aspects—confidentiality, integrity, and availability—make your computer valuable to you. But viewed from another perspective, they are three possible ways to make it less valuable, that is, to cause you harm. If someone steals your computer, scrambles data on your disk, or looks at your private data files, the value of your computer has been diminished or your computer use has been harmed. These characteristics are both basic security properties and the objects of security threats.

Taken together, the properties are called the C-I-A triad or the security triad. We can define these three properties formally as follows:

confidentiality: the ability of a system to ensure that an asset is viewed only by authorized parties

integrity: the ability of a system to ensure that an asset is modified only by authorized parties

availability: the ability of a system to ensure that an asset can be used by any authorized parties

These three properties, hallmarks of solid security, appear in the literature as early as James Anderson’s essay on computer security [AND73] and reappear frequently in more recent computer security papers and discussions. Key groups concerned with security, such as the International Organization for Standardization (ISO) and the U.S. Department of Defense, also add properties that they consider desirable. The ISO [ISO89], an independent nongovernmental body composed of standards organizations from 167 nations, adds two properties, particularly important in communication networks:

authentication: the ability of a system to confirm the identity of a sender

nonrepudiation or accountability: the ability of a system to confirm that a sender cannot convincingly deny having sent something

The U.S. Department of Defense [DOD85] adds auditability: the ability of a system to trace all actions related to a given asset.

The C-I-A triad forms a foundation for thinking about security. Authentication and nonrepudiation extend security notions to network communications, and auditability is important in establishing individual accountability for computer activity. In this book we generally use the C-I-A triad as our security taxonomy so that we can frame threats, vulnerabilities, and controls in terms of the C-I-A properties affected. We highlight one of the other properties when it is relevant to a particular threat we are describing. For now, we focus on just the three elements of the triad.

C-I-A triad: confidentiality, integrity, availability

What can happen to harm any of these three properties? Suppose a thief steals your computer. All three properties are harmed. For instance, you no longer have access, so you have lost availability. If the thief looks at the pictures or documents you have stored, your confidentiality is compromised. And if the thief changes the content of your music files but then gives them back with your computer, the integrity of your data has been harmed. You can envision many scenarios based around these three properties.

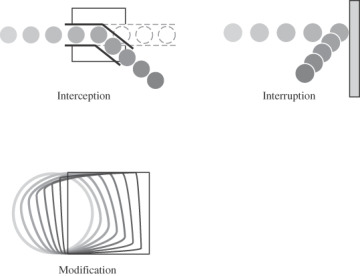

The C-I-A triad can also be viewed from a different perspective: the nature of the harm caused to assets. Harm can also be characterized by three acts: interception, interruption, and modification. These three acts are depicted in Figure 1-5. From this point of view, confidentiality can suffer if someone intercepts data, integrity can fail if someone or something modifies data or even fabricates false data, and availability can be lost if someone or something interrupts a flow of data or access to a computer. Thinking of these kinds of acts can help you determine what threats might exist against the computers you are trying to protect.

FIGURE 1-5 Acts to Cause Security Harm

To analyze harm, we next refine the C-I-A triad, looking more closely at each of its elements.

Confidentiality

Some things obviously need confidentiality protection. For example, students’ grades, financial transactions, medical records, and tax returns are sensitive. A proud student may run out of a classroom shouting “I got an A!” but the student should be the one to choose whether to reveal that grade to others. Other things, such as diplomatic and military secrets, companies’ marketing and product development plans, and educators’ tests, also must be carefully controlled.

Sometimes, however, it is not so obvious that something is sensitive. For example, a military food order may seem like innocuous information, but a sudden increase in the order at a particular location could be a sign of incipient engagement in conflict at that site. Purchases of clothing, hourly changes in location, and access to books are not things you would ordinarily consider confidential, but they can reveal something related that someone wants kept confidential.

The definition of confidentiality is straightforward: Only authorized people or systems can access protected data. However, as we see in later chapters, ensuring confidentiality can be difficult. To see why, consider what happens when you visit the home page of WebsiteX.com. A large amount of data is associated with your visit. You may have started by using a browser to search for “WebsiteX” or “WebsiteX.com.” Or you may have been reading an email or text message with advertising for WebsiteX, so you clicked on the embedded link. Or you may have reached the site from WebsiteX’s app. Once at WebsiteX.com, you read some of the home page’s contents; the time you spend on that page, called the dwell time, is captured by the site’s owner and perhaps the internet service provider (ISP). Of interest too is where you head from the home page: to links within the website, to links to other websites that are embedded in WebsiteX.com (such as payment pages for your credit card provider), or even to unrelated pages when you tire of what WebsiteX has to offer. Each of these actions generates data that are of interest to many parties: your ISP, WebsiteX, search engine companies, advertisers, and more. And the data items, especially when viewed in concert with other data collected at other sites, reveal information about you: what interests you, how much money you spend, and what kind of advertising works best to convince you to buy something. The data may also reveal information about your health (when you search for information about a drug or illness), your network of friends, your movements (as when you buy a rail or airline ticket), or your job.

Thus, each action you take can generate data collected by many parties. Confidentiality addresses much more than determining which people or systems are authorized to access the current system and how the authorization occurs. It also addresses protecting access to all the associated data items. In our example, WebsiteX may be gathering information with or without your knowledge or approval. But can WebsiteX disclose data to other parties? And who or what is responsible when confidentiality is breached by the other parties?

Despite these complications, confidentiality is the security property we understand best because its meaning is narrower than that of the other two. We also understand confidentiality well because we can relate computing examples to those of preserving confidentiality in the real world: for example, keeping employment records or a new invention’s design confidential.

Here are some properties that could mean a failure of data confidentiality:

An unauthorized person accesses a data item.

An unauthorized process or program accesses a data item.

A person authorized to access certain data accesses other data not authorized (which is a specialized version of “an unauthorized person accesses a data item”).

An unauthorized person accesses an approximate data value (for example, not knowing someone’s exact salary but knowing that the salary falls in a particular range or exceeds a particular amount).

An unauthorized person learns the existence of a piece of data (for example, knowing that a company is developing a certain new product or that talks are underway about the merger of two companies).

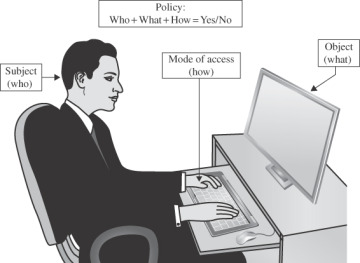

Notice the general pattern of these statements: A person, process, or program is (or is not) authorized to access a data item in a particular way. We call the person, process, or program a subject, the data item an object, the kind of access (such as read, write, or execute) an access mode, and the authorization a policy, as shown in Figure 1-6. These four terms reappear throughout this book because they are fundamental aspects of computer security.

FIGURE 1-6 Access Control
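The pattern of subject, object, access mode, and policy can be sketched in a few lines of Python. This is a minimal illustration, not a real access control mechanism. The names `Policy`, `permit`, and `is_authorized`, along with the sample subjects and the file name, are hypothetical. The sketch assumes the simplest possible policy: a set of permitted (subject, object, mode) triples, with everything else denied.

```python
from enum import Enum


class AccessMode(Enum):
    READ = "read"
    WRITE = "write"
    EXECUTE = "execute"


class Policy:
    """A policy as a set of permitted (subject, object, mode) triples.

    Hypothetical sketch: anything not explicitly permitted is denied.
    """

    def __init__(self):
        self._permitted = set()

    def permit(self, subject, obj, mode):
        self._permitted.add((subject, obj, mode))

    def is_authorized(self, subject, obj, mode):
        return (subject, obj, mode) in self._permitted


policy = Policy()
policy.permit("alice", "grades.txt", AccessMode.READ)
policy.permit("registrar", "grades.txt", AccessMode.READ)
policy.permit("registrar", "grades.txt", AccessMode.WRITE)

# Alice may view the object but may not modify it.
print(policy.is_authorized("alice", "grades.txt", AccessMode.READ))
print(policy.is_authorized("alice", "grades.txt", AccessMode.WRITE))
# A subject the policy never mentions is denied by default.
print(policy.is_authorized("mallory", "grades.txt", AccessMode.READ))
```

Denying by default means that forgetting to list a subject results in a refusal rather than an unintended disclosure.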

One word that captures most aspects of confidentiality is view, although you should not take that term literally. A failure of confidentiality does not necessarily mean that someone sees an object; in fact, it is virtually impossible to look at bits in any meaningful way (although you may look at their representation as characters or pictures). The word “view” does connote another aspect of confidentiality in computer security, through the association with viewing a movie or a painting in a museum: look but do not touch. In computer security, confidentiality usually means obtaining but not modifying. Modification is the subject of integrity, which we consider next.

Integrity

Examples of integrity failures are easy to find. A number of years ago, a malicious macro in a Word document inserted the word “not” after some random instances of the word “is”; you can imagine the havoc that ensued. Because the document remained syntactically correct, readers did not immediately detect the change. In another case, a model of Intel’s Pentium computer chip produced an incorrect result in certain circumstances of floating-point arithmetic. Although the circumstances of failure were exceedingly rare, Intel decided to manufacture and replace the chips. This kind of error occurs frequently in many aspects of our lives. For instance, many of us receive mail that is misaddressed because someone typed something wrong when transcribing from a written list. A worse situation occurs when that inaccuracy is propagated to other mailing lists such that we can never seem to find and correct the root of the problem. Other times we notice that a spreadsheet seems to be wrong, only to find that someone typed “ 123” (with a space before the number) in a cell, changing it from a numeric value to text, so the spreadsheet program misused that cell in computation. The error can occur in a process too: an incorrect formula, a message directed to the wrong recipient, or a circular reference in a program or spreadsheet. These cases show some of the breadth of examples of integrity failures.

Integrity is harder to pin down than confidentiality. As Stephen Welke and Terry Mayfield [WEL90, MAY91, NCS91a] point out, integrity means different things in different contexts. When we survey the way some people use the term, we find several different meanings. For example, if we say we have preserved the integrity of an item, we may mean that the item is

precisely defined

accurate

unmodified

modified only in acceptable ways

modified only by authorized people

modified only by authorized processes

consistent

internally consistent

meaningful and usable

Integrity can also mean two or more of these properties. Welke and Mayfield discuss integrity by recognizing three particular aspects of it: authorized actions, separation and protection of resources, and detection and correction of errors. Integrity can be enforced in much the same way as can confidentiality: by rigorous control of who or what can access which resources in what ways.
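One common way to detect, though not prevent, an unauthorized or accidental modification is to record a cryptographic digest of an item and compare it later. The sketch below uses SHA-256 from Python's standard `hashlib` module. The function names and the sample text are hypothetical. The sketch covers only the detection aspect of integrity, and it assumes that the recorded digest is itself stored where an attacker cannot alter it.

```python
import hashlib


def digest(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_unmodified(data: bytes, recorded_digest: str) -> bool:
    """True if data still matches the digest recorded earlier."""
    return digest(data) == recorded_digest


original = b"The server is configured correctly."
recorded = digest(original)

# As in the macro example, the altered text remains syntactically correct,
# so a casual reader might not notice the change.
tampered = b"The server is not configured correctly."

print(is_unmodified(original, recorded))
print(is_unmodified(tampered, recorded))
```

A digest shows only that something changed. It does not show who changed the item or whether the change was authorized, which is why access control is still needed.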

Availability

A computer user’s worst nightmare: You turn on the switch and the computer does nothing. Your data and programs are presumably still there, but you cannot get to them. Fortunately, few of us experience that failure. Many of us do experience overload, however: access gets slower and slower; the computer responds but not in a way we consider normal or acceptable. Each of these instances illustrates a degradation of availability.

Availability applies to both data and services (that is, to information and to information processing), and, like confidentiality, it is complex. Different people may expect availability to mean different things. For example, an object or service is thought to be available if the following are true:

It is present in a usable form.

It has enough capacity to meet the service’s needs.

It is making clear progress, and, if in wait mode, it has a bounded waiting time.

The service is completed in an acceptable period of time.

We can construct an overall description of availability by combining these goals. Following are some criteria to define availability.

There is a timely response to our request.

Resources are allocated fairly so that some requesters are not favored over others.

Concurrency is controlled; that is, simultaneous access, deadlock management, and exclusive access are supported as required.

The service or system involved follows a philosophy of fault tolerance, whereby hardware or software faults lead to graceful cessation of service or to work-arounds rather than to crashes and abrupt loss of information. (Cessation does mean end; whether it is graceful or not, ultimately the system is unavailable. However, with fair warning of the system’s stopping, the user may be able to move to another system and continue work.)

The service or system can be used easily and in the way it was intended to be used. (This description is an aspect of usability. An unusable system may also cause an availability failure.)
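Two of these criteria, a timely response and a bounded waiting time, lend themselves to a short sketch. The Python below is an illustration under stated assumptions rather than a monitoring tool: `within_deadline`, `fast_service`, `overloaded_service`, and the 0.25-second deadline are all hypothetical, and the slow service is simulated with a sleep. Fairness, concurrency control, and fault tolerance are not addressed.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout


def within_deadline(service, deadline_seconds):
    """Call service() and report whether it answered within the deadline.

    Returns (available, result). The caller waits at most deadline_seconds
    for an answer, so the waiting time is bounded.
    """
    executor = ThreadPoolExecutor(max_workers=1)
    future = executor.submit(service)
    try:
        return True, future.result(timeout=deadline_seconds)
    except FutureTimeout:
        return False, None
    finally:
        # Do not block on a slow worker; let it finish in the background.
        executor.shutdown(wait=False)


def fast_service():
    return "ok"


def overloaded_service():
    time.sleep(1.0)  # simulates a system that responds too slowly
    return "ok"


DEADLINE = 0.25  # seconds; an arbitrary threshold chosen for illustration

print(within_deadline(fast_service, DEADLINE))
print(within_deadline(overloaded_service, DEADLINE))
```

The overloaded service does eventually produce an answer, but from the requester's point of view it was not available when needed. This is the kind of degradation described at the start of this section.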

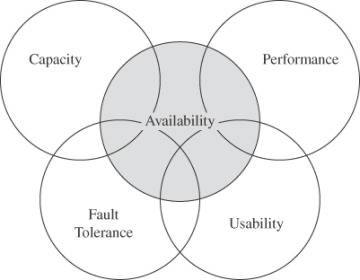

As you can see, expectations of availability are far-reaching. Figure 1-7 depicts some of the properties with which availability overlaps.

FIGURE 1-7 Availability and Related Aspects

So far, we have described a system’s availability. But the notion of availability applies to an individual data item too. A person or system can do three basic things with a data item: view it, modify it, or use it. Thus, viewing (confidentiality), modifying (integrity), and using (availability) are the basic modes of access that computer security seeks to preserve.

Computer security seeks to prevent unauthorized viewing (confidentiality) or modification (integrity) of data while preserving access (availability).

For a given system, we ensure availability by designing one or more policies to guide the way access is permitted to people, programs, and processes. These policies are often based on a key model of computer security known as access control: To implement a policy, the computer security programs control all accesses by all subjects to all protected objects in all modes of access. A small, centralized control of access is fundamental to preserving confidentiality and integrity, but it is not clear that a single access control point can enforce availability. Indeed, experts on dependability note that single points of control can become single points of failure, making it easy for an attacker to destroy availability by disabling the single control point. Much of computer security’s past success has focused on confidentiality and integrity, and formal models exist for both; for example, see David Bell and Leonard La Padula [BEL73, BEL76] for confidentiality and Kenneth Biba [BIB77] for integrity. Designing effective availability policies is one of security’s great challenges.

We have just described the C-I-A triad and the three fundamental security properties it represents. Our description of these properties was in the context of those things that need protection. To motivate your understanding of these concepts, we offered some examples of harm and threats to cause harm. Our next step is to think about the nature of threats themselves.

Types of Threats

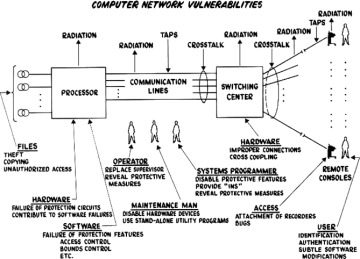

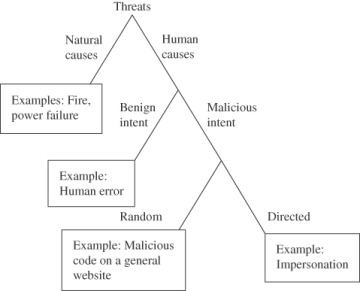

In Figure 1-8, taken from Willis Ware’s report [WAR70], we illustrate some types of harm. Ware’s discussion is still instructive today, even though it was written when computers were so big, so expensive, and so difficult to operate that only large organizations like universities, major corporations, or government departments would have them. Ware was concerned primarily with the protection of classified data, that is, with preserving confidentiality. In the figure, he depicts threats from humans such as programmers and maintenance staff gaining access to data, as well as from radiation by which data can escape as signals. From the figure you can see some of the many kinds of threats to a computer system.

FIGURE 1-8 Computer [Network] Vulnerabilities (from [WAR70])

One way to analyze harm is to consider its cause or source. We call a potential cause of harm a threat. Harm can be caused by humans, of course, either through malicious intent or by accident. But harm can also be caused by nonhuman threats such as natural disasters like fires or floods; loss of electrical power; failure of a component such as a communications cable, processor chip, or disk drive; or attack by a wild animal.

Threats are caused by both human and other sources.

In this book, we focus primarily on human threats. Nonmalicious kinds of harm include someone’s accidentally spilling a soft drink on a laptop, unintentionally deleting text, inadvertently sending an email message to the wrong person, carelessly typing 12 instead of 21 when entering a phone number, or clicking [yes] instead of [no] to overwrite a file. These inadvertent, human errors happen to most people; we just hope that the seriousness of the resulting harm is not too great, or that if it is, the mistake will not be repeated.

Threats can be malicious or not.

Most planned computer security activity relates to potential and actual malicious, human-caused harm: If a malicious person wants to cause harm, we often use the term “attack” for the resulting computer security event. Malicious attacks can be either random or directed. In a random attack, the attacker wants to harm any computer or user; such an attack is analogous to accosting the next pedestrian who walks down the street. Similarly, a random attack might involve malicious code posted on a website that could be visited by anybody.

In a directed attack, the attacker intends to harm specific computers, perhaps at a particular organization (think of attacks against a political group) or belonging to a specific individual (think of trying to drain a specific person’s bank account, for example, by impersonation). Another class of directed attack is against a particular product, such as any computer running a particular browser, perhaps to damage the reputation of the browser’s developer. The range of possible directed attacks is practically unlimited. Different kinds of threats are shown in Figure 1-9.

Threats can be targeted or random.

Although the distinctions shown in Figure 1-9 seem clear-cut, sometimes the nature of an attack is not obvious until the attack is well underway or has even ended. A normal hardware failure can seem like a directed, malicious attack to deny access, and hackers often try to conceal their activity by making system behaviors look like actions of authorized users. As computer security experts, we need to anticipate what bad things might happen and act to prevent them, instead of waiting for the attack to happen or debating whether the attack is intentional or accidental.

FIGURE 1-9 Kinds of Threats
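The distinctions described above (human or nonhuman cause, malicious or nonmalicious intent, random or directed target) form a small decision tree, sketched here in Python. The `Threat` record and the `classify` function are hypothetical names. The example threats come from the text, but the labels assigned to them reflect the analyst's judgment, which, as noted above, may not be settled until an attack is well underway.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Threat:
    description: str
    human: bool
    malicious: bool = False          # meaningful only for human threats
    directed: Optional[bool] = None  # meaningful only for malicious threats


def classify(threat: Threat) -> str:
    """Walk the tree: cause first, then intent, then target."""
    if not threat.human:
        return "nonhuman"
    if not threat.malicious:
        return "human, nonmalicious"
    return "human, malicious, " + ("directed" if threat.directed else "random")


examples = [
    Threat("flood in the machine room", human=False),
    Threat("soft drink spilled on a laptop", human=True),
    Threat("malicious code posted on a public website",
           human=True, malicious=True, directed=False),
    Threat("impersonation to drain one person's bank account",
           human=True, malicious=True, directed=True),
]

for threat in examples:
    print(f"{threat.description}: {classify(threat)}")
```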

Neither this book nor any checklist or method can show you all the harms that can affect computer assets. There are too many ways to interfere with your use of these assets and too many paths to enable the interference. Two retrospective lists of known vulnerabilities are of interest, however. The Common Vulnerabilities and Exposures (CVE) list (see cve.org) is a dictionary of publicly known security vulnerabilities and exposures. The distinct identifiers provide a common language for describing vulnerabilities, enabling data exchange between security products and their users. By classifying vulnerabilities in the same way, researchers can tally the number and types of vulnerabilities identified across systems and provide baseline measurements for evaluating coverage of security tools and services. Similarly, to measure the extent of harm, the Common Vulnerability Scoring System (CVSS) (see nvd.nist.gov/vuln-metrics/cvss) provides a standard measurement system that allows accurate and consistent scoring of vulnerability impact.
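To make these two schemes concrete, the sketch below checks the form of a CVE identifier and maps a CVSS base score to a qualitative severity rating. It assumes the published CVE ID syntax (the prefix "CVE", a four-digit year, and a sequence number of four or more digits) and the CVSS version 3.x rating bands. The function names are hypothetical, and the identifier in the usage lines serves only as an example of the format. Other CVSS versions may band scores differently, so treat the thresholds as version-specific.

```python
import re

# CVE-YYYY-NNNN, where the sequence number has four or more digits.
CVE_ID = re.compile(r"CVE-(\d{4})-(\d{4,})")


def parse_cve_id(text):
    """Return (year, sequence) for a well-formed CVE identifier, else None."""
    match = CVE_ID.fullmatch(text)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def cvss_v3_severity(base_score):
    """Map a CVSS v3.x base score (0.0 to 10.0) to its qualitative rating."""
    if not 0.0 <= base_score <= 10.0:
        raise ValueError("CVSS base scores range from 0.0 to 10.0")
    if base_score == 0.0:
        return "None"
    if base_score < 4.0:
        return "Low"
    if base_score < 7.0:
        return "Medium"
    if base_score < 9.0:
        return "High"
    return "Critical"


print(parse_cve_id("CVE-2014-0160"))  # well formed
print(parse_cve_id("CVE-14-160"))     # malformed: year and sequence too short
print(cvss_v3_severity(7.5))
```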

Cyberthreats

It is time to introduce the widely used term cyber. So far, we have discussed threats and vulnerabilities to computer systems and failings of computer security. Computer security often refers to individual computing devices: a laptop, the computer that maintains a bank’s accounts, or an onboard computer that controls a spacecraft. However, these devices seldom stand alone; they are usually connected to networks of other computers, networked devices (such as thermostats or doorbells with cameras), and the internet. Enlarging the scope from one device to many devices, users, and connections leads to the word “cyber.”

A cyberthreat is thus a threat not just against a single computer but against many computers that belong to a network. Cyberspace is the online world of computers, especially the internet. And a cybercrime is an illegal attack against computers connected to or reached from their network, as well as their users, data, services, and infrastructure. In this book, we examine security as it applies to individual computing devices and single users; but we also consider the broader collection of devices in networks with other users and devices, that is, cybersecurity.

For a parallel situation, consider the phrase “organized crime.” Certainly, organized crime groups commit crimes: extortion, theft, fraud, assault, and murder, among others. But an individual thief operates on a different scale from a gang of thieves who perpetrate many coordinated thefts. Police use many of the same techniques for investigating individual thefts when they look at organized crime, but they also consider the organization and scale of coordinated attacks.

The distinction between computer and cyber is fine, and few people will criticize you if you refer to computer security instead of cybersecurity. The difference is especially tricky because to secure cyberspace we need to secure individual computers, networks, and their users, as well as be aware of how geopolitical issues shape computer security threats and vulnerabilities. But because you will encounter both terms, “computer security” and “cybersecurity,” we want you to recognize the distinctions people mean when they use one or the other.

Our next topic involves threats that are certainly broader than against one or a few computers.

Advanced Persistent Threats

Security experts are becoming increasingly concerned about a particular class of threats called advanced persistent threats. A lone attacker might create a random attack that snares a few, or a few million, individuals, but the resulting impact is limited to what that single attacker can organize and manage. (We do not minimize the harm one person can cause.) Such attackers tend to be opportunistic, picking unlucky victims’ pockets and then moving on to other activities. A collection of attackers—think, for example, of the cyber equivalent of a street gang or an organized crime squad—might work together to purloin credit card numbers or similar financial assets to fund other illegal activity. Such activity can be skillfully planned and coordinated.

Advanced persistent threat attacks come from organized, well-financed, patient assailants. Sometimes affiliated with governments or quasi-governmental groups, these attackers engage in long-term campaigns. They carefully select their targets, crafting attacks that appeal to specifically those targets. For example, a set of email messages called spear phishing (described in Chapter 4, “The Internet—User Side”) is intended to seduce recipients to take a specific action, like revealing financial information or clicking on a link to a site that then downloads malicious code. Typically the attacks are silent, avoiding any obvious impact that would alert a victim and thereby allowing the attacker to continue exploiting the victim’s data or access rights over a long time.

The motive of such attacks is sometimes unclear. One popular objective is economic espionage: stealing information to gain economic advantage. For instance, a series of attacks, apparently organized and supported by the Chinese government, occurred between 2010 and 2019 to obtain product designs from aerospace companies in the United States. Evidence suggested that the stub of the attack code was loaded into victim machines long in advance of the attack; then, the attackers installed the more complex code and extracted the desired data. The U.S. Justice Department indicted four Chinese hackers for these attacks [VIJ19]. Reports indicate that engineering secrets stolen by Chinese actors helped China develop its flagship C919 twinjet airliner.

In the summer of 2014, a series of attacks against J.P. Morgan Chase bank and up to a dozen similar financial institutions allowed the assailants access to 76 million names, phone numbers, and email addresses. The attackers are alleged to have been working together since 2007. The United States indicted two Israelis, one Russian, and one U.S. citizen in connection with the attacks [ZET15]. The indictments allege the attackers operated a stock price manipulation scheme as well as numerous illegal online gambling sites and a cryptocurrency exchange. The four accepted plea agreements requiring prison sentences and the forfeiture of as much as US$74 million.

These two attack sketches should tell you that cyberattacks are an international phenomenon. Attackers can launch strikes from a distant country. They can often disguise the origin to make it difficult to tell immediately where the attack is coming from, much less who is causing it. Stealth is also a characteristic of many attacks so the victim may not readily perceive an attack is imminent or even underway.

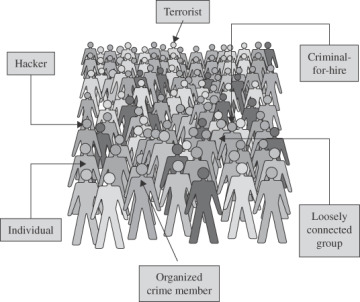

To help you imagine the full landscape of possible attacks, you may find it useful to consider the kinds of people who attack computer systems. Although potentially anyone is an attacker, certain classes of people stand out because of their backgrounds or objectives. Thus, in the following sections, we look at profiles of some classes of attackers.

Types of Attackers

As we have seen, attackers’ motivations range from taking advantage of a chance opportunity to targeting a specific person, government, or system. Putting aside attacks from natural and benign causes or accidents, we can explore who the attackers are and what motivates them.

Most studies of attackers focus on computer criminals, that is, people who have actually been convicted of crimes, primarily because that group is easy to identify and study. The ones who got away or who carried off an attack without being detected may have characteristics different from those of criminals who have been caught. Worse, by studying only the criminals we have caught, we may not learn how to catch attackers who know how to abuse the system without being apprehended.

What does a cyber criminal look like? In cowboy, fairy tale, and gangster television shows and films, villains often wear shabby clothes, look mean and sinister, and live in gangs somewhere out of town. By contrast, the “good guys” dress well, stand proud and tall, are known and respected by everyone in town, and strike fear in the hearts of most criminals. It certainly would be convenient if we could identify cyber criminals as easily as the villains in these dramas.

To be sure, some computer criminals are mean and sinister types. But many more wear business suits, have university degrees, and appear to be pillars of their communities. Some are high school or university students. Others are middle-aged business executives. Some are overtly hostile, have mental health issues, or are extremely committed to a cause, and they attack computers as a symbol of something larger that outrages them. Other criminals are ordinary people tempted by personal profit, revenge, challenge, advancement, or job security—like perpetrators of any crime, using a computer or not.

Researchers have tried to discover psychological traits that distinguish attackers from law-abiding citizens. These studies are far from conclusive, however, and the traits they identify may show correlation but not necessarily causality. To appreciate this point, suppose a study found that a disproportionate number of people convicted of computer crime were left-handed. Would that result imply that all left-handed people are computer criminals or that only left-handed people are? Certainly not. No single profile captures the characteristics of a “typical” computer attacker, and the characteristics of some notorious attackers also match many people who are not attackers. As shown in Figure 1-10, attackers look just like anybody in a crowd.

FIGURE 1-10 Attackers

No one pattern matches all attackers.

Individuals

Originally, computer attackers were individuals acting alone, with motives of fun, challenge, or revenge. Two of the best known among them are Robert Morris, Jr., the Cornell University graduate student who brought down the internet in 1988 [SPA89], and Kevin Mitnick, the man who broke into and stole data from dozens of computers, including the San Diego Supercomputer Center [MAR95]. In Sidebar 1-1, we describe an aspect of Mitnick’s psychology that may relate to his hacking activity.

Worldwide Groups

More recent attacks have involved groups of people. An attack against the government of Estonia (described in more detail in Chapter 13, “Emerging Topics”) is believed to have been an uncoordinated outburst from a loose federation of attackers from around the world. Kevin Poulsen [POU05] quotes Tim Rosenberg, a research professor at George Washington University, warning of “multinational groups of hackers backed by organized crime” and showing the sophistication of prohibition-era mobsters. Poulsen also reports that Christopher Painter, deputy director of the U.S. Department of Justice’s computer crime section from 2001 to 2008, argued that cyber criminals and serious fraud artists are increasingly working in concert or are one and the same. According to Painter, loosely connected groups of criminals all over the world work together to break into systems and steal and sell information, such as credit card numbers.

To fight coordinated crime, law enforcement agencies throughout the world are joining forces. For example, the attorneys general of five nations known as “the quintet”—the United States, the United Kingdom, Canada, Australia, and New Zealand—meet regularly to present a united front in fighting cybercrime. The United States has also established a transnational and high-tech crime global law enforcement network, with special attention paid to cybercrime. Personnel from the U.S. Department of Justice are stationed in São Paulo, Bucharest, The Hague, Zagreb, Hong Kong, Kuala Lumpur, and Bangkok to build relationships with their counterparts in other countries and work collaboratively on cybercrime, cyber-enabled crime (such as online fraud), and intellectual property crime. In 2021, after four years of negotiations, the Council of Europe amended its 2001 treaty to facilitate cooperation among European nations in fighting cybercrime.

Whereas early motives for computer attackers were personal, such as prestige or accomplishment (they could brag about getting around a system’s security), recent attacks have been heavily influenced by financial gain. Security firm McAfee reports, “Criminals have realized the huge financial gains to be made from the internet with little risk. They bring the skills, knowledge, and connections needed for large scale, high-value criminal enterprise that, when combined with computer skills, expand the scope and risk of cybercrime” [MCA05].

Organized Crime

Emerging cybercrimes include fraud, extortion, money laundering, and drug trafficking, areas in which organized crime has a well-established presence. In fact, traditional criminals are recruiting hackers to join the lucrative world of cybercrime. For example, Albert Gonzalez was sentenced in March 2010 to 20 years in prison for working with a crime ring to steal 40 million credit card numbers from retailer TJMaxx and others, costing over $200 million (Reuters, 26 March 2010).

Organized crime may use computer crime (such as stealing credit card numbers or bank account details) to finance other aspects of crime. Recent attacks suggest that professional criminals have discovered just how lucrative computer crime can be. Mike Danseglio, a security project manager with Microsoft, said, “In 2006, the attackers want to pay the rent. They don’t want to write a worm that destroys your hardware. They want to assimilate your computers and use them to make money” [NAR06a]. Mikko Hyppönen, chief research officer with Finnish security company F-Secure, observes that with today’s attacks often coming from Russia, Asia, and Brazil, the motive is now profit, not fame [BRA06]. Ken Dunham, director of the Rapid Response Team for Verisign, says he is “convinced that groups of well-organized mobsters have taken control of a global billion-dollar crime network powered by skillful hackers” [NAR06b].

Organized crime groups are discovering that computer crime can be lucrative.

Brian Snow [SNO05] observes that individual hackers sometimes want a score or some kind of evidence to give them bragging rights. On the other hand, organized crime seeks a resource, such as profit or access; such criminals want to stay under the radar to be able to extract profit or information from the system over time. These different objectives lead to different approaches to crime: The novice hacker often uses a crude attack, whereas the professional attacker wants a neat, robust, and undetectable method that can deliver rewards for a long time. For more detail on the organization of organized crime, see Sidebar 1-2.

Terrorists

The link between computer security and terrorism is quite evident. We see terrorists using computers in four ways:

Computer as target of attack: Denial-of-service attacks and website defacements are popular activities for any political organization because they attract attention to the cause and bring undesired negative attention to the object of the attack. An example is an attack in 2020 from several Chinese ISPs designed to block traffic to a range of Google addresses. (We explore denial-of-service attacks in Chapter 13.)

Computer as method of attack: Launching offensive attacks can require the use of computers. Stuxnet, an example of malicious computer code called a worm, was known to attack automated control systems, specifically a model of control system manufactured by Siemens. Experts say the code is designed to disable machinery used in the control of nuclear reactors in Iran [MAR10]. The infection is believed to have spread through USB flash drives brought in by engineers maintaining the computer controllers. (We examine the Stuxnet worm in more detail in Chapter 6.)

Computer as enabler of attack: Websites, weblogs, and email lists are effective, fast, and inexpensive ways to allow many people to coordinate. According to the Council on Foreign Relations, the terrorists responsible for the November 2008 attack that killed over 200 people in Mumbai used GPS systems to guide their boats, BlackBerry smartphones for their communication, and Google Earth to plot their routes.

Computer as enhancer of attack: The internet has proved to be an invaluable means for terrorists to spread propaganda and recruit agents. In October 2009, the FBI arrested Colleen LaRose, also known as JihadJane, after she had spent months using email, YouTube, MySpace, and electronic message boards to recruit radicals in Europe and South Asia to “wage violent jihad,” according to a federal indictment. LaRose pleaded guilty to all charges and in 2014 was sentenced to ten years in prison.

We cannot accurately measure the degree to which terrorists use computers. Not only do terrorists keep secret the nature of their activities, but our definitions and measurement tools are rather weak. Still, incidents like the one described in Sidebar 1-3 provide evidence that all four of these activities are increasing.

In this section, we point out several ways that outsiders can gain access to or affect the workings of your computer system from afar. In the next section, we examine the harm that can come from the presence of a computer security threat on your own computer systems.