- Professionalism

- Reasonable Expectations

- The Bill of Rights

- Conclusion

Reasonable Expectations

What follows is a list of perfectly reasonable expectations that managers, users, and customers have of us. Notice as you read through this list that one side of your brain agrees that each item is perfectly reasonable. Notice that the other side of your brain, the programmer side, reacts in horror. The programmer side of your brain may not be able to imagine how to meet these expectations.

Meeting these expectations is one of the primary goals of Agile development. The principles and practices of Agile address most of the expectations on this list quite directly. The behaviors below are what any good chief technology officer (CTO) should expect from their staff. Indeed, to drive this point home, I want you to think of me as your CTO. Here is what I expect.

We Will Not Ship Shyt!

It is an unfortunate aspect of our industry that this expectation even has to be mentioned. But it does. I’m sure, dear readers, that many of you have fallen afoul of this expectation on one or more occasions. I certainly have.

To understand just how severe this problem is, consider the shutdown of the Air Traffic Control network over Los Angeles due to the rollover of a 32-bit clock. Or the shutdown of all the power generators on board the Boeing 787 for the same reason. Or the hundreds of people killed by the 737 Max MCAS software.
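The failures above share one mechanism: a clock counter that rolls over. The sketch below is a hypothetical illustration in Python of why such a counter eventually fails. It assumes an unsigned 32-bit counter that ticks once per millisecond. The names `tick`, `days_until_rollover`, `naive_elapsed`, and `wrap_safe_elapsed` are invented for illustration. This is not code from the air traffic control system or the 787.

```python
# Hypothetical illustration of a fixed-width clock counter rolling over.
# Assumes an unsigned 32-bit counter that ticks once per millisecond;
# this is NOT the code from any real system mentioned in the text.


def days_until_rollover(bits: int = 32, ticks_per_second: int = 1000) -> float:
    """Days a counter of the given width runs before wrapping back to zero."""
    return 2**bits / ticks_per_second / 86_400


def tick(counter: int, ticks: int, bits: int = 32) -> int:
    """Advance the counter, wrapping the way fixed-width hardware arithmetic does."""
    return (counter + ticks) % 2**bits


def naive_elapsed(start: int, end: int) -> int:
    """Plain subtraction: goes badly negative once the counter has wrapped."""
    return end - start


def wrap_safe_elapsed(start: int, end: int, bits: int = 32) -> int:
    """Modular subtraction: correct across a single wrap of the counter."""
    return (end - start) % 2**bits


if __name__ == "__main__":
    print(f"{days_until_rollover():.1f} days")  # 49.7 days
    start = 2**32 - 5         # five ticks before the wrap
    end = tick(start, 10)     # ten ticks later the counter reads 5
    print(naive_elapsed(start, end), wrap_safe_elapsed(start, end))
```

Nothing in this code fails on day one. It fails only after weeks of continuous running, long after any short test session would have ended. Changing the parameters moves the failure date. With `bits=31` (the positive range of a signed 32-bit integer) and `ticks_per_second=100`, the same function gives roughly 248.6 days.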

Or how about my own experience with the early days of healthcare.gov? After initial login, like so many systems nowadays, it asked for a set of security questions. One of those was “A memorable date.” I entered 7/21/73, my wedding anniversary. The system responded with “Invalid Entry.”

I’m a programmer. I know how programmers think. So I tried many different date formats: 07/21/1973, 07-21-1973, 21 July, 1973, 07211973, etc. All gave me the same result: “Invalid Entry.” This was frustrating. What date format did the blasted thing want?

Then it occurred to me. The programmer who wrote this didn’t know what questions would be asked. He or she was just pulling the questions from a database and storing the answers. That programmer was probably also disallowing special characters and numbers in those answers. So I typed: Wedding Anniversary. This was accepted.

I think it’s fair to say that any system that requires its users to think like programmers in order to enter data in the expected format is crap.

I could fill this section with anecdotes about crappy software like this. But others have done this far better than I could. If you want to get a much better idea of the scope of this issue, read Gojko Adzic’s book Humans vs. Computers3 and Matt Parker’s Humble Pi.4

It is perfectly reasonable for our managers, customers, and users to expect that we will provide systems for them that are high in quality and low in defects. Nobody expects to be handed crap, especially when they pay good money for it.

Note that Agile’s emphasis on Testing, Refactoring, Simple Design, and customer feedback is the obvious remedy for shipping bad code.

Continuous Technical Readiness

The last thing that customers and managers expect is that we, programmers, will create artificial delays in shipping the system. But such artificial delays are common in software teams. The cause of such delays is often the attempt to build all features simultaneously instead of the most important features first. So long as there are features that are half done, or half tested, or half documented, the system cannot be deployed.

Another source of artificial delays is the notion of stabilization. Teams will frequently set aside a period of continuous testing during which they watch the system to see if it fails. If no failures are detected after X days, the developers feel safe to recommend the system for deployment.

Agile resolves these issues with the simple rule that the system should be technically deployable at the end of every iteration. Technically deployable means that from the developers’ points of view the system is technically solid enough to be deployed. The code is clean and the tests all pass.

This means that the work completed in the iteration includes all the coding, all the testing, all the documentation, and all the stabilization for the stories implemented in that iteration.

If the system is technically ready to deploy at the end of every iteration, then deployment is a business decision, not a technical decision. The business may decide there aren’t enough features to deploy, or they may decide to delay deployment for market reasons or training reasons. In any case, the system quality meets the technical bar for deployability.

Is it possible for the system to be technically deployable every week or two? Of course it is. The team simply has to pick a batch of stories that is small enough to allow them to complete all the deployment readiness tasks before the end of the iteration. They’d better be automating the vast majority of their testing, too.

From the point of view of the business and the customers, continuous technical readiness is simply expected. When the business sees a feature work, they expect that feature is done. They don’t expect to be told that they have to wait a month for QA stabilization. They don’t expect that the feature only worked because the programmers driving the demo bypassed all the parts that don’t work.

Stable Productivity

You may have noticed that programming teams can often go very fast in the first few months of a greenfield project. When there’s no existing code base to slow you down, you can get a lot of code working in a short time.

Unfortunately, as time passes, the messes in the code can accumulate. If that code is not kept clean and orderly, it will exert back pressure on the team that slows progress. The bigger the mess, the higher the back pressure, and the slower the team. The slower the team, the greater the schedule pressure, and the greater the incentive to make an even bigger mess. That positive-feedback loop can drive a team to near immobility.

Managers, puzzled by this slowdown, may finally decide to add human resources to the team in order to increase productivity. But as we saw in the previous chapter, adding personnel actually slows down the team for a few weeks.

The hope is that after those weeks the new people will come up to speed and help to increase the velocity. But who is training the new people? The people who made the mess in the first place. The new people will certainly emulate that established behavior.

Worse, the existing code is an even more powerful instructor. The new people will look at the old code and surmise how things are done in this team, and they will continue the practice of making messes. So the productivity continues to plummet despite the addition of the new folks.

Management might try this a few more times because repeating the same thing and expecting different results is the definition of management sanity in some organizations. In the end, however, the truth will be clear. Nothing that managers do will stop the inexorable plunge towards immobility.

In desperation, the managers ask the developers what can be done to increase productivity. And the developers have an answer. They have known for some time what needs to be done. They were just waiting to be asked.

“Redesign the system from scratch,” the developers say.

Imagine the horror of the managers. Imagine the money and time that has been invested so far into this system. And now the developers are recommending that the whole thing be thrown away and redesigned from scratch!

Do those managers believe the developers when they promise, “This time things will be different”? Of course they don’t. They’d have to be fools to believe that. Yet, what choice do they have? Productivity is on the floor. The business isn’t sustainable at this rate. So, after much wailing and gnashing of teeth, they agree to the redesign.

A cheer goes up from the developers. “Hallelujah! We are all going back to the beginning when life is good and code is clean!” Of course, that’s not what happens at all. What really happens is that the team is split in two. The ten best, the Tiger Team (the very guys who made the mess in the first place), are chosen and moved into a new room. They will lead the rest of us into the golden land of a redesigned system. The rest of us hate those guys because now we’re stuck maintaining the old crap.

From where does the Tiger Team get their requirements? Is there an up-to-date requirements document? Yes. It’s the old code. The old code is the only document that accurately describes what the redesigned system should do.

So now the Tiger Team is poring over the old code trying to figure out just what it does and what the new design ought to be. Meanwhile the rest of us are changing that old code, fixing bugs and adding new features.

Thus, we are in a race. The Tiger Team is trying to hit a moving target. And, as Zeno’s paradox of Achilles and the tortoise illustrates, trying to catch up to a moving target can be a challenge. Every time the Tiger Team gets to where the old system was, the old system has moved on to a new position.

It requires calculus to prove that Achilles will eventually pass the tortoise. In software, however, that math doesn’t always work. I worked at a company where ten years later the new system had not yet been deployed. The customers had been promised a new system eight years before. But the new system never had enough features for those customers; the old system always did more than the new system. So the customers refused to take the new system.

After a few years, customers simply ignored the promise of the new system. From their point of view that system didn’t exist, and it never would.

Meanwhile, the company was paying for two development teams: the Tiger Team and the maintenance team. Eventually, management got so frustrated that they told their customers they were deploying the new system despite their objections. The customers threw a fit over this, but it was nothing compared to the fit thrown by the developers on the Tiger Team—or, should I say, the remnants of the Tiger Team. The original developers had all been promoted and gone off to management positions. The current members of the team stood up in unison and said, “You can’t ship this, it’s crap. It needs to be redesigned.”

OK, yes, another hyperbolic story told by Uncle Bob. The story is based on truth, but I did embellish it for effect. Still, the underlying message is entirely true. Big redesigns are horrifically expensive and are seldom deployed.

Customers and managers don’t expect software teams to slow down with time. Rather, they expect that a feature similar to one that took two weeks at the start of a project will take two weeks a year later. They expect productivity to be stable over time.

Developers should expect no less. By continuously keeping the architecture, design, and code as clean as possible, they can keep their productivity high and prevent the otherwise inevitable spiral into low productivity and redesign.

As we will show, the Agile practices of Testing, Pairing, Refactoring, and Simple Design are the technical keys to breaking that spiral. And the Planning Game is the antidote to the schedule pressure that drives that spiral.

Inexpensive Adaptability

Software is a compound word. The word “ware” means “product.” The word “soft” means easy to change. Therefore, software is a product that is easy to change. Software was invented because we wanted a way to quickly and easily change the behavior of our machines. Had we wanted that behavior to be hard to change, we would have called it hardware.

Developers often complain about changing requirements. I have often heard statements like, “That change completely thwarts our architecture.” I’ve got some news for you, sunshine. If a change to the requirements breaks your architecture, then your architecture sucks.

We developers should celebrate change because that’s why we are here. Changing requirements is the name of the whole game. Those changes are the justification for our careers and our salaries. Our jobs depend on our ability to accept and engineer changing requirements and to make those changes relatively inexpensive.

To the extent that a team’s software is hard to change, that team has thwarted the very reason for that software’s existence. Customers, users, and managers expect that software systems will be easy to change and that the cost of such changes will be small and proportionate.

We will show how the Agile practices of TDD, Refactoring, and Simple Design all work together to make sure that software systems can be safely changed with a minimum of effort.

Continuous Improvement

Humans make things better with time. Painters improve their paintings, songwriters improve their songs, and homeowners improve their homes. The same should be true for software. The older a software system is, the better it should be.

The design and architecture of a software system should get better with time. The structure of the code should improve, and so should the efficiency and throughput of the system. Isn’t that obvious? Isn’t that what you would expect from any group of humans working on anything?

It is the single greatest indictment of the software industry, the most obvious evidence of our failure as professionals, that we make things worse with time. The fact that we developers expect our systems to get messier, cruftier, and more brittle and fragile with time is, perhaps, the most irresponsible attitude possible.

Continuous, steady improvement is what users, customers, and managers expect. They expect that early problems will fade away and that the system will get better and better with time. The Agile practices of Pairing, TDD, Refactoring, and Simple Design strongly support this expectation.

Fearless Competence

Why don’t most software systems improve with time? Fear. More specifically, fear of change.

Imagine you are looking at some old code on your screen. Your first thought is, “This is ugly code, I should clean it.” Your next thought is, “I’m not touching it!” Because you know if you touch it, you will break it; and if you break it, it will become yours. So you back away from the one thing that might improve the code: cleaning it.

This is a fearful reaction. You fear the code, and this fear forces you to behave incompetently. You are incompetent to do the necessary cleaning because you fear the outcome. You have allowed this code, which you created, to go so far out of your control that you fear any action to improve it. This is irresponsible in the extreme.

Customers, users, and managers expect fearless competence. They expect that if you see something wrong or dirty, you will fix and clean it. They don’t expect you to allow problems to fester and grow; they expect you to stay on top of the code, keeping it as clean and clear as possible.

So how do you eliminate that fear? Imagine that you own a button that controls two lights: one red, the other green. Imagine that when you push this button, the green light is lit if the system works, and the red light is lit if the system is broken. Imagine that pushing that button and getting the result takes just a few seconds. How often would you push that button? You’d never stop. You’d push that button all the time. Whenever you made any change to the code, you’d push that button to make sure you hadn’t broken anything.

Now imagine that you are looking at some ugly code on your screen. Your first thought is, “I should clean it.” And then you simply start to clean it, pushing the button after each small change to make sure you haven’t broken anything.

The fear is gone. You can clean the code. You can use the Agile practices of Refactoring, Pairing, and Simple Design to improve the system.

How do you get that button? The Agile practice of TDD provides that button for you. If you follow that practice with discipline and determination, you will have that button, and you will be fearlessly competent.
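In practice, the button is whatever command runs the test suite. The following is a minimal sketch using Python’s standard `unittest` module. The `normalize_whitespace` function is a hypothetical stand-in for some code you want to clean up. The `push_the_button` helper is an invented name chosen to match the metaphor. TDD would have you write these tests before the code. The sketch shows only the resulting suite, not that sequence.

```python
import unittest


# Hypothetical code under test: a stand-in for the code you want to clean.
def normalize_whitespace(text: str) -> str:
    """Collapse runs of whitespace into single spaces and trim both ends."""
    return " ".join(text.split())


class NormalizeWhitespaceTest(unittest.TestCase):
    def test_collapses_internal_runs(self):
        self.assertEqual(normalize_whitespace("a   b\t\tc"), "a b c")

    def test_trims_ends(self):
        self.assertEqual(normalize_whitespace("  hello  "), "hello")

    def test_empty_string_stays_empty(self):
        self.assertEqual(normalize_whitespace(""), "")


def push_the_button() -> bool:
    """Run the suite; True means the green light, False means the red one."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(NormalizeWhitespaceTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()


if __name__ == "__main__":
    print("GREEN" if push_the_button() else "RED")
```

Run it after every small change. A green result means the cleanup preserved the tested behavior. A red result points straight at the change you just made. The button is only as trustworthy as the tests behind it, and that is why the discipline of the practice matters.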

QA Should Find Nothing

QA should find no faults with the system. When QA runs their tests, they should come back saying that everything works as required. Any time QA finds a problem, the development team should find out what went wrong in their process and fix it so that next time QA will find nothing.

QA should wonder why they are stuck at the back end of the process checking systems that always work. And, as we shall see, there is a much better place for QA to be positioned.

The Agile practices of Acceptance Tests, TDD, and Continuous Integration support this expectation.

Test Automation

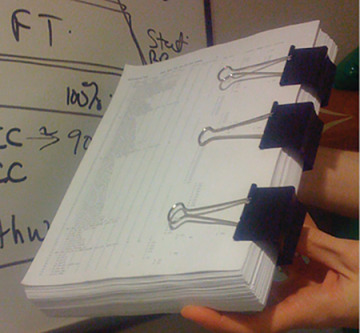

The hands you see in the picture in Figure 2.1 are the hands of a QA manager. The document that manager is holding is the table of contents for a manual test plan. It lists 80,000 manual tests that must be run every six months by an army of testers in India. It costs over a million dollars to run those tests.

Figure 2.1 Table of contents for the manual test plan

The QA manager is holding this document out to me because he just got back from his boss’s office. His boss just got back from the CFO’s office. The year is 2008, and the Great Recession has begun. The CFO has cut that million-dollar, six-month testing budget in half. The QA manager is holding this document out to me, asking me which half of these tests he shouldn’t run.

I told him that no matter how he decided to cut the tests, he wouldn’t know if half of his system was working.

This is the inevitable result of manual testing. Manual tests are always eventually lost. What I just described was the first and most obvious mechanism for losing manual tests: manual tests are expensive and so are always a target for reduction.

However, there is a more insidious mechanism for losing manual tests. Developers seldom deliver to QA on time. This means that QA has less time than planned to run the tests they need to run. So, QA must choose which tests they believe are most appropriate to run in order to make the shipment deadline. And so some tests aren’t run. They are lost.

And besides, humans are not machines. Asking humans to do what machines can do is expensive, inefficient, and immoral. There is a much better activity for which QA should be employed—an activity that uses their human creativity and imagination. But we’ll get to that.

Customers and users expect that every new release is thoroughly tested. No one expects the development team to bypass tests just because they ran out of time or money. So every test that can feasibly be automated must be automated. Manual testing should be limited to those things that cannot be automatically validated and to the creative discipline of Exploratory Testing.5

The Agile practices of TDD, Continuous Integration, and Acceptance Testing support this expectation.

We Cover for Each Other

As CTO, I expect development teams to behave like teams. How do teams behave? Imagine a team of players moving the ball down the field. One of the players trips and falls. What do the other players do? They cover the open hole left behind by the fallen team member and continue to move the ball down the field.

On board a ship, everyone has a job. Everyone also knows how to do someone else’s job. Because on board the ship, all jobs must get done.

In a software team, if Bob gets sick, Jill steps in to finish Bob’s tasks. This means that Jill had better know what Bob was working on and where Bob keeps all the source files, scripts, and so on.

I expect that the members of each software team will cover for each other. I expect that each individual member of a software team makes sure that there is someone who can cover for him if he goes down. It is your responsibility to make sure that one or more of your teammates can cover for you.

If Bob is the database guy, and Bob gets sick, I don’t expect progress on the project to grind to a halt. Someone else, even though she isn’t “the database guy,” should pick up the slack. I don’t expect the team to keep knowledge in silos; I expect knowledge to be shared. If I need to reassign half the members of the team to a new project, I do not expect that half the knowledge will be removed from the team.

The Agile practices of Pair Programming, Whole Team, and Collective Ownership support these expectations.

Honest Estimates

I expect estimates, and I expect them to be honest. The most honest estimate is “I don’t know.” However, that estimate is not complete. You may not know everything, but there are some things you do know. So I expect you to provide estimates based on what you do and don’t know.

For example, you may not know how long something will take, but you can compare one task to another in relative terms. You may not know how long it will take to build the Login page, but you might be able to tell me that the Change Password page will take about half as long as Login. Relative estimates like that are immensely valuable, as we will see in a later chapter.

Or, instead of estimating in relative terms, you may be able to give me a range of probabilities. For example, you might tell me that the Login page will take anywhere from 5 to 15 days to complete with an average completion time of 12 days. Such estimates combine what you do and don’t know into an honest probability for managers to manage.

The Agile practices of the Planning Game and Whole Team support this expectation.

You Need to Say “No”

While it is important to strive to find solutions to problems, I expect you to say “no” when no such solution can be found. You need to realize that you were hired more for your ability to say “no” than for your ability to code. You, programmers, are the ones who know whether something is possible. As your CTO, I am counting on you to inform us when we are headed off a cliff. I expect that, no matter how much schedule pressure you feel, no matter how many managers are demanding results, you will say “no” when the answer really is “no.”

The Agile practice of Whole Team supports this expectation.

Continuous Aggressive Learning

As CTO, I expect you to keep learning. Our industry changes quickly. We must be able to change with it. So learn, learn, learn! Sometimes the company can afford to send you to courses and conferences. Sometimes the company can afford to buy books and training videos. But if not, then you must find ways to continue learning without the company’s help.

The Agile practice of Whole Team supports this expectation.

Mentoring

As CTO, I expect you to teach. Indeed, the best way to learn is to teach. So when new people join the team, teach them. Learn to teach each other.

Again, the Agile practice of Whole Team supports this expectation.