Device Memory Management

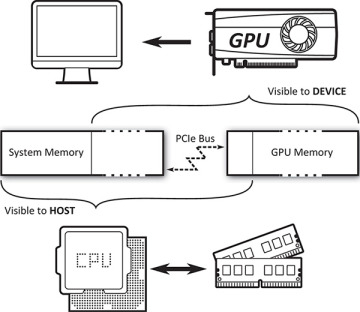

When the Vulkan device operates on data, the data must be stored in device memory. This is memory that is accessible to the device. In a Vulkan system there are four classes of memory. Some systems may have only a subset of these, and some may only have two. Given a host (the processor upon which your application is running) and a device (the processor that executes your Vulkan commands), there could be separate memory physically attached to each. In addition, some regions of the physical memory attached to each processor might be accessible to the other processor or processors in the system.

In some cases, the visible region of shared memory might be relatively small, and in other cases, there may actually be only one physical piece of memory, which is shared between the host and the device. Figure 2.5 demonstrates the memory map of a host and device with physically separate memories.

Figure 2.5: Host and Device Memory

Any memory that is accessible to the device is known as device memory, even if that memory is physically attached to the host. In this case, it is host local device memory. This is distinct from host memory (also known as system memory), which is regular memory allocated with a function such as malloc or new. Device memory may also be accessible to the host through a mapping.

A typical discrete GPU as found on an add-in card plugged into a PCI-Express slot will have an amount of dedicated memory physically attached to its circuit board. Some part of this memory may be accessible only to the device, and some part of the memory may be accessible to the host through some form of window. In addition, the GPU will have access to some or all of the host’s system memory. Each of these pools of memory will appear to the host as a heap, and memory in those heaps is exposed through the various memory types.

On the other hand, a typical embedded GPU, such as those found in embedded systems, mobile devices, or even laptop processors, may share a memory controller and subsystem with the host processor. In this case, it is likely that access to main system memory is coherent and the device will expose fewer heaps, perhaps only one. This is considered a unified memory architecture.

Allocating Device Memory

A device memory allocation is represented as a VkDeviceMemory object that is created using the vkAllocateMemory() function, the prototype of which is

VkResult vkAllocateMemory (

VkDevice device,

const VkMemoryAllocateInfo* pAllocateInfo,

const VkAllocationCallbacks* pAllocator,

VkDeviceMemory* pMemory);

The device that will use the memory is passed in device. pAllocateInfo describes the new device memory object which, if the allocation is successful, will be placed in the variable pointed to by pMemory. pAllocateInfo points to an instance of the VkMemoryAllocateInfo structure, the definition of which is

typedef struct VkMemoryAllocateInfo {

VkStructureType sType;

const void* pNext;

VkDeviceSize allocationSize;

uint32_t memoryTypeIndex;

} VkMemoryAllocateInfo;

This is a simple structure containing only the size and the memory type to be used for the allocation. sType should be set to VK_STRUCTURE_TYPE_MEMORY_ALLOCATE_INFO, and pNext should be set to nullptr unless an extension is in use that requires more information about the allocation. The size of the allocation is passed in allocationSize and is measured in bytes. The memory type, passed in memoryTypeIndex, is an index into the memory type array returned from a call to vkGetPhysicalDeviceMemoryProperties(), as described in “Physical Device Memory” in Chapter 1, “Overview of Vulkan.”

Once you have allocated device memory, it can be used to back resources such as buffers and images. Vulkan may use device memory for other purposes, such as other types of device objects, internal allocations and data structures, scratch storage, and so on. These allocations are managed by the Vulkan driver, as the requirements may vary quite widely between implementations.

When you are done with a memory allocation, you need to free it. To do this, call vkFreeMemory(), the prototype of which is

void vkFreeMemory (

VkDevice device,

VkDeviceMemory memory,

const VkAllocationCallbacks* pAllocator);

vkFreeMemory() takes the memory object directly in memory. It is your responsibility to ensure that there is no work queued up to a device that might use the memory object before you free it. Vulkan will not track this for you. If a device attempts to access memory after it’s been freed, the results can be unpredictable and can easily crash your application.

Further, access to memory must be externally synchronized. Attempting to free device memory with a call to vkFreeMemory() while another command is executing in another thread will produce undefined behavior and possibly crash your application.

On some platforms, there may be an upper bound to the total number of memory allocations that can exist within a single process. If you try to create more allocations than this limit, allocation could fail. This limit can be determined by calling vkGetPhysicalDeviceProperties() and inspecting the maxMemoryAllocationCount field of the returned VkPhysicalDeviceLimits structure. The limit is guaranteed to be at least 4,096 allocations, though some platforms may report a much higher limit. Although this may seem low, the intention is that you create a small number of large allocations and then suballocate from them to place many resources in the same allocation. There is no upper limit to the total number of resources that can be created, memory allowing.

Normally, when you allocate memory from a heap, that memory is permanently assigned to the returned VkDeviceMemory object until that object is destroyed by calling vkFreeMemory(). In some cases, you (or even the Vulkan implementation) may not know exactly how much memory is required for certain operations, or indeed whether any memory is required at all.

In particular, this is often the case for images that are used for intermediate storage of data during rendering. When the image is created, if the VK_IMAGE_USAGE_TRANSIENT_ATTACHMENT_BIT is included in the VkImageCreateInfo structure, then Vulkan knows that the data in the image will live for a short time, and therefore, it’s possible that it may never need to be written out to device memory.

In this case, you can ask Vulkan to be lazy with its allocation of the memory object to defer true allocation until Vulkan can determine that the physical storage for data is really needed. To do this, choose a memory type with the VK_MEMORY_PROPERTY_LAZILY_ALLOCATED_BIT set. Choosing an otherwise-appropriate memory type that does not have this bit set will still work correctly but will always allocate the memory up front, even if it never ends up being used.

If you want to know whether a memory allocation is physically backed and how much backing has actually been allocated for a memory object, call vkGetDeviceMemoryCommitment(), the prototype of which is

void vkGetDeviceMemoryCommitment (

VkDevice device,

VkDeviceMemory memory,

VkDeviceSize* pCommittedMemoryInBytes);

The device that owns the memory allocation is passed in device and the memory allocation to query is passed in memory. pCommittedMemoryInBytes is a pointer to a variable that will be overwritten with the number of bytes actually allocated for the memory object. That commitment will always come from the heap associated with the memory type used to allocate the memory object.

For memory objects allocated with memory types that don’t include VK_MEMORY_PROPERTY_LAZILY_ALLOCATED_BIT, or if the memory object ended up fully committed, vkGetDeviceMemoryCommitment() will always return the full size of the memory object. The commitment returned from vkGetDeviceMemoryCommitment() is informational at best. In many cases, the information could be out of date, and there’s not much you can do with the information anyway.

Host Access to Device Memory

As discussed earlier in this chapter, device memory is divided into multiple regions. Pure device memory is accessible only to the device. However, there are regions of memory that are accessible to both the host and the device. The host is the processor upon which your application is running, and it is possible to ask Vulkan to give you a pointer to memory allocated from host-accessible regions. This is known as mapping memory.

To map device memory into the host’s address space, the memory object to be mapped must have been allocated from a memory type that has the VK_MEMORY_PROPERTY_HOST_VISIBLE_BIT flag set in its property flags. Assuming that this is the case, mapping the memory to obtain a pointer usable by the host is achieved by calling vkMapMemory(), the prototype of which is

VkResult vkMapMemory (

VkDevice device,

VkDeviceMemory memory,

VkDeviceSize offset,

VkDeviceSize size,

VkMemoryMapFlags flags,

void** ppData);

The device that owns the memory object to be mapped is passed in device, and the handle to the memory object being mapped is passed in memory. Access to the memory object must be externally synchronized. To map a range of a memory object, specify the starting offset in offset and the size of the region in size. If you want to map the entire memory object, set offset to 0 and size to VK_WHOLE_SIZE. Setting offset to a nonzero value and size to VK_WHOLE_SIZE will map the memory object starting from offset to the end. offset and size are both specified in bytes. You should not attempt to map a region of the memory object that extends beyond its bounds.
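The offset and size rules above can be sketched as a small range check that you might run before calling vkMapMemory(). This is only an illustration: DeviceSize, kWholeSize, MappedRange, and resolveMapRange() are stand-in names invented here so that the sketch compiles without the Vulkan headers, and the allocation size is assumed to be something your application recorded when it allocated the memory object.

```cpp
#include <cstdint>

// Stand-ins for VkDeviceSize and VK_WHOLE_SIZE (hypothetical names) so the
// sketch builds without <vulkan/vulkan.h>.
using DeviceSize = std::uint64_t;
constexpr DeviceSize kWholeSize = ~0ull;  // same value as VK_WHOLE_SIZE

struct MappedRange
{
    DeviceSize offset;
    DeviceSize size;
};

// Computes the byte range that a map request would cover. Returns false if
// the request would extend beyond the bounds of the memory object.
bool resolveMapRange(DeviceSize allocationSize,
                     DeviceSize offset,
                     DeviceSize size,
                     MappedRange* outRange)
{
    if (offset >= allocationSize)
    {
        return false;  // Nothing left to map at this offset.
    }

    const DeviceSize remaining = allocationSize - offset;

    if (size == kWholeSize)
    {
        // Map from offset to the end of the memory object.
        outRange->offset = offset;
        outRange->size = remaining;
        return true;
    }

    if (size == 0 || size > remaining)
    {
        return false;  // Empty range, or range runs past the end.
    }

    outRange->offset = offset;
    outRange->size = size;
    return true;
}
```

For a 1,024-byte allocation, an offset of 256 with the whole-size value resolves to the 768 bytes from offset 256 to the end, while an offset of 512 with a size of 1,024 is rejected.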

The flags parameter is reserved for future use and should be set to zero.

If vkMapMemory() is successful, a pointer to the mapped region is written into the variable pointed to by ppData. This pointer can then be cast to the appropriate type in your application and dereferenced to directly read and write the device memory. Vulkan guarantees that pointers returned from vkMapMemory() are aligned to an integer multiple of the device’s minimum memory mapping alignment when offset is subtracted from them.

This value is reported in the minMemoryMapAlignment field of the VkPhysicalDeviceLimits structure returned from a call to vkGetPhysicalDeviceProperties(). It is guaranteed to be at least 64 bytes but could be any higher power of two. On some CPU architectures, much higher performance can be achieved by using memory load and store instructions that assume aligned addresses. minMemoryMapAlignment will often match a cache line size or the natural alignment of the machine’s widest register, for example, to facilitate this. Some host CPU instructions will fault if passed an unaligned address. Therefore, you can check minMemoryMapAlignment once and decide whether to use optimized functions that assume aligned addressing or fallback functions that can handle unaligned addresses at the expense of performance.
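As a rough sketch of that one-time check, the two helpers below test the guarantee described above and the alignment of the pointer itself. The function names are hypothetical, and the alignment value is assumed to have been read from minMemoryMapAlignment by your application.

```cpp
#include <cstddef>
#include <cstdint>

// True if the pointer returned by a mapping, with the mapping offset
// subtracted, is a multiple of minMemoryMapAlignment. This is the guarantee
// that the text above describes.
bool mappingIsAligned(const void* mappedPointer,
                      std::uint64_t offset,
                      std::size_t minMemoryMapAlignment)
{
    const std::uintptr_t address =
        reinterpret_cast<std::uintptr_t>(mappedPointer);
    const std::uintptr_t base =
        address - static_cast<std::uintptr_t>(offset);
    return (base % minMemoryMapAlignment) == 0;
}

// True if the pointer itself is suitable for loads and stores that assume
// the given alignment. Use this to pick between an optimized copy routine
// and a fallback that tolerates unaligned addresses.
bool pointerIsAligned(const void* pointer, std::size_t alignment)
{
    return (reinterpret_cast<std::uintptr_t>(pointer) % alignment) == 0;
}
```

Note that the guarantee applies to the pointer minus the offset. If you map at an offset that is not itself a multiple of the alignment, the pointer you receive may not be aligned, which is what pointerIsAligned() lets you detect.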

When you’re done with the pointer to the mapped memory range, it can be unmapped by calling vkUnmapMemory(), the prototype of which is

void vkUnmapMemory (

VkDevice device,

VkDeviceMemory memory);

The device that owns the memory object is passed in device, and the memory object to be unmapped is passed in memory. As with vkMapMemory(), access to the memory object must be externally synchronized.

It’s not possible to map the same memory object more than once at the same time. That is, you can’t call vkMapMemory() on the same memory object with different memory ranges, whether they overlap or not, without unmapping the memory object in between. The range isn’t needed when unmapping the object because Vulkan knows the range that was mapped.

As soon as the memory object is unmapped, any pointer received from a call to vkMapMemory() is invalid and should not be used. Also, if you map the same range of the same memory object over and over, you shouldn’t assume that the pointer you get back will be the same.

When device memory is mapped into host address space, there are effectively two clients of that memory, which may both perform writes into it. There is likely to be a cache hierarchy on both the host and the device sides of the mapping, and those caches may or may not be coherent. In order to ensure that both the host and the device see a coherent view of data written by the other client, it is necessary to force Vulkan to flush caches that might contain data written by the host but not yet made visible to the device or to invalidate a host cache that might hold stale data that has been overwritten by the device.

Each memory type advertised by the device has a number of properties, one of which might be VK_MEMORY_PROPERTY_HOST_COHERENT_BIT. If this is the case, and a mapping is made from a region with this property set, then Vulkan will take care of coherency between caches. In some cases, the caches are automatically coherent because they are either shared between host and device or have some form of coherency protocol to keep them in sync. In other cases, a Vulkan driver might be able to infer when caches need to be flushed or invalidated and then perform these operations behind the scenes.

If VK_MEMORY_PROPERTY_HOST_COHERENT_BIT is not set in the memory properties of a mapped memory region, then it is your responsibility to explicitly flush or invalidate caches that might be affected by the mapping. To flush host caches that might contain pending writes, call vkFlushMappedMemoryRanges(), the prototype of which is

VkResult vkFlushMappedMemoryRanges (

VkDevice device,

uint32_t memoryRangeCount,

const VkMappedMemoryRange* pMemoryRanges);

The device that owns the mapped memory objects is specified in device. The number of ranges to flush is specified in memoryRangeCount, and the details of each range are passed in an instance of the VkMappedMemoryRange structure. A pointer to an array of memoryRangeCount of these structures is passed through the pMemoryRanges parameter. The definition of VkMappedMemoryRange is

typedef struct VkMappedMemoryRange {

VkStructureType sType;

const void* pNext;

VkDeviceMemory memory;

VkDeviceSize offset;

VkDeviceSize size;

} VkMappedMemoryRange;

The sType field of VkMappedMemoryRange should be set to VK_STRUCTURE_TYPE_MAPPED_MEMORY_RANGE, and pNext should be set to nullptr. Each memory range refers to a mapped memory object specified in the memory field and a mapped range within that object, specified by offset and size. You don’t have to flush the entire mapped region of the memory object, so offset and size don’t need to match the parameters used in vkMapMemory(). Also, if the memory object is not mapped, or if offset and size specify a region of the object that isn’t mapped, then the flush command has no effect. To just flush any existing mapping on a memory object, set offset to zero and size to VK_WHOLE_SIZE.

A flush is necessary if the host has written to a mapped memory region and needs the device to see the effect of those writes. However, if the device writes to a mapped memory region and you need the host to see the effect of the device’s writes, you need to invalidate any caches on the host that might now hold stale data. To do this, call vkInvalidateMappedMemoryRanges(), the prototype of which is

VkResult vkInvalidateMappedMemoryRanges (

VkDevice device,

uint32_t memoryRangeCount,

const VkMappedMemoryRange* pMemoryRanges);

As with vkFlushMappedMemoryRanges(), device is the device that owns the memory objects whose mapped regions are to be invalidated. The number of regions is specified in memoryRangeCount, and a pointer to an array of memoryRangeCount VkMappedMemoryRange structures is passed in pMemoryRanges. The fields of the VkMappedMemoryRange structures are interpreted exactly as they are in vkFlushMappedMemoryRanges(), except that the operation performed is an invalidation rather than a flush.

vkFlushMappedMemoryRanges() and vkInvalidateMappedMemoryRanges() affect only caches and coherency of access by the host and have no effect on the device. Regardless of whether a memory mapping is coherent or not, access by the device to memory that has been mapped must still be synchronized using barriers, which will be discussed later in this chapter.
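The choice between flushing, invalidating, and doing nothing can be summarized in a few lines. The sketch below is illustrative only: the enumerations and requiredCacheOp() are hypothetical names, and the two constants are stand-ins that carry the same values as the corresponding VK_MEMORY_PROPERTY_* flags so that the code builds without the Vulkan headers.

```cpp
#include <cstdint>

// Stand-ins for VkMemoryPropertyFlags and two of its bits (hypothetical
// names, same numeric values as the Vulkan flags).
using MemoryPropertyFlags = std::uint32_t;
constexpr MemoryPropertyFlags kHostVisibleBit  = 0x2;  // ..._HOST_VISIBLE_BIT
constexpr MemoryPropertyFlags kHostCoherentBit = 0x4;  // ..._HOST_COHERENT_BIT

// What the host has just done, or is about to do, with the mapping.
enum class HostAccess
{
    WroteData,            // Host wrote; the device needs to see it.
    WillReadDeviceWrites  // Device wrote; the host needs to see it.
};

enum class CacheOp
{
    None,       // Coherent memory: nothing to do on the host side.
    Flush,      // Call vkFlushMappedMemoryRanges().
    Invalidate  // Call vkInvalidateMappedMemoryRanges().
};

// Decides which host cache operation a mapping of this memory type needs.
CacheOp requiredCacheOp(MemoryPropertyFlags typeFlags, HostAccess access)
{
    if (typeFlags & kHostCoherentBit)
    {
        return CacheOp::None;
    }
    return (access == HostAccess::WroteData) ? CacheOp::Flush
                                             : CacheOp::Invalidate;
}
```

Whatever this returns, device-side synchronization is a separate matter and is still required.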

Binding Memory to Resources

Before a resource such as a buffer or image can be used by Vulkan to store data, memory must be bound to it. Before memory is bound to a resource, you should determine what type of memory and how much of it the resource requires. There is a different function for buffers and for images. They are vkGetBufferMemoryRequirements() and vkGetImageMemoryRequirements(), and their prototypes are

void vkGetBufferMemoryRequirements (

VkDevice device,

VkBuffer buffer,

VkMemoryRequirements* pMemoryRequirements);

and

void vkGetImageMemoryRequirements (

VkDevice device,

VkImage image,

VkMemoryRequirements* pMemoryRequirements);

The only difference between these two functions is that vkGetBufferMemoryRequirements() takes a handle to a buffer object and vkGetImageMemoryRequirements() takes a handle to an image object. Both functions return the memory requirements for the resource in an instance of the VkMemoryRequirements structure, the address of which is passed in the pMemoryRequirements parameter. The definition of VkMemoryRequirements is

typedef struct VkMemoryRequirements {

VkDeviceSize size;

VkDeviceSize alignment;

uint32_t memoryTypeBits;

} VkMemoryRequirements;

The amount of memory needed by the resource is placed in the size field, and the alignment requirements of the object are placed in the alignment field. When you bind memory to the object (which we will get to in a moment), you need to ensure that the offset from the start of the memory object meets the alignment requirements of the resource and that there is sufficient space in the memory object to store the object.
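Those two conditions, an aligned offset and enough room, are easy to check before binding. The following is a minimal sketch that uses a stand-in structure holding only the size and alignment fields; ResourceRequirements and canBindAt() are hypothetical names, and the allocation size is assumed to be tracked by your application.

```cpp
#include <cstdint>

using DeviceSize = std::uint64_t;  // Stand-in for VkDeviceSize.

// Stand-in carrying the size and alignment fields of VkMemoryRequirements.
struct ResourceRequirements
{
    DeviceSize size;
    DeviceSize alignment;
};

// True if a resource with these requirements can be bound at memoryOffset
// inside a memory object of allocationSize bytes.
bool canBindAt(const ResourceRequirements& requirements,
               DeviceSize allocationSize,
               DeviceSize memoryOffset)
{
    // The offset must satisfy the resource's alignment requirement.
    if (requirements.alignment == 0 ||
        (memoryOffset % requirements.alignment) != 0)
    {
        return false;
    }

    // The resource must fit between the offset and the end of the
    // allocation. Written as a subtraction to avoid overflow.
    if (memoryOffset > allocationSize)
    {
        return false;
    }
    return requirements.size <= (allocationSize - memoryOffset);
}
```

For example, a resource that needs 1,000 bytes aligned to 256 fits at offset 3,072 in a 4,096-byte allocation but not at offset 3,328, where only 768 bytes remain.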

The memoryTypeBits field is populated with all the memory types that the resource can be bound to. One bit is turned on, starting from the least significant bit, for each type that can be used with the resource. If you have no particular requirements for the memory, simply find the lowest-set bit and use its index to choose the memory type, which is then used as the memoryTypeIndex field in the allocation info passed to a call to vkAllocateMemory(). If you do have particular requirements or preferences for the memory—if you want to be able to map the memory or prefer that it be host local, for example—look for a type that includes those bits and is supported by the resource.
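For the simple case with no particular requirements, finding the lowest set bit might look like the sketch below. The function name and the kNoMemoryType sentinel are assumptions made for this illustration.

```cpp
#include <cstdint>

// Returned when no bit is set in the mask (hypothetical sentinel).
constexpr std::uint32_t kNoMemoryType = ~0u;

// Returns the index of the lowest set bit in memoryTypeBits, which is the
// first memory type that the resource can be bound to.
std::uint32_t firstUsableMemoryType(std::uint32_t memoryTypeBits)
{
    for (std::uint32_t index = 0; index < 32; ++index)
    {
        if (memoryTypeBits & (1u << index))
        {
            return index;
        }
    }
    return kNoMemoryType;
}
```

The returned index is what you would place in memoryTypeIndex. A mask of 0xA, for instance, yields index 1.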

Listing 2.5 shows an example of an appropriate algorithm for choosing the memory type for an image resource.

Listing 2.5: Choosing a Memory Type for an Image

uint32_t application::chooseHeapFromFlags(

const VkMemoryRequirements& memoryRequirements,

VkMemoryPropertyFlags requiredFlags,

VkMemoryPropertyFlags preferredFlags)

{

VkPhysicalDeviceMemoryProperties deviceMemoryProperties;

vkGetPhysicalDeviceMemoryProperties(m_physicalDevices[0],

&deviceMemoryProperties);

uint32_t selectedType = ~0u;

uint32_t memoryType;

for (memoryType = 0; memoryType < 32; ++memoryType)

{

if (memoryRequirements.memoryTypeBits & (1u << memoryType))

{

const VkMemoryType& type =

deviceMemoryProperties.memoryTypes[memoryType];

// If it has all of my preferred properties, grab it.

if ((type.propertyFlags & preferredFlags) == preferredFlags)

{

selectedType = memoryType;

break;

}

}

}

// No type had all of the preferred properties, so fall back to the required ones.

if (selectedType == ~0u)

{

for (memoryType = 0; memoryType < 32; ++memoryType)

{

if (memoryRequirements.memoryTypeBits & (1u << memoryType))

{

const VkMemoryType& type =

deviceMemoryProperties.memoryTypes[memoryType];

// If it has all my required properties, it'll do.

if ((type.propertyFlags & requiredFlags) == requiredFlags)

{

selectedType = memoryType;

break;

}

}

}

}

return selectedType;

}

The algorithm shown in Listing 2.5 chooses a memory type given the memory requirements for an object, a set of hard requirements, and a set of preferred requirements. First, it iterates through the memory types supported by the resource and checks each for the set of preferred flags. If there is a memory type that contains all of the flags that the caller prefers, then it selects that memory type and stops searching. If none of the memory types contains all of the preferred flags, then it iterates again, this time selecting the first memory type that meets all of the hard requirements. If no memory type qualifies, it returns ~0u.

Once you have chosen the memory type for the resource, you can bind a piece of a memory object to that resource by calling either vkBindBufferMemory() for buffer objects or vkBindImageMemory() for image objects. Their prototypes are

VkResult vkBindBufferMemory (

VkDevice device,

VkBuffer buffer,

VkDeviceMemory memory,

VkDeviceSize memoryOffset);

and

VkResult vkBindImageMemory (

VkDevice device,

VkImage image,

VkDeviceMemory memory,

VkDeviceSize memoryOffset);

Again, these two functions are identical in declaration except that vkBindBufferMemory() takes a VkBuffer handle and vkBindImageMemory() takes a VkImage handle. In both cases, device must own both the resource and the memory object, whose handle is passed in memory. This is the handle of a memory allocation created through a call to vkAllocateMemory().

Access to the buffer passed to vkBindBufferMemory() and to the image passed to vkBindImageMemory() must be externally synchronized. Once memory has been bound to a resource object, the memory binding cannot be changed again. If two threads attempt to execute vkBindBufferMemory() or vkBindImageMemory() on the same resource concurrently, then which thread’s binding takes effect and which one is invalid is subject to a race condition. Even resolving the race condition would not produce a legal command sequence, so this should be avoided.

The memoryOffset parameter specifies where in the memory object the resource will live. The amount of memory consumed by the object is determined from the size of the object’s requirements, as discovered with a call to vkGetBufferMemoryRequirements() or vkGetImageMemoryRequirements().

It is very strongly recommended that rather than simply creating a new memory allocation for each resource, you create a pool of a small number of relatively large memory allocations and place multiple resources in each one at different offsets. It is possible for two resources to overlap in memory. In general, aliasing data like this is not well defined, but if you can be sure that two resources are not used at the same time, this can be a good way to reduce the memory requirements of your application.

An example of a device memory allocator is included with the book’s source code.
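Independent of that allocator, the idea of placing several resources in one large allocation can be sketched with a simple linear (bump) suballocator. This is not the allocator from the book’s source code. LinearSuballocator is a hypothetical class that only hands out offsets; it never frees individual ranges, and the offsets it returns are what you would pass as memoryOffset when binding.

```cpp
#include <cstdint>

using DeviceSize = std::uint64_t;  // Stand-in for VkDeviceSize.

// Hands out non-overlapping, aligned offsets within one large allocation.
class LinearSuballocator
{
public:
    explicit LinearSuballocator(DeviceSize capacity)
        : m_capacity(capacity), m_next(0)
    {
    }

    // Reserves size bytes at an offset that is a multiple of alignment.
    // Returns false if alignment is zero or the block has too little room.
    bool allocate(DeviceSize size, DeviceSize alignment, DeviceSize* outOffset)
    {
        if (alignment == 0)
        {
            return false;
        }

        // Round the next free offset up to the requested alignment.
        const DeviceSize remainder = m_next % alignment;
        const DeviceSize aligned =
            (remainder != 0) ? m_next + (alignment - remainder) : m_next;

        if (aligned > m_capacity || size > (m_capacity - aligned))
        {
            return false;
        }

        *outOffset = aligned;
        m_next = aligned + size;
        return true;
    }

    // Makes the whole block available again. Only safe once nothing bound
    // to the block is still in use.
    void reset() { m_next = 0; }

private:
    DeviceSize m_capacity;
    DeviceSize m_next;
};
```

A real allocator would also need to track memory types, free individual ranges, and respect any additional placement rules of the implementation; this sketch leaves all of that out.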

Sparse Resources

Sparse resources are a special type of resource that can be partially backed by memory and can have their memory backing changed after they have been created and even used in the application. A sparse resource must still be bound to memory before it can be used, although that binding can be changed. Additionally, an image or buffer can support sparse residency, which allows parts of the image to not be backed by memory at all.

To create a sparse image, set the VK_IMAGE_CREATE_SPARSE_BINDING_BIT in the flags field of the VkImageCreateInfo structure used to create the image. Likewise, to create a sparse buffer, set the VK_BUFFER_CREATE_SPARSE_BINDING_BIT in the flags field of the VkBufferCreateInfo structure used to create the buffer.

If an image was created with the VK_IMAGE_CREATE_SPARSE_BINDING_BIT bit set, your application should call vkGetImageSparseMemoryRequirements() to determine the additional requirements that the image needs. The prototype of vkGetImageSparseMemoryRequirements() is

void vkGetImageSparseMemoryRequirements (

VkDevice device,

VkImage image,

uint32_t* pSparseMemoryRequirementCount,

VkSparseImageMemoryRequirements* pSparseMemoryRequirements);

The device that owns the image should be passed in device, and the image whose requirements to query should be passed in image. The pSparseMemoryRequirements parameter points to an array of VkSparseImageMemoryRequirements structures that will be filled with the requirements of the image.

If pSparseMemoryRequirements is nullptr, then the initial value of the variable pointed to by pSparseMemoryRequirementCount is ignored and is overwritten with the number of requirements of the image. If pSparseMemoryRequirements is not nullptr, then the initial value of the variable pointed to by pSparseMemoryRequirementCount is the number of elements in the pSparseMemoryRequirements array and is overwritten with the number of requirements actually written to the array.
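This count-then-fill convention is usually wrapped in a small helper. The template below is one possible sketch; enumerate() is a hypothetical name, and the query argument is assumed to be any callable that takes a pointer to the count and a pointer to the output array, in that order.

```cpp
#include <cstdint>
#include <vector>

// Runs a count-then-fill query and returns the results in a vector.
// Query must be callable as query(std::uint32_t* pCount, T* pItems).
template <typename T, typename Query>
std::vector<T> enumerate(Query query)
{
    // First call: a null array pointer asks only for the element count.
    std::uint32_t count = 0;
    query(&count, static_cast<T*>(nullptr));

    std::vector<T> items(count);
    if (count != 0)
    {
        // Second call: count holds the array capacity on input and the
        // number of elements actually written on output.
        query(&count, items.data());
        items.resize(count);
    }
    return items;
}
```

With the Vulkan headers available, the query could be a lambda that captures the device and image and forwards its two arguments to vkGetImageSparseMemoryRequirements(), with T being VkSparseImageMemoryRequirements.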

The definition of VkSparseImageMemoryRequirements is

typedef struct VkSparseImageMemoryRequirements {

VkSparseImageFormatProperties formatProperties;

uint32_t imageMipTailFirstLod;

VkDeviceSize imageMipTailSize;

VkDeviceSize imageMipTailOffset;

VkDeviceSize imageMipTailStride;

} VkSparseImageMemoryRequirements;

The first field of VkSparseImageMemoryRequirements is an instance of the VkSparseImageFormatProperties structure that provides general information about how the image is laid out in memory with respect to binding.

typedef struct VkSparseImageFormatProperties {

VkImageAspectFlags aspectMask;

VkExtent3D imageGranularity;

VkSparseImageFormatFlags flags;

} VkSparseImageFormatProperties;

The aspectMask field of VkSparseImageFormatProperties is a bitfield indicating the image aspects to which the properties apply. This will generally be all of the aspects in the image. For color images, it will be VK_IMAGE_ASPECT_COLOR_BIT, and for depth, stencil, and depth-stencil images, it will be either or both of VK_IMAGE_ASPECT_DEPTH_BIT and VK_IMAGE_ASPECT_STENCIL_BIT.

When memory is bound to a sparse image, it is bound in blocks rather than to the whole resource at once. Memory has to be bound in implementation-specific sized blocks, and the imageGranularity field of VkSparseImageFormatProperties contains this size.

Finally, the flags field contains some additional flags describing further behavior of the image. The flags that may be included are

VK_SPARSE_IMAGE_FORMAT_SINGLE_MIPTAIL_BIT: If this bit is set and the image is an array, then a single mip tail binding is shared by all array layers. If the bit is not set, then each array layer has its own mip tail that can be bound to memory independently of the others.

VK_SPARSE_IMAGE_FORMAT_ALIGNED_MIP_SIZE_BIT: If this bit is set, it is an indicator that the mip tail begins with the first level whose dimensions are not an integer multiple of the image’s binding granularity. If the bit is not set, then the tail begins at the first level that is smaller than the image’s binding granularity.

VK_SPARSE_IMAGE_FORMAT_NONSTANDARD_BLOCK_SIZE_BIT: If this bit is set, then the image’s format does support sparse binding, but not with the standard block sizes. The values reported in imageGranularity are still correct for the image but don’t necessarily match the standard block for the format.

Unless VK_SPARSE_IMAGE_FORMAT_NONSTANDARD_BLOCK_SIZE_BIT is set in flags, the values in imageGranularity match a set of standard block sizes for the format. The standard block shapes, in texels, for each texel size are shown in Table 2.1.

Table 2.1: Sparse Texture Block Sizes

| Texel Size | 2D Block Shape | 3D Block Shape |
|---|---|---|
| 8-bit | 256 × 256 | 64 × 32 × 32 |
| 16-bit | 256 × 128 | 32 × 32 × 32 |
| 32-bit | 128 × 128 | 32 × 32 × 16 |
| 64-bit | 128 × 64 | 32 × 16 × 16 |
| 128-bit | 64 × 64 | 16 × 16 × 16 |
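Because memory is bound in whole blocks, the number of blocks needed to cover a mip level is the level’s extent divided by the granularity, rounded up in each dimension. The sketch below shows that arithmetic; Extent3D is a stand-in for VkExtent3D, and the function names are hypothetical.

```cpp
#include <cstdint>

// Stand-in for VkExtent3D so the sketch builds without the Vulkan headers.
struct Extent3D
{
    std::uint32_t width;
    std::uint32_t height;
    std::uint32_t depth;
};

// Integer division that rounds up, written to avoid overflow.
constexpr std::uint32_t divideRoundUp(std::uint32_t value,
                                      std::uint32_t divisor)
{
    return (value / divisor) + ((value % divisor) != 0 ? 1u : 0u);
}

// Number of sparse blocks needed to cover one mip level of an image, given
// the block shape reported in imageGranularity (all components nonzero).
std::uint64_t sparseBlockCount(const Extent3D& levelExtent,
                               const Extent3D& granularity)
{
    return static_cast<std::uint64_t>(
               divideRoundUp(levelExtent.width, granularity.width)) *
           divideRoundUp(levelExtent.height, granularity.height) *
           divideRoundUp(levelExtent.depth, granularity.depth);
}
```

A 1,000 × 600 level of a 32-bit 2D image with the standard 128 × 128 block shape needs 8 × 5 = 40 blocks, even though the rightmost and bottom blocks are only partly covered by the image.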

The remaining fields of VkSparseImageMemoryRequirements describe how the format used by the image behaves in the mip tail. The mip tail is the region of the mipmap chain beginning from the first level that cannot be sparsely bound to memory. This is typically the first level that is smaller than the size of the format’s granularity. As memory must be bound to sparse resources in units of the granularity, the mip tail presents an all-or-nothing binding opportunity. Once any level of the mipmap’s tail is bound to memory, all levels within the tail become bound.

The mip tail begins at the level reported in the imageMipTailFirstLod field of VkSparseImageMemoryRequirements. The size of the tail, in bytes, is contained in imageMipTailSize, and it begins at imageMipTailOffset bytes into the image’s memory binding region. If the image does not have a single mip tail binding for all array layers (as indicated by the absence of VK_SPARSE_IMAGE_FORMAT_SINGLE_MIPTAIL_BIT in the flags field of VkSparseImageFormatProperties), then imageMipTailStride is the distance, in bytes, between the start of the memory binding for each array layer’s mip tail.
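The relationship between the tail offset and the stride can be sketched as follows. SparseMipTailInfo and mipTailOffsetForLayer() are hypothetical names; the structure simply copies the relevant fields of VkSparseImageMemoryRequirements, with the single-mip-tail flag reduced to a bool.

```cpp
#include <cstdint>

using DeviceSize = std::uint64_t;  // Stand-in for VkDeviceSize.

// The mip tail fields of VkSparseImageMemoryRequirements, plus whether the
// single-mip-tail flag was reported for the image.
struct SparseMipTailInfo
{
    DeviceSize size;     // imageMipTailSize
    DeviceSize offset;   // imageMipTailOffset
    DeviceSize stride;   // imageMipTailStride
    bool singleMipTail;  // VK_SPARSE_IMAGE_FORMAT_SINGLE_MIPTAIL_BIT set?
};

// Offset, within the image's binding region, at which the mip tail for the
// given array layer begins.
DeviceSize mipTailOffsetForLayer(const SparseMipTailInfo& info,
                                 std::uint32_t arrayLayer)
{
    if (info.singleMipTail)
    {
        // One tail is shared by every layer, so the stride is not used.
        return info.offset;
    }
    return info.offset + static_cast<DeviceSize>(arrayLayer) * info.stride;
}
```

With a tail offset of 1,048,576 bytes and a stride of 2,097,152 bytes, the tail for array layer 2 begins at 5,242,880 bytes.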

The properties of a specific format can also be determined by calling vkGetPhysicalDeviceSparseImageFormatProperties(), which, given a specific format, will return a VkSparseImageFormatProperties describing that format’s sparse image requirements without the need to create an image and query it. The prototype of vkGetPhysicalDeviceSparseImageFormatProperties() is

void vkGetPhysicalDeviceSparseImageFormatProperties (

VkPhysicalDevice physicalDevice,

VkFormat format,

VkImageType type,

VkSampleCountFlagBits samples,

VkImageUsageFlags usage,

VkImageTiling tiling,

uint32_t* pPropertyCount,

VkSparseImageFormatProperties* pProperties);

As you can see, vkGetPhysicalDeviceSparseImageFormatProperties() takes as parameters many of the properties that would be used to construct an image. Sparse image properties are a function of a physical device, a handle to which should be passed in physicalDevice. The format of the image is passed in format, and the type of image (VK_IMAGE_TYPE_1D, VK_IMAGE_TYPE_2D, or VK_IMAGE_TYPE_3D) is passed in type. If multisampling is required, the number of samples (represented as one of the members of the VkSampleCountFlagBits enumeration) is passed in samples.

The intended usage for the image is passed in usage. This should be a bitfield containing the flags specifying how an image with this format will be used. Be aware that sparse images may not be supported at all under certain use cases, so it’s best to set this field conservatively and accurately rather than just turning on every bit and hoping for the best. Finally, the tiling mode to be used for the image is specified in tiling. Again, standard block sizes may be supported only in certain tiling modes. For example, it’s very unlikely that an implementation would support standard (or even reasonable) block sizes when LINEAR tiling is used.

Just as with vkGetPhysicalDeviceImageFormatProperties(), vkGetPhysicalDeviceSparseImageFormatProperties() can return an array of properties. The pPropertyCount parameter points to a variable that will be overwritten with the number of properties reported for the format. If pProperties is nullptr, then the initial value of the variable pointed to by pPropertyCount is ignored and the total number of properties is written into it. If pProperties is not nullptr, then it should be a pointer to an array of VkSparseImageFormatProperties structures that will receive the properties of the image. In this case, the initial value of the variable pointed to by pPropertyCount is the number of elements in the array, and it is overwritten with the number of items populated in the array.

Because the memory binding used to back sparse images can be changed, even after the image is in use, the update to the binding properties of the image is pipelined along with that work. Unlike vkBindImageMemory() and vkBindBufferMemory(), which are operations likely carried out by the host, memory is bound to a sparse resource using an operation on the queue, allowing the device to execute them. The command to bind memory to a sparse resource is vkQueueBindSparse(), the prototype of which is

    VkResult vkQueueBindSparse (
        VkQueue                     queue,
        uint32_t                    bindInfoCount,
        const VkBindSparseInfo*     pBindInfo,
        VkFence                     fence);

The queue that will execute the binding operation is specified in queue. Several binding operations can be performed by a single call to vkQueueBindSparse(). The number of operations to perform is passed in bindInfoCount, and pBindInfo is a pointer to an array of bindInfoCount VkBindSparseInfo structures, each describing one of the bindings. The definition of VkBindSparseInfo is

    typedef struct VkBindSparseInfo {
        VkStructureType                             sType;
        const void*                                 pNext;
        uint32_t                                    waitSemaphoreCount;
        const VkSemaphore*                          pWaitSemaphores;
        uint32_t                                    bufferBindCount;
        const VkSparseBufferMemoryBindInfo*         pBufferBinds;
        uint32_t                                    imageOpaqueBindCount;
        const VkSparseImageOpaqueMemoryBindInfo*    pImageOpaqueBinds;
        uint32_t                                    imageBindCount;
        const VkSparseImageMemoryBindInfo*          pImageBinds;
        uint32_t                                    signalSemaphoreCount;
        const VkSemaphore*                          pSignalSemaphores;
    } VkBindSparseInfo;

Binding memory to sparse resources is pipelined with other work performed by the device. As you read in Chapter 1, “Overview of Vulkan,” work is performed by submitting it to queues. The binding is then performed along with the execution of commands submitted to the same queue. Because vkQueueBindSparse() behaves a lot like a command submission, VkBindSparseInfo contains many fields related to synchronization.

The sType field of VkBindSparseInfo should be set to VK_STRUCTURE_TYPE_BIND_SPARSE_INFO, and pNext should be set to nullptr. As with VkSubmitInfo, each sparse binding operation can optionally wait for one or more semaphores to be signaled before performing the operation and can signal one or more semaphores when it is done. This allows updates to the sparse resource’s bindings to be synchronized with other work performed by the device.

The number of semaphores to wait on is specified in waitSemaphoreCount, and the number of semaphores to signal is specified in signalSemaphoreCount. The pWaitSemaphores field is a pointer to an array of waitSemaphoreCount semaphore handles to wait on, and pSignalSemaphores is a pointer to an array of signalSemaphoreCount semaphores to signal. Semaphores are covered in some detail in Chapter 11, “Synchronization.”

Each binding operation can include updates to buffers and images. The number of buffer binding updates is specified in bufferBindCount, and pBufferBinds is a pointer to an array of bufferBindCount VkSparseBufferMemoryBindInfo structures, each describing one of the buffer memory binding operations. The definition of VkSparseBufferMemoryBindInfo is

    typedef struct VkSparseBufferMemoryBindInfo {
        VkBuffer                     buffer;
        uint32_t                     bindCount;
        const VkSparseMemoryBind*    pBinds;
    } VkSparseBufferMemoryBindInfo;

Each instance of VkSparseBufferMemoryBindInfo contains the handle of the buffer to which memory will be bound. A number of regions of memory can be bound to the buffer at different offsets. The number of memory regions is specified in bindCount, and each binding is described by an instance of the VkSparseMemoryBind structure. pBinds is a pointer to an array of bindCount VkSparseMemoryBind structures. The definition of VkSparseMemoryBind is

    typedef struct VkSparseMemoryBind {
        VkDeviceSize               resourceOffset;
        VkDeviceSize               size;
        VkDeviceMemory             memory;
        VkDeviceSize               memoryOffset;
        VkSparseMemoryBindFlags    flags;
    } VkSparseMemoryBind;

The size of the block of memory to bind to the resource is contained in size. The offsets of the block in the resource and in the memory object are contained in resourceOffset and memoryOffset, respectively, and are both expressed in units of bytes. The memory object that is the source of storage for the binding is specified in memory. When the binding is executed, the block of memory, size bytes long and starting at memoryOffset bytes into the memory object specified in memory, will be bound into the buffer specified in the buffer field of the VkSparseBufferMemoryBindInfo structure.
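To make the offset arithmetic concrete, the following sketch resolves a byte offset within a buffer to the memory object and offset that back it. So that it builds without the Vulkan headers, it uses a stand-in structure, SparseBindSketch, that mirrors the fields of VkSparseMemoryBind (minus flags). That structure, the BackingLocation type, and the resolve function are all hypothetical names introduced for illustration, and the memory field is a plain integer standing in for a VkDeviceMemory handle.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Stand-in for VkSparseMemoryBind (an assumption for this sketch; real code
// would include <vulkan/vulkan.h> and use the real structure).
struct SparseBindSketch {
    uint64_t resourceOffset;  // byte offset into the buffer
    uint64_t size;            // number of bytes bound
    uint64_t memory;          // stands in for a VkDeviceMemory handle
    uint64_t memoryOffset;    // byte offset into the memory object
};

struct BackingLocation {
    uint64_t memory;
    uint64_t offset;
};

// Finds the memory object and offset backing byte `resourceByte` of the
// buffer. Returns false if no bind in the list covers that byte.
bool resolve(const std::vector<SparseBindSketch>& binds,
             uint64_t resourceByte,
             BackingLocation* out)
{
    for (const SparseBindSketch& b : binds) {
        if (resourceByte >= b.resourceOffset &&
            resourceByte - b.resourceOffset < b.size) {
            out->memory = b.memory;
            out->offset = b.memoryOffset + (resourceByte - b.resourceOffset);
            return true;
        }
    }
    return false;
}
```

A byte at resourceOffset + k therefore lives at memoryOffset + k in the bound memory object, and any range of the buffer not covered by a bind has no backing store.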

The flags field contains additional flags that can be used to further control the binding. No flags are used for buffer resources. However, image resources use the same VkSparseMemoryBind structure to bind memory directly to images. This is known as an opaque image memory binding, and the opaque image memory bindings to be performed are also passed through the VkBindSparseInfo structure. The pImageOpaqueBinds member of VkBindSparseInfo points to an array of imageOpaqueBindCount VkSparseImageOpaqueMemoryBindInfo structures defining the opaque memory bindings. The definition of VkSparseImageOpaqueMemoryBindInfo is

    typedef struct VkSparseImageOpaqueMemoryBindInfo {
        VkImage                      image;
        uint32_t                     bindCount;
        const VkSparseMemoryBind*    pBinds;
    } VkSparseImageOpaqueMemoryBindInfo;

Just as with VkSparseBufferMemoryBindInfo, VkSparseImageOpaqueMemoryBindInfo contains a handle to the image to which to bind memory in image and a pointer to an array of VkSparseMemoryBind structures in pBinds, which is bindCount elements long. This is the same structure used for buffer memory bindings. However, when this structure is used for images, you can set the flags field of each VkSparseMemoryBind structure to include the VK_SPARSE_MEMORY_BIND_METADATA_BIT flag in order to explicitly bind memory to the metadata associated with the image.

When memory is bound opaquely to a sparse image, the blocks of memory have no defined correlation with texels in the image. Rather, the backing store of the image is treated as a large, opaque region of memory, and the application is given no information about how texels are laid out in it. However, so long as memory is bound to the entire image when it is used, results will still be well-defined and consistent. This allows sparse images to be backed by multiple, smaller memory objects, potentially easing pool allocation strategies, for example.
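As a rough illustration of backing one opaque range with several smaller memory objects, the sketch below splits a range of totalSize bytes into binds of at most chunkSize bytes, one per allocation. The structure OpaqueBindSketch and the function splitAcrossAllocations are hypothetical names. The sketch also assumes that each chunk comes from its own allocation, bound at offset zero, and that chunkSize is a multiple of the alignment the implementation requires for the image.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Stand-in for one VkSparseMemoryBind entry (an assumption for this sketch).
// allocationIndex identifies which of the application's memory objects to use.
struct OpaqueBindSketch {
    uint64_t resourceOffset;   // byte offset into the image's opaque backing store
    uint64_t size;             // number of bytes bound by this entry
    uint32_t allocationIndex;  // index into an application-owned list of allocations
    uint64_t memoryOffset;     // byte offset into that allocation
};

// Splits [0, totalSize) into consecutive binds of at most chunkSize bytes,
// each backed by a separate allocation starting at offset zero.
std::vector<OpaqueBindSketch> splitAcrossAllocations(uint64_t totalSize,
                                                     uint64_t chunkSize)
{
    assert(chunkSize > 0);
    std::vector<OpaqueBindSketch> binds;
    uint32_t allocation = 0;
    for (uint64_t offset = 0; offset < totalSize; offset += chunkSize) {
        const uint64_t size = std::min(chunkSize, totalSize - offset);
        binds.push_back({offset, size, allocation, 0});
        ++allocation;
    }
    return binds;
}
```

In a real application, each entry would be translated into a VkSparseMemoryBind whose memory field holds the handle of the corresponding allocation, and the resulting array would be passed through pBinds.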

To bind memory to an explicit region of an image, you can perform a nonopaque image memory binding by passing one or more VkSparseImageMemoryBindInfo structures through the VkBindSparseInfo structures passed to vkQueueBindSparse(). The definition of VkSparseImageMemoryBindInfo is

    typedef struct VkSparseImageMemoryBindInfo {
        VkImage                           image;
        uint32_t                          bindCount;
        const VkSparseImageMemoryBind*    pBinds;
    } VkSparseImageMemoryBindInfo;

Again, the VkSparseImageMemoryBindInfo structure contains a handle to the image to which to bind memory in image, a count of the number of bindings to perform in bindCount, and a pointer to an array of structures describing the bindings in pBinds. This time, however, pBinds points to an array of bindCount VkSparseImageMemoryBind structures, the definition of which is

    typedef struct VkSparseImageMemoryBind {
        VkImageSubresource         subresource;
        VkOffset3D                 offset;
        VkExtent3D                 extent;
        VkDeviceMemory             memory;
        VkDeviceSize               memoryOffset;
        VkSparseMemoryBindFlags    flags;
    } VkSparseImageMemoryBind;

The VkSparseImageMemoryBind structure contains much more information about how the memory is to be bound to the image resource. For each binding, the image subresource to which the memory is to be bound is specified in subresource, which is an instance of the VkImageSubresource structure, the definition of which is

    typedef struct VkImageSubresource {
        VkImageAspectFlags    aspectMask;
        uint32_t              mipLevel;
        uint32_t              arrayLayer;
    } VkImageSubresource;

The VkImageSubresource structure allows you to specify the aspect of the image (VK_IMAGE_ASPECT_COLOR_BIT, VK_IMAGE_ASPECT_DEPTH_BIT, or VK_IMAGE_ASPECT_STENCIL_BIT, for example) in aspectMask, the mipmap level to which you want to bind memory in mipLevel, and the array layer where the memory should be bound in arrayLayer. For nonarray images, arrayLayer should be set to zero.

Within the subresource, the offset and extent fields of the VkSparseImageMemoryBind structure define the offset and size of the region of texels to which memory is bound. This region must be aligned to the tile-size boundaries, which are either the standard sizes as shown in Table 2.1 or the per-format block size that can be retrieved from vkGetPhysicalDeviceSparseImageFormatProperties().
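The alignment requirement can be checked on the host before the binding is submitted. The sketch below is one way to do so, using the stand-in types Offset3 and Extent3 in place of VkOffset3D and VkExtent3D so that it builds without the Vulkan headers. The function names are hypothetical. The block size is assumed to come from the imageGranularity member of VkSparseImageFormatProperties. The sketch also assumes, following my reading of the Vulkan specification's rules for this structure, that an extent that is not a whole number of blocks is acceptable when the region runs exactly to the edge of the subresource; verify this against the specification before relying on it.

```cpp
#include <cassert>
#include <cstdint>

// Stand-ins for VkOffset3D and VkExtent3D (assumptions for this sketch).
struct Offset3 { int32_t x, y, z; };
struct Extent3 { uint32_t width, height, depth; };

// Checks one axis: the offset must sit on a block boundary, and the extent
// must be a whole number of blocks or reach the edge of the subresource.
bool axisAligned(int32_t offset, uint32_t extent,
                 uint32_t block, uint32_t subresourceSize)
{
    assert(block != 0);
    if (offset < 0 || extent == 0) {
        return false;
    }
    const uint64_t start = static_cast<uint64_t>(offset);
    if (start % block != 0) {
        return false;
    }
    const bool wholeBlocks = (extent % block) == 0;
    const bool reachesEdge = (start + extent) == subresourceSize;
    return wholeBlocks || reachesEdge;
}

// Applies the per-axis check to all three dimensions of the region.
bool regionAligned(Offset3 offset, Extent3 extent,
                   Extent3 block, Extent3 subresource)
{
    return axisAligned(offset.x, extent.width,  block.width,  subresource.width)
        && axisAligned(offset.y, extent.height, block.height, subresource.height)
        && axisAligned(offset.z, extent.depth,  block.depth,  subresource.depth);
}
```

For example, with a 128 × 128 × 1 block and a 200 × 200 × 1 mipmap level, a region at offset (128, 0, 0) with extent (72, 128, 1) passes the check because it ends at the right-hand edge of the level, whereas a region starting at x = 64 does not.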

Again, the memory object from which to bind memory is specified in memory, and the offset within the memory where the backing store resides is specified in memoryOffset. The same flags are available in the flags field of VkSparseImageMemoryBind.