Resources

Vulkan operates on data. Everything else is really secondary to this. Data is stored in resources, and resources are backed by memory. There are two fundamental types of resources in Vulkan: buffers and images. A buffer is a simple, linear chunk of data that can be used for almost anything—data structures, raw arrays, and even image data, should you choose to use them that way. Images, on the other hand, are structured and have type and format information, can be multidimensional, form arrays of their own, and support advanced operations for reading and writing data from and to them.

Both types of resources are constructed in two steps: first the resource itself is created, and then the resource needs to be backed by memory. The reason for this is to allow the application to manage memory itself. Memory management is complex, and it is very difficult for a driver to get it right all the time. What works well for one application might not work well for another. Therefore, it is expected that applications can do a better job of managing memory than drivers can. For example, an application that uses a small number of very large resources and keeps them around for a long time might use one strategy in its memory allocator, while another application that continually creates and destroys small resources might implement another.

Although images are more complex structures, the procedure for creating them is similar to buffers. This section looks at buffer creation first and then moves on to discuss images.

Buffers

Buffers are the simplest type of resource but have a wide variety of uses in Vulkan. They are used to store linear structured or unstructured data, which can have a format or be raw bytes in memory. The various uses for buffer objects will be discussed as we introduce those topics. To create a new buffer object, call vkCreateBuffer(), the prototype of which is

VkResult vkCreateBuffer (

VkDevice device,

const VkBufferCreateInfo* pCreateInfo,

const VkAllocationCallbacks* pAllocator,

VkBuffer* pBuffer);

As with most functions in Vulkan that consume more than a couple of parameters, those parameters are bundled up in a structure and passed to Vulkan via a pointer. Here, the pCreateInfo parameter is a pointer to an instance of the VkBufferCreateInfo structure, the definition of which is

typedef struct VkBufferCreateInfo {

VkStructureType sType;

const void* pNext;

VkBufferCreateFlags flags;

VkDeviceSize size;

VkBufferUsageFlags usage;

VkSharingMode sharingMode;

uint32_t queueFamilyIndexCount;

const uint32_t* pQueueFamilyIndices;

} VkBufferCreateInfo;

The sType for VkBufferCreateInfo should be set to VK_STRUCTURE_TYPE_BUFFER_CREATE_INFO, and the pNext member should be set to nullptr unless you’re using an extension. The flags field of the structure gives Vulkan some information about the properties of the new buffer. In the current version of Vulkan, the only bits defined for use in the flags field are related to sparse buffers, which we will cover later in this chapter. For now, flags can be set to zero.

The size field of VkBufferCreateInfo specifies the size of the buffer, in bytes. The usage field tells Vulkan how you’re going to use the buffer and is a bitfield made up of a combination of members of the VkBufferUsageFlagBits enumeration. On some architectures, the intended usage of the buffer can have an effect on how it’s created. The currently defined bits along with the sections where we’ll discuss them are as follows:

VK_BUFFER_USAGE_TRANSFER_SRC_BIT and VK_BUFFER_USAGE_TRANSFER_DST_BIT mean that the buffer can be the source or destination, respectively, of transfer commands. Transfer operations are operations that copy data from a source to a destination. They are covered in Chapter 4, “Moving Data.”

VK_BUFFER_USAGE_UNIFORM_TEXEL_BUFFER_BIT and VK_BUFFER_USAGE_STORAGE_TEXEL_BUFFER_BIT mean that the buffer can be used to back a uniform or storage texel buffer, respectively. Texel buffers are formatted arrays of texels that can be used as the source or destination (in the case of storage texel buffers) of reads and writes by shaders running on the device. Texel buffers are covered in Chapter 6, “Shaders and Pipelines.”

VK_BUFFER_USAGE_UNIFORM_BUFFER_BIT and VK_BUFFER_USAGE_STORAGE_BUFFER_BIT mean that the buffer can be used to back uniform or storage buffers, respectively. As opposed to texel buffers, regular uniform and storage buffers have no format associated with them and can therefore be used to store arbitrary data and data structures. They are covered in Chapter 6, “Shaders and Pipelines.”

VK_BUFFER_USAGE_INDEX_BUFFER_BIT and VK_BUFFER_USAGE_VERTEX_BUFFER_BIT mean that the buffer can be used to store index or vertex data, respectively, used in drawing commands. You’ll learn more about drawing commands, including indexed drawing commands, in Chapter 8, “Drawing.”

VK_BUFFER_USAGE_INDIRECT_BUFFER_BIT means that the buffer can be used to store parameters used in indirect dispatch and drawing commands, which are commands that take their parameters directly from buffers rather than from your program. These are covered in Chapter 6, “Shaders and Pipelines,” and Chapter 8, “Drawing.”

The sharingMode field of VkBufferCreateInfo indicates how the buffer will be used on the multiple command queues supported by the device. Because Vulkan can execute many operations in parallel, some implementations need to know whether the buffer will essentially be used by a single command at a time or potentially by many. Setting sharingMode to VK_SHARING_MODE_EXCLUSIVE says that the buffer will only be used on a single queue, whereas setting sharingMode to VK_SHARING_MODE_CONCURRENT indicates that you plan to use the buffer on multiple queues at the same time. Using VK_SHARING_MODE_CONCURRENT might result in lower performance on some systems, so unless you need this, set sharingMode to VK_SHARING_MODE_EXCLUSIVE.

If you do set sharingMode to VK_SHARING_MODE_CONCURRENT, you need to tell Vulkan which queue families you’re going to use the buffer on. This is done using the pQueueFamilyIndices member of VkBufferCreateInfo, which is a pointer to an array of the indices of the queue families that the resource will be used on. queueFamilyIndexCount contains the length of this array, that is, the number of queue families that the buffer will be used with. When sharingMode is set to VK_SHARING_MODE_EXCLUSIVE, queueFamilyIndexCount and pQueueFamilyIndices are both ignored.
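The choice between the two sharing modes can be sketched as a small helper. This is only an illustration under stated assumptions: SharingMode, SharingChoice, and chooseSharing() are hypothetical stand-ins rather than Vulkan names, used so that the sketch compiles without the Vulkan headers. It assumes that the queue family indices passed in concurrent mode should be unique, which is why duplicates are removed first.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for VkSharingMode.
enum class SharingMode { Exclusive, Concurrent };

// Hypothetical result type; its members map onto the sharingMode,
// queueFamilyIndexCount, and pQueueFamilyIndices fields of VkBufferCreateInfo.
struct SharingChoice {
    SharingMode mode;
    std::vector<uint32_t> queueFamilyIndices; // left empty in exclusive mode
};

// Takes the family index of every queue that will touch the resource.
SharingChoice chooseSharing(std::vector<uint32_t> families) {
    assert(!families.empty());

    // Several queues often belong to the same family, so drop duplicates.
    std::sort(families.begin(), families.end());
    families.erase(std::unique(families.begin(), families.end()),
                   families.end());

    if (families.size() == 1) {
        // Only one family is involved: exclusive mode, index array unused.
        return { SharingMode::Exclusive, {} };
    }
    return { SharingMode::Concurrent, families };
}
```

In a real application, the returned vector's size() and data() would be written into queueFamilyIndexCount and pQueueFamilyIndices, and the vector would need to stay alive until the creation call returns.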

Listing 2.3 demonstrates how to create a buffer object that is 1MiB in size, usable as the source or destination of transfer operations, and used on only one queue family at a time.

Listing 2.3: Creating a Buffer Object

static const VkBufferCreateInfo bufferCreateInfo =

{

VK_STRUCTURE_TYPE_BUFFER_CREATE_INFO, nullptr,

0,

1024 * 1024,

VK_BUFFER_USAGE_TRANSFER_SRC_BIT | VK_BUFFER_USAGE_TRANSFER_DST_BIT,

VK_SHARING_MODE_EXCLUSIVE,

0, nullptr

};

VkBuffer buffer = VK_NULL_HANDLE;

vkCreateBuffer(device, &bufferCreateInfo, nullptr, &buffer);

After the code in Listing 2.3 has run, a new VkBuffer handle is created and placed in the buffer variable. The buffer is not yet fully usable because it first needs to be backed with memory. This operation is covered in “Device Memory Management” later in this chapter.

Formats and Support

While buffers are relatively simple resources and do not have any notion of the format of the data they contain, images and buffer views (which we will introduce shortly) do include information about their content. Part of that information describes the format of the data in the resource. Some formats have special requirements or restrictions on their use in certain parts of the pipeline. For example, some formats might be readable but not writable, which is common with compressed formats.

In order to determine the properties and level of support for various formats, you can call vkGetPhysicalDeviceFormatProperties(), the prototype of which is

void vkGetPhysicalDeviceFormatProperties (

VkPhysicalDevice physicalDevice,

VkFormat format,

VkFormatProperties* pFormatProperties);

Because support for particular formats is a property of a physical device rather than a logical one, the physical device handle is specified in physicalDevice. If your application absolutely required support for a particular format or set of formats, you could check for support before even creating the logical device and reject particular physical devices from consideration early in application startup, for example. The format for which to check support is specified in format. If the device recognizes the format, it will write its level of support into the instance of the VkFormatProperties structure pointed to by pFormatProperties. The definition of the VkFormatProperties structure is

typedef struct VkFormatProperties {

VkFormatFeatureFlags linearTilingFeatures;

VkFormatFeatureFlags optimalTilingFeatures;

VkFormatFeatureFlags bufferFeatures;

} VkFormatProperties;

All three fields in the VkFormatProperties structure are bitfields made up from members of the VkFormatFeatureFlagBits enumeration. An image can be in one of two primary tiling modes: linear, in which image data is laid out linearly in memory, first by row, then by column, and so on; and optimal, in which image data is laid out in highly optimized patterns that make efficient use of the device’s memory subsystem. The linearTilingFeatures field indicates the level of support for a format in images in linear tiling, the optimalTilingFeatures field indicates the level of support for a format in images in optimal tiling, and the bufferFeatures field indicates the level of support for the format when used in a buffer.

The various bits that might be included in these fields are defined as follows:

VK_FORMAT_FEATURE_SAMPLED_IMAGE_BIT: The format may be used in read-only images that will be sampled by shaders.

VK_FORMAT_FEATURE_SAMPLED_IMAGE_FILTER_LINEAR_BIT: Filter modes that include linear filtering may be used when this format is used for a sampled image.

VK_FORMAT_FEATURE_STORAGE_IMAGE_BIT: The format may be used in read-write images that will be read and written by shaders.

VK_FORMAT_FEATURE_STORAGE_IMAGE_ATOMIC_BIT: The format may be used in read-write images that also support atomic operations performed by shaders.

VK_FORMAT_FEATURE_UNIFORM_TEXEL_BUFFER_BIT: The format may be used in a read-only texel buffer that will be read from by shaders.

VK_FORMAT_FEATURE_STORAGE_TEXEL_BUFFER_BIT: The format may be used in read-write texel buffers that may be read from and written to by shaders.

VK_FORMAT_FEATURE_STORAGE_TEXEL_BUFFER_ATOMIC_BIT: The format may be used in read-write texel buffers that also support atomic operations performed by shaders.

VK_FORMAT_FEATURE_VERTEX_BUFFER_BIT: The format may be used as the source of vertex data by the vertex-assembly stage of the graphics pipeline.

VK_FORMAT_FEATURE_COLOR_ATTACHMENT_BIT: The format may be used as a color attachment in the color-blend stage of the graphics pipeline.

VK_FORMAT_FEATURE_COLOR_ATTACHMENT_BLEND_BIT: Images with this format may be used as color attachments when blending is enabled.

VK_FORMAT_FEATURE_DEPTH_STENCIL_ATTACHMENT_BIT: The format may be used as a depth, stencil, or depth-stencil attachment.

VK_FORMAT_FEATURE_BLIT_SRC_BIT: The format may be used as the source of data in an image blit operation.

VK_FORMAT_FEATURE_BLIT_DST_BIT: The format may be used as the destination of an image blit operation.

Many formats will have a number of format support bits turned on. In fact, many formats are compulsory to support. A complete list of the mandatory formats is contained in the Vulkan specification. If a format is on the mandatory list, then it’s not strictly necessary to test for support. However, for completeness, implementations are expected to accurately report capabilities for all supported formats, even mandatory ones.
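Testing the reported bits against what an application needs is a plain mask comparison. The following is a minimal sketch, not Vulkan API code: FormatProps mirrors the three fields of VkFormatProperties, and Tiling, hasAll(), pickTiling(), and the two k... constants are hypothetical stand-ins so that the example compiles without the Vulkan headers. Real code would use the VK_FORMAT_FEATURE_* constants from the headers.

```cpp
#include <cassert>
#include <cstdint>

// Stand-in mirroring the three bitfields of VkFormatProperties.
struct FormatProps {
    uint32_t linearTilingFeatures;
    uint32_t optimalTilingFeatures;
    uint32_t bufferFeatures;
};

// Illustrative stand-ins for two VK_FORMAT_FEATURE_* bits.
const uint32_t kSampledImage    = 0x0001;
const uint32_t kColorAttachment = 0x0080;

enum class Tiling { Optimal, Linear, Unsupported };

// True only when every bit in `required` is also set in `supported`.
bool hasAll(uint32_t supported, uint32_t required) {
    return (supported & required) == required;
}

// Prefers optimal tiling and falls back to linear tiling.
Tiling pickTiling(const FormatProps& props, uint32_t required) {
    if (hasAll(props.optimalTilingFeatures, required)) return Tiling::Optimal;
    if (hasAll(props.linearTilingFeatures, required))  return Tiling::Linear;
    return Tiling::Unsupported;
}
```

Comparing against the full mask, rather than testing for a nonzero result, matters when more than one feature is required: a format that supports sampling but not rendering must not pass a check for both.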

The vkGetPhysicalDeviceFormatProperties() function really returns only a coarse set of flags indicating whether a format may be used at all under particular scenarios. For images especially, there may be more complex interactions between a specific format and its effect on the level of support within an image. Therefore, to retrieve even more information about the support for a format when used in images, you can call vkGetPhysicalDeviceImageFormatProperties(), the prototype of which is

VkResult vkGetPhysicalDeviceImageFormatProperties (

VkPhysicalDevice physicalDevice,

VkFormat format,

VkImageType type,

VkImageTiling tiling,

VkImageUsageFlags usage,

VkImageCreateFlags flags,

VkImageFormatProperties* pImageFormatProperties);

Like vkGetPhysicalDeviceFormatProperties(), vkGetPhysicalDeviceImageFormatProperties() takes a VkPhysicalDevice handle as its first parameter and reports support for the format for the physical device rather than for a logical one. The format you’re querying support for is passed in format.

The type of image that you want to ask about is specified in type. This should be one of the image types: VK_IMAGE_TYPE_1D, VK_IMAGE_TYPE_2D, or VK_IMAGE_TYPE_3D. Different image types might have different restrictions or enhancements. The tiling mode for the image is specified in tiling and can be either VK_IMAGE_TILING_LINEAR or VK_IMAGE_TILING_OPTIMAL, indicating linear or optimal tiling, respectively.

The intended use for the image is specified in the usage parameter. This is a bitfield indicating how the image is to be used. The various uses for an image are discussed later in this chapter. The flags field should be set to the same value that will be used when creating the image that will use the format.

If the format is recognized and supported by the Vulkan implementation, then it will write information about the level of support into the VkImageFormatProperties structure pointed to by pImageFormatProperties. The definition of VkImageFormatProperties is

typedef struct VkImageFormatProperties {

VkExtent3D maxExtent;

uint32_t maxMipLevels;

uint32_t maxArrayLayers;

VkSampleCountFlags sampleCounts;

VkDeviceSize maxResourceSize;

} VkImageFormatProperties;

The maxExtent member of VkImageFormatProperties reports the maximum size of an image that can be created with the specified format. For example, formats with fewer bits per pixel may support creating larger images than those with wider pixels. maxExtent is an instance of the VkExtent3D structure, the definition of which is

typedef struct VkExtent3D {

uint32_t width;

uint32_t height;

uint32_t depth;

} VkExtent3D;

The maxMipLevels field reports the maximum number of mipmap levels supported for an image of the requested format along with the other parameters passed to vkGetPhysicalDeviceImageFormatProperties(). In most cases, maxMipLevels will report either the length of a complete mipmap chain, floor(log2(max(maxExtent.width, maxExtent.height, maxExtent.depth))) + 1, when mipmaps are supported or 1 when mipmaps are not supported.
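The length of a complete mipmap chain can be computed with integer arithmetic alone. The sketch below assumes the usual rule that a complete chain has floor(log2(largest dimension)) + 1 levels. The function name fullMipLevels() is hypothetical and is not part of Vulkan.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Number of levels in a complete mip chain for the given base size:
// floor(log2(largest dimension)) + 1, computed by counting halvings.
uint32_t fullMipLevels(uint32_t width, uint32_t height, uint32_t depth) {
    assert(width > 0 && height > 0 && depth > 0);
    uint32_t largest = std::max(width, std::max(height, depth));
    uint32_t levels = 0;
    while (largest > 0) {
        ++levels;       // count the current level
        largest >>= 1;  // the next level is half the size
    }
    return levels;
}
```

For example, a 1,024 × 1,024 image has 11 levels in its complete chain: 1,024, 512, and so on down to a single texel.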

The maxArrayLayers field reports the maximum number of array layers supported for the image. Again, this is likely to be a fairly high number if arrays are supported or 1 if arrays are not supported.

If the image format supports multisampling, then the supported sample counts are reported through the sampleCounts field. This is a bitfield containing one bit for each supported sample count. If bit n is set, then images with 2^n samples are supported in this format. If the format is supported at all, at least one bit of this field will be set. It is very unlikely that you will ever see a format that supports multisampling but does not support a single sample per pixel.
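Because bit n stands for 2 to the power n samples, the numeric value of each set bit is itself the sample count. A minimal sketch of decoding the field follows. The helper names supportedSampleCounts() and bestSampleCount() are hypothetical, and the field is treated as a plain 32-bit integer so that the example compiles without the Vulkan headers.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Expands a sampleCounts-style bitfield into a list of sample counts,
// in ascending order. The value of each set bit is the sample count.
std::vector<uint32_t> supportedSampleCounts(uint32_t sampleCounts) {
    std::vector<uint32_t> counts;
    for (uint32_t n = 0; n < 32; ++n) {
        const uint32_t bit = 1u << n;
        if (sampleCounts & bit) counts.push_back(bit);
    }
    return counts;
}

// Largest supported count that does not exceed `wanted`, or 0 if none.
uint32_t bestSampleCount(uint32_t sampleCounts, uint32_t wanted) {
    assert(wanted >= 1);
    uint32_t best = 0;
    for (uint32_t count : supportedSampleCounts(sampleCounts)) {
        if (count <= wanted) best = count;
    }
    return best;
}
```

An application that would like 16 samples but can accept fewer could call bestSampleCount(props.sampleCounts, 16), where props is assumed to be a filled-in VkImageFormatProperties structure.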

Finally, the maxResourceSize field specifies the maximum size, in bytes, that a resource of this format might be. This should not be confused with the maximum extent, which reports the maximum size in each of the dimensions that might be supported. For example, if an implementation reports that it supports images of 16,384 × 16,384 pixels × 2,048 layers with a format containing 128 bits per pixel, then creating an image of the maximum extent in every dimension would produce 8TiB of image data. It’s unlikely that an implementation really supports creating an 8TiB image. However, it might well support creating an 8 × 8 × 2,048 array or a 16,384 × 16,384 nonarray image, either of which would fit into a more moderate memory footprint.
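The arithmetic behind these figures is easy to check. The sketch below is a rough estimate only: it counts a single mipmap level and ignores any padding or alignment an implementation may add, and the helper names roughImageBytes() and fitsResourceLimit() are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Rough size in bytes of one mip level of an array image.
// 64-bit arithmetic is used because the product overflows 32 bits easily.
uint64_t roughImageBytes(uint32_t width, uint32_t height,
                         uint32_t layers, uint32_t bitsPerTexel) {
    assert(bitsPerTexel % 8 == 0);
    return uint64_t(width) * height * layers * (bitsPerTexel / 8);
}

// Compares an estimate against a maxResourceSize-style limit.
bool fitsResourceLimit(uint64_t bytes, uint64_t maxResourceSize) {
    return bytes <= maxResourceSize;
}
```

With 128 bits per texel, the full 16,384 × 16,384 × 2,048 image comes to 8TiB, while the 8 × 8 × 2,048 array needs only 2MiB and the 16,384 × 16,384 nonarray image needs 4GiB.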

Images

Images are more complex than buffers in that they are multidimensional; have specific layouts and format information; and can be used as the source and destination for complex operations such as filtering, blending, depth or stencil testing, and so on. Images are created using the vkCreateImage() function, the prototype of which is

VkResult vkCreateImage (

VkDevice device,

const VkImageCreateInfo* pCreateInfo,

const VkAllocationCallbacks* pAllocator,

VkImage* pImage);

The device that is used to create the image is passed in the device parameter. Again, the description of the image is passed through a data structure, the address of which is passed in the pCreateInfo parameter. This is a pointer to an instance of the VkImageCreateInfo structure, the definition of which is

typedef struct VkImageCreateInfo {

VkStructureType sType;

const void* pNext;

VkImageCreateFlags flags;

VkImageType imageType;

VkFormat format;

VkExtent3D extent;

uint32_t mipLevels;

uint32_t arrayLayers;

VkSampleCountFlagBits samples;

VkImageTiling tiling;

VkImageUsageFlags usage;

VkSharingMode sharingMode;

uint32_t queueFamilyIndexCount;

const uint32_t* pQueueFamilyIndices;

VkImageLayout initialLayout;

} VkImageCreateInfo;

As you can see, this is a significantly more complex structure than the VkBufferCreateInfo structure. The common fields, sType and pNext, appear at the top, as with most other Vulkan structures. The sType field should be set to VK_STRUCTURE_TYPE_IMAGE_CREATE_INFO.

The flags field of VkImageCreateInfo contains flags describing some of the properties of the image. These are a selection of the VkImageCreateFlagBits enumeration. The first three—VK_IMAGE_CREATE_SPARSE_BINDING_BIT, VK_IMAGE_CREATE_SPARSE_RESIDENCY_BIT, and VK_IMAGE_CREATE_SPARSE_ALIASED_BIT—are used for controlling sparse images, which are covered later in this chapter.

If VK_IMAGE_CREATE_MUTABLE_FORMAT_BIT is set, then you can create views of the image with a different format from the parent. Image views are essentially a special type of image that shares data and layout with its parent but can override parameters such as format. This allows data in the image to be interpreted in multiple ways at the same time. Using image views is a way to create two different aliases for the same data. Image views are covered later in this chapter. If VK_IMAGE_CREATE_CUBE_COMPATIBLE_BIT is set, then you will be able to create cube map views of the image. Cube maps are covered later in this chapter.

The imageType field of the VkImageCreateInfo structure specifies the type of image that you want to create. The image type is essentially the dimensionality of the image and can be one of VK_IMAGE_TYPE_1D, VK_IMAGE_TYPE_2D, or VK_IMAGE_TYPE_3D for a 1D, 2D, or 3D image, respectively.

Images also have a format, which describes how texel data is stored in memory and how it is interpreted by Vulkan. The format of the image is specified by the format field of the VkImageCreateInfo structure and must be one of the image formats represented by a member of the VkFormat enumeration. Vulkan supports a large number of formats—too many to list here. We will use some of the formats in the book examples and explain how they work at that time. For the rest, refer to the Vulkan specification.

The extent of an image is its size in texels. This is specified in the extent field of the VkImageCreateInfo structure. This is an instance of the VkExtent3D structure, which has three members: width, height, and depth. These should be set to the width, height, and depth of the desired image, respectively. For 1D images, height should be set to 1, and for 1D and 2D images, depth should be set to 1. Rather than alias the next-higher dimension as an array count, Vulkan uses an explicit array size, which is set in arrayLayers.

The maximum size of an image that can be created is device-dependent. To determine the largest image size, call vkGetPhysicalDeviceProperties() and check the maxImageDimension1D, maxImageDimension2D, and maxImageDimension3D fields of the embedded VkPhysicalDeviceLimits structure. maxImageDimension1D contains the maximum supported width for 1D images, maxImageDimension2D the maximum side length for 2D images, and maxImageDimension3D the maximum side length for 3D images. Likewise, the maximum number of layers in an array image is contained in the maxImageArrayLayers field. If the image is a cube map, then the maximum side length for the cube is stored in maxImageDimensionCube.

maxImageDimension1D, maxImageDimension2D, and maxImageDimensionCube are guaranteed to be at least 4,096 texels, and maxImageDimension3D and maxImageArrayLayers are guaranteed to be at least 256. If the image you want to create is smaller than these dimensions, then there’s no need to check the device limits. Further, it’s quite common to find Vulkan implementations that support significantly higher limits. It would be reasonable to make larger image sizes a hard requirement rather than trying to create fallback paths for lower-end devices.
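A check against the guaranteed minimums might look like the following sketch. It assumes minimums of 4,096 texels for 1D, 2D, and cube images and 256 for 3D images and array layers. ImageKind, the k... constants, and withinGuaranteedLimits() are hypothetical names, not part of Vulkan.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical classification of the image being created.
enum class ImageKind { Dim1D, Dim2D, Dim3D, Cube };

// Assumed guaranteed minimums for the corresponding device limits.
const uint32_t kMinDim1D       = 4096;
const uint32_t kMinDim2D       = 4096;
const uint32_t kMinDim3D       = 256;
const uint32_t kMinDimCube     = 4096;
const uint32_t kMinArrayLayers = 256;

// Returns true when the image fits within the guaranteed minimums,
// in which case the device limits do not need to be queried.
bool withinGuaranteedLimits(ImageKind kind, uint32_t width, uint32_t height,
                            uint32_t depth, uint32_t arrayLayers) {
    assert(width > 0 && height > 0 && depth > 0 && arrayLayers > 0);
    if (arrayLayers > kMinArrayLayers) return false;
    switch (kind) {
    case ImageKind::Dim1D:
        return width <= kMinDim1D;
    case ImageKind::Dim2D:
        return width <= kMinDim2D && height <= kMinDim2D;
    case ImageKind::Dim3D:
        return width <= kMinDim3D && height <= kMinDim3D && depth <= kMinDim3D;
    case ImageKind::Cube:
        return width <= kMinDimCube && height <= kMinDimCube;
    }
    return false;
}
```

When the function returns false, the image may still be supported; it only means that the limits reported by the physical device need to be consulted before the image is created.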

The number of mipmap levels to create in the image is specified in mipLevels. Mipmapping is the process of using a set of prefiltered images of successively lower resolution in order to improve image quality when undersampling the image. The images that make up the various mipmap levels are arranged in a pyramid, as shown in Figure 2.1.

Figure 2.1: Mipmap Image Layout

In a mipmapped texture, the base level is the lowest-numbered level (usually level zero) and has the resolution of the texture. Each successive level is half the size of the level above it in each dimension, with any dimension that has already reached a single texel staying at one, until the level is a single texel in every dimension. Sampling from mipmapped textures is covered in some detail in Chapter 6, “Shaders and Pipelines.”
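The size of any level follows directly from the base extent. The sketch below assumes the common rule that each dimension is halved once per level, rounding down, and never drops below one texel. Extent3D is a stand-in that mirrors VkExtent3D, and mipExtent() is a hypothetical helper.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Stand-in mirroring VkExtent3D.
struct Extent3D {
    uint32_t width;
    uint32_t height;
    uint32_t depth;
};

// Extent of mip level `level`, where level 0 is the base level.
// Each dimension is halved per level and clamped to at least one texel.
Extent3D mipExtent(Extent3D base, uint32_t level) {
    assert(level < 32); // shifting a 32-bit value by 32 or more is undefined
    return { std::max<uint32_t>(1, base.width  >> level),
             std::max<uint32_t>(1, base.height >> level),
             std::max<uint32_t>(1, base.depth  >> level) };
}
```

For a 1,024 × 512 base level, level 3 is 128 × 64 texels, and level 10 is a single texel.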

Likewise, the number of samples in the image is specified in samples. This field is somewhat unlike the others. It must be a member of the VkSampleCountFlagBits enumeration, which is actually defined as bits to be used in a bitfield. However, only power-of-two sample counts are currently defined in Vulkan, which means they’re “1-hot” values, so single-bit enumerant values work just fine.

The next few fields describe how the image will be used. First is the tiling mode, specified in the tiling field. This is a member of the VkImageTiling enumeration, which contains only VK_IMAGE_TILING_LINEAR or VK_IMAGE_TILING_OPTIMAL. Linear tiling means that image data is laid out left to right, top to bottom, such that if you map the underlying memory and write it with the CPU, it would form a linear image. Meanwhile, optimal tiling is an opaque representation used by Vulkan to lay data out in memory to improve efficiency of the memory subsystem on the device. This is generally what you should choose unless you plan to map and manipulate the image with the CPU. Optimal tiling will likely perform significantly better than linear tiling in most operations, and linear tiling might not be supported at all for some operations or formats, depending on the Vulkan implementation.

The usage field is a bitfield describing where the image will be used. This is similar to the usage field in the VkBufferCreateInfo structure. The usage field here is made up of members of the VkImageUsageFlagBits enumeration, the members of which are as follows:

VK_IMAGE_USAGE_TRANSFER_SRC_BIT and VK_IMAGE_USAGE_TRANSFER_DST_BIT mean that the image will be the source or destination of transfer commands, respectively. Transfer commands operating on images are covered in Chapter 4, “Moving Data.”

VK_IMAGE_USAGE_SAMPLED_BIT means that the image can be sampled from in a shader.

VK_IMAGE_USAGE_STORAGE_BIT means that the image can be used for general-purpose storage, including writes from a shader.

VK_IMAGE_USAGE_COLOR_ATTACHMENT_BIT means that the image can be bound as a color attachment and drawn into using graphics operations. Framebuffers and their attachments are covered in Chapter 7, “Graphics Pipelines.”

VK_IMAGE_USAGE_DEPTH_STENCIL_ATTACHMENT_BIT means that the image can be bound as a depth or stencil attachment and used for depth or stencil testing (or both). Depth and stencil operations are covered in Chapter 10, “Fragment Processing.”

VK_IMAGE_USAGE_TRANSIENT_ATTACHMENT_BIT means that the image can be used as a transient attachment, which is a special kind of image used to store intermediate results of a graphics operation. Transient attachments are covered in Chapter 13, “Multipass Rendering.”

VK_IMAGE_USAGE_INPUT_ATTACHMENT_BIT means that the image can be used as a special input during graphics rendering. Input images differ from regular sampled or storage images in that only fragment shaders can read from them and only at their own pixel location. Input attachments are also covered in detail in Chapter 13, “Multipass Rendering.”

The sharingMode is identical in function to the similarly named field in the VkBufferCreateInfo structure described in “Buffers” earlier in this chapter. If it is set to VK_SHARING_MODE_EXCLUSIVE, the image will be used with only a single queue family at a time. If it is set to VK_SHARING_MODE_CONCURRENT, then the image may be accessed by multiple queues concurrently. Likewise, queueFamilyIndexCount and pQueueFamilyIndices provide similar function and are used when sharingMode is VK_SHARING_MODE_CONCURRENT.

Finally, images have a layout, which specifies in part how the image will be used at any given moment. The initialLayout field determines which layout the image will be created in. The available layouts are the members of the VkImageLayout enumeration, which are

VK_IMAGE_LAYOUT_UNDEFINED: The state of the image is undefined. The image must be moved into one of the other layouts before it can be used for almost anything.

VK_IMAGE_LAYOUT_GENERAL: This is the “lowest common denominator” layout and is used where no other layout matches the intended use case. Images in VK_IMAGE_LAYOUT_GENERAL can be used almost anywhere in the pipeline.

VK_IMAGE_LAYOUT_COLOR_ATTACHMENT_OPTIMAL: The image is going to be rendered into using a graphics pipeline.

VK_IMAGE_LAYOUT_DEPTH_STENCIL_ATTACHMENT_OPTIMAL: The image is going to be used as a depth or stencil buffer as part of a graphics pipeline.

VK_IMAGE_LAYOUT_DEPTH_STENCIL_READ_ONLY_OPTIMAL: The image is going to be used for depth testing but will not be written to by the graphics pipeline. In this special state, the image can also be read from in shaders.

VK_IMAGE_LAYOUT_SHADER_READ_ONLY_OPTIMAL: The image will be bound for reading by shaders. This layout is typically used when an image is going to be used as a texture.

VK_IMAGE_LAYOUT_TRANSFER_SRC_OPTIMAL: The image is the source of copy operations.

VK_IMAGE_LAYOUT_TRANSFER_DST_OPTIMAL: The image is the destination of copy operations.

VK_IMAGE_LAYOUT_PREINITIALIZED: The image contains data placed there by an external actor, such as by mapping the underlying memory and writing into it from the host.

VK_IMAGE_LAYOUT_PRESENT_SRC_KHR: The image is used as the source for presentation, which is the act of showing it to the user.

Images can be moved from layout to layout, and we will cover the various layouts as we introduce the topics related to them. However, images must initially be created in either the VK_IMAGE_LAYOUT_UNDEFINED or the VK_IMAGE_LAYOUT_PREINITIALIZED layout. VK_IMAGE_LAYOUT_PREINITIALIZED should be used only when you have data in memory that you will bind to the image resource immediately. VK_IMAGE_LAYOUT_UNDEFINED should be used when you plan to move the resource to another layout before use. Images can be moved out of VK_IMAGE_LAYOUT_UNDEFINED layout at little or no cost at any time.

The mechanism for changing the layout of an image is known as a pipeline barrier, or simply a barrier. A barrier not only serves as a means to change the layout of a resource but can also synchronize access to that resource by different stages in the Vulkan pipeline and even by different queues running concurrently on the same device. As such, a pipeline barrier is fairly complex and quite difficult to get right. Pipeline barriers are discussed in some detail in Chapter 4, “Moving Data,” and are further explained in the sections of the book where they are relevant.

Listing 2.4 shows a simple example of creating an image resource.

Listing 2.4: Creating an Image Object

VkImage image = VK_NULL_HANDLE;

VkResult result = VK_SUCCESS;

static const

VkImageCreateInfo imageCreateInfo =

{

VK_STRUCTURE_TYPE_IMAGE_CREATE_INFO, // sType

nullptr, // pNext

0, // flags

VK_IMAGE_TYPE_2D, // imageType

VK_FORMAT_R8G8B8A8_UNORM, // format

{ 1024, 1024, 1 }, // extent

10, // mipLevels

1, // arrayLayers

VK_SAMPLE_COUNT_1_BIT, // samples

VK_IMAGE_TILING_OPTIMAL, // tiling

VK_IMAGE_USAGE_SAMPLED_BIT, // usage

VK_SHARING_MODE_EXCLUSIVE, // sharingMode

0, // queueFamilyIndexCount

nullptr, // pQueueFamilyIndices

VK_IMAGE_LAYOUT_UNDEFINED // initialLayout

};

result = vkCreateImage(device, &imageCreateInfo, nullptr, &image);

The image created by the code in Listing 2.4 is a 1,024 × 1,024 texel 2D image with a single sample, in VK_FORMAT_R8G8B8A8_UNORM format and optimal tiling. The code creates it in the undefined layout, which means that we can move it to another layout later to place data into it. The image is to be used as a texture in one of our shaders, so we set the VK_IMAGE_USAGE_SAMPLED_BIT usage flag. In our simple applications, we use only a single queue, so we set the sharing mode to exclusive.

Linear Images

As discussed earlier, two tiling modes are available for use in image resources: VK_IMAGE_TILING_LINEAR and VK_IMAGE_TILING_OPTIMAL. The VK_IMAGE_TILING_OPTIMAL mode represents an opaque, implementation-defined layout that is intended to improve the efficiency of the memory subsystem of the device for read and write operations on the image. However, VK_IMAGE_TILING_LINEAR is a transparent layout of the data that is intended to be intuitive. Pixels in the image are laid out left to right, top to bottom. Therefore, it’s possible to map the memory used to back the resource to allow the host to read and write to it directly.

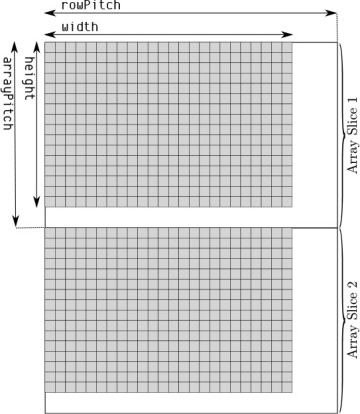

In addition to the image’s width, height, depth, and pixel format, a few pieces of information are needed to enable host access to the underlying image data. These are the row pitch of the image, which is the distance in bytes between the start of each row of the image; the array pitch, which is the distance between array layers; and the depth pitch, which is the distance between depth slices. Of course, the array pitch and depth pitch apply only to array or 3D images, respectively, and the row pitch applies only to 2D or 3D images.

An image is normally made up of several subresources. Some formats have more than one aspect, which is a component of the image such as the depth or stencil component in a depth-stencil image. Mipmap levels and array layers are also considered to be separate subresources. The layout of each subresource within an image may be different and therefore has different layout information. This information can be queried by calling vkGetImageSubresourceLayout(), the prototype of which is

void vkGetImageSubresourceLayout (

VkDevice device,

VkImage image,

const VkImageSubresource* pSubresource,

VkSubresourceLayout* pLayout);

The device that owns the image that is being queried is passed in device, and the image being queried is passed in image. A description of the subresource is passed through an instance of the VkImageSubresource structure, a pointer to which is passed in the pSubresource parameter. The definition of VkImageSubresource is

typedef struct VkImageSubresource {

VkImageAspectFlags aspectMask;

uint32_t mipLevel;

uint32_t arrayLayer;

} VkImageSubresource;

The aspect of the image whose layout you want to query is specified in aspectMask. For color images, this should be VK_IMAGE_ASPECT_COLOR_BIT, and for depth, stencil, or depth-stencil images, this should be either VK_IMAGE_ASPECT_DEPTH_BIT or VK_IMAGE_ASPECT_STENCIL_BIT, because only a single aspect can be queried in each call. The mipmap level for which the parameters are to be returned is specified in mipLevel, and the array layer is specified in arrayLayer. You should normally set arrayLayer to zero, as the parameters of the image aren’t expected to change across layers.

When vkGetImageSubresourceLayout() returns, it will have written the layout parameters of the subresource into the VkSubresourceLayout structure pointed to by pLayout. The definition of VkSubresourceLayout is

typedef struct VkSubresourceLayout {

VkDeviceSize offset;

VkDeviceSize size;

VkDeviceSize rowPitch;

VkDeviceSize arrayPitch;

VkDeviceSize depthPitch;

} VkSubresourceLayout;

The size of the memory region consumed by the requested subresource is returned in size, and the offset within the resource where the subresource begins is returned in offset. The rowPitch, arrayPitch, and depthPitch fields contain the row, array layer, and depth slice pitches, respectively. The unit of these fields is always bytes, regardless of the pixel format of the images. Pixels within a row are always tightly packed. Figure 2.2 illustrates how these parameters represent memory layout of an image. In the figure, the valid image data is represented by the grey grid, and padding around the image is shown as blank space.

Figure 2.2: Memory Layout of LINEAR Tiled Images

Given the memory layout of an image in LINEAR tiling mode, it is possible to trivially compute the memory address for a single texel within the image. Loading image data into a LINEAR tiled image is then simply a case of loading scanlines from the image into memory at the right location. For many texel formats and image dimensions, it is highly likely that the image’s rows are tightly packed in memory; that is, the rowPitch field of the VkSubresourceLayout structure is equal to the subresource’s width multiplied by the size of a texel in bytes. In this case, many image-loading libraries will be able to load the image directly into the mapped memory of the image.
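As a rough sketch of the address computation described above, the following C++ helpers compute the byte offset of a texel and copy tightly packed scanlines into a mapped LINEAR image. The SubresourceLayout struct is a stand-in that mirrors the fields of VkSubresourceLayout so that the example compiles without the Vulkan headers. The names texelSize, texelOffset(), and copyRows() are hypothetical, and the code assumes an uncompressed format whose texels occupy a whole number of bytes.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Stand-in mirroring the fields of VkSubresourceLayout (all values in bytes).
struct SubresourceLayout {
    std::uint64_t offset;
    std::uint64_t size;
    std::uint64_t rowPitch;
    std::uint64_t arrayPitch;
    std::uint64_t depthPitch;
};

// Byte offset, from the start of the image's memory, of texel (x, y, z).
// 'layer' counts array layers relative to the layer that was queried.
std::uint64_t texelOffset(const SubresourceLayout& layout,
                          std::uint64_t texelSize,
                          std::uint64_t x, std::uint64_t y,
                          std::uint64_t z, std::uint64_t layer)
{
    return layout.offset
         + layer * layout.arrayPitch
         + z * layout.depthPitch
         + y * layout.rowPitch
         + x * texelSize;
}

// Copy 'height' tightly packed rows from 'src' into mapped image memory.
// 'mapped' points at the start of the image's memory (hypothetical setup:
// the caller has already mapped the memory bound to the image).
void copyRows(void* mapped, const SubresourceLayout& layout,
              const void* src, std::uint64_t width, std::uint64_t height,
              std::uint64_t texelSize)
{
    const std::uint64_t srcRowBytes = width * texelSize;
    assert(srcRowBytes <= layout.rowPitch);  // each row must fit in the pitch

    unsigned char* dst = static_cast<unsigned char*>(mapped) + layout.offset;
    const unsigned char* in = static_cast<const unsigned char*>(src);
    for (std::uint64_t y = 0; y < height; ++y) {
        std::memcpy(dst + y * layout.rowPitch, in + y * srcRowBytes,
                    static_cast<std::size_t>(srcRowBytes));
    }
}
```

When rowPitch happens to equal width times texelSize, the destination rows are contiguous and the loop in copyRows() is equivalent to one large copy.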

Nonlinear Encoding

You may have noticed that some of the Vulkan image formats include SRGB in their names. This refers to sRGB color encoding, which is a nonlinear encoding that uses a gamma curve approximating that of CRTs. Although CRTs are all but obsolete now, sRGB encoding is still in widespread use for texture and image data.

Because the amount of light energy produced by a CRT is not linear with the amount of electrical energy used to produce the electron beam that excites the phosphor, an inverse mapping must be applied to color signals to make a linear rise in numeric value produce a linear increase in light output. The amount of light output by a CRT is approximately

$L_{out} = V_{in}^{\gamma}$

The standard value of γ in NTSC television systems (common in North America, parts of South America, and parts of Asia) is 2.2. Meanwhile, the standard value of γ in SECAM and PAL systems (common in Europe, Africa, Australia, and other regions of Asia) is 2.8.

The sRGB curve attempts to compensate for this by applying gamma correction to linear data in memory. The standard sRGB transfer function is not a pure gamma curve but is made up of a short linear section followed by a curved, gamma-corrected section. The function applied to data to go from linear to sRGB space is

if (cl >= 1.0)

{

cs = 1.0;

}

else if (cl <= 0.0)

{

cs = 0.0;

}

else if (cl < 0.0031308)

{

cs = 12.92 * cl;

}

else

{

cs = 1.055 * pow(cl, 0.41666) - 0.055;

}

To go from sRGB space to linear space, the following transform is made:

if (cs >= 1.0)

{

cl = 1.0;

}

else if (cs <= 0.0)

{

cl = 0.0;

}

else if (cs <= 0.04045)

{

cl = cs / 12.92;

}

else

{

cl = pow((cs + 0.055) / 1.055, 2.4);

}

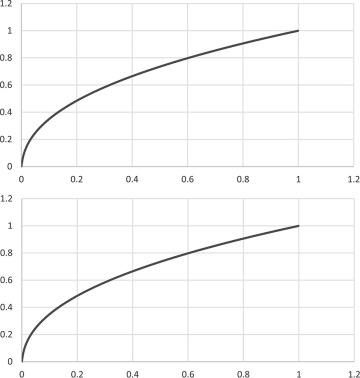

In both code snippets, cs is the sRGB color space value, and cl is the linear value. Figure 2.3 shows a side-by-side comparison of a simple γ = 2.2 curve and the standard sRGB transfer function. As you can see in the figure, the curves for sRGB correction (shown on the top) and a simple power curve (shown on the bottom) are almost identical. While Vulkan implementations are expected to implement sRGB using the official definition, if you need to perform the transformation manually in your shaders, you may be able to get away with a simple power function without accumulating too much error.
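To get a feel for how close the two curves are, the following sketch samples both over the range 0 to 1 and reports the largest absolute difference. It uses the standard sRGB constants (offset 0.055, exponent 2.4) for the exact curve and a plain γ = 2.2 power function for the approximation. The function names are hypothetical, and whether the resulting error is acceptable depends on your application.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// sRGB-to-linear transform using the standard piecewise definition.
double srgbToLinear(double cs)
{
    if (cs >= 1.0) return 1.0;
    if (cs <= 0.0) return 0.0;
    if (cs <= 0.04045) return cs / 12.92;
    return std::pow((cs + 0.055) / 1.055, 2.4);
}

// Simple power-curve approximation with gamma = 2.2.
double gammaToLinear(double cs)
{
    const double clamped = std::min(1.0, std::max(0.0, cs));
    return std::pow(clamped, 2.2);
}

// Largest absolute difference between the two curves over steps + 1
// evenly spaced samples in [0, 1].
double maxApproximationError(int steps)
{
    assert(steps > 0);
    double worst = 0.0;
    for (int i = 0; i <= steps; ++i) {
        const double cs = static_cast<double>(i) / steps;
        const double diff = std::fabs(srgbToLinear(cs) - gammaToLinear(cs));
        worst = std::max(worst, diff);
    }
    return worst;
}
```

Calling maxApproximationError() with a few hundred steps gives a single number that you can compare against the precision of the format you are working with.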

Figure 2.3: Gamma Curves for sRGB (Top) and Simple Powers (Bottom)

When rendering to an image in sRGB format, linear values produced by your shaders are transformed to sRGB encoding before being written into the image. When reading from an image in sRGB format, texels are transformed from sRGB format back to linear space before being returned to your shader.

Blending and interpolation always occur in linear space such that data read from a framebuffer is first transformed from sRGB to linear space and then blended with the source data in linear space, and the final result is transformed back to sRGB encoding before being written into the framebuffer.
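The decode, blend, and re-encode sequence can be sketched for a single channel as follows. This is only an illustration of the order of operations, not a description of how any particular device implements blending. The function names are hypothetical, and a simple source-alpha blend is assumed.

```cpp
#include <cassert>
#include <cmath>

// sRGB-to-linear transform (standard piecewise definition).
double srgbToLinear(double cs)
{
    if (cs >= 1.0) return 1.0;
    if (cs <= 0.0) return 0.0;
    if (cs <= 0.04045) return cs / 12.92;
    return std::pow((cs + 0.055) / 1.055, 2.4);
}

// Linear-to-sRGB transform (standard piecewise definition).
double linearToSrgb(double cl)
{
    if (cl >= 1.0) return 1.0;
    if (cl <= 0.0) return 0.0;
    if (cl < 0.0031308) return 12.92 * cl;
    return 1.055 * std::pow(cl, 1.0 / 2.4) - 0.055;
}

// Blend a linear source value over an sRGB-encoded destination value and
// return the sRGB-encoded result that would be stored in the framebuffer.
double blendOverSrgb(double srcLinear, double dstSrgb, double srcAlpha)
{
    assert(srcAlpha >= 0.0 && srcAlpha <= 1.0);
    const double dstLinear = srgbToLinear(dstSrgb);            // 1. decode
    const double blended = srcLinear * srcAlpha
                         + dstLinear * (1.0 - srcAlpha);       // 2. blend
    return linearToSrgb(blended);                              // 3. re-encode
}
```

For example, blending white over black with an alpha of 0.5 produces a linear value of 0.5, which is stored as an sRGB value of about 0.735 rather than 0.5.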

Rendering in sRGB space provides more precision in darker colors and can result in fewer banding artifacts and richer colors. However, for best image quality, including high-dynamic-range rendering, it’s best to choose a floating-point color format and render in a linear space, converting to sRGB as late as possible before display.

Compressed Image Formats

Image resources are likely to be the largest consumers of device memory in your application. For this reason, Vulkan provides the capability for images to be compressed. Image compression provides two significant benefits to an application:

It reduces the total amount of memory consumed by image resources used by the application.

It reduces the total memory bandwidth consumed while accessing those resources.

All currently defined compressed image formats in Vulkan are what are known as block compressed formats. Texels within an image are compressed in small square or rectangular blocks that can be decompressed independently of all others. All formats are lossy, and the compression ratio is not competitive with formats such as JPEG or even PNG. However, decompression is fast and cheap to implement in hardware, and random access to texels is relatively straightforward.

Support for various compressed image formats is optional, but all Vulkan implementations are required to support at least one family of formats. You can determine which family of compressed formats is supported by checking various fields of the device’s VkPhysicalDeviceFeatures structure as returned from a call to vkGetPhysicalDeviceFeatures().

If textureCompressionBC is VK_TRUE, then the device supports the block compressed formats, also known as BC formats. The BC family includes

BC1: Made up of the VK_FORMAT_BC1_RGB_UNORM_BLOCK, VK_FORMAT_BC1_RGB_SRGB_BLOCK, VK_FORMAT_BC1_RGBA_UNORM_BLOCK, and VK_FORMAT_BC1_RGBA_SRGB_BLOCK formats, BC1 encodes images in blocks of 4 × 4 texels, with each block represented as a 64-bit quantity.

BC2: Consisting of VK_FORMAT_BC2_UNORM_BLOCK and VK_FORMAT_BC2_SRGB_BLOCK, BC2 encodes images in blocks of 4 × 4 texels, with each block represented as a 128-bit quantity. BC2 images always have an alpha channel. The encoding for the RGB channels is the same as with BC1 RGB formats, and the alpha is stored as 4 bits per texel in a second 64-bit field before the BC1 encoded RGB data.

BC3: The VK_FORMAT_BC3_UNORM_BLOCK and VK_FORMAT_BC3_SRGB_BLOCK formats make up the BC3 family, again encoding texels in 4 × 4 blocks, with each block consuming 128 bits of storage. The first 64-bit quantity stores compressed alpha values, allowing coherent alpha data to be stored with higher precision than BC2. The second 64-bit quantity stores compressed color data in a similar form to BC1.

BC4: VK_FORMAT_BC4_UNORM_BLOCK and VK_FORMAT_BC4_SNORM_BLOCK represent unsigned and signed single-channel formats, again encoded as 4 × 4 blocks of texels, with each block consuming 64 bits of storage. The encoding of the single-channel data is essentially the same as that of the alpha channel of a BC3 image.

BC5: Made up of VK_FORMAT_BC5_UNORM_BLOCK and VK_FORMAT_BC5_SNORM_BLOCK, the BC5 family is a two-channel format, with each 4 × 4 block essentially consisting of two BC4 blocks back-to-back.

BC6: The VK_FORMAT_BC6H_SFLOAT_BLOCK and VK_FORMAT_BC6H_UFLOAT_BLOCK formats are signed and unsigned floating-point compressed formats, respectively. Each 4 × 4 block of RGB texels is stored in 128 bits of data.

BC7: VK_FORMAT_BC7_UNORM_BLOCK and VK_FORMAT_BC7_SRGB_BLOCK are four-channel formats with each 4 × 4 block of RGBA texel data stored in a 128-bit quantity.

If the textureCompressionETC2 member of VkPhysicalDeviceFeatures is VK_TRUE, then the device supports the ETC formats, including ETC2 and EAC. The following formats are included in this family:

VK_FORMAT_ETC2_R8G8B8_UNORM_BLOCK and VK_FORMAT_ETC2_R8G8B8_SRGB_BLOCK: Unsigned formats where 4 × 4 blocks of RGB texels are packed into 64 bits of compressed data.

VK_FORMAT_ETC2_R8G8B8A1_UNORM_BLOCK and VK_FORMAT_ETC2_R8G8B8A1_SRGB_BLOCK: Unsigned formats where 4 × 4 blocks of RGB texels plus a one-bit alpha value per texel are packed into 64 bits of compressed data.

VK_FORMAT_ETC2_R8G8B8A8_UNORM_BLOCK and VK_FORMAT_ETC2_R8G8B8A8_SRGB_BLOCK: Each 4 × 4 block of texels is represented as a 128-bit quantity. Each texel has 4 channels.

VK_FORMAT_EAC_R11_UNORM_BLOCK and VK_FORMAT_EAC_R11_SNORM_BLOCK: Unsigned and signed single-channel formats with each 4 × 4 block of texels represented as a 64-bit quantity.

VK_FORMAT_EAC_R11G11_UNORM_BLOCK and VK_FORMAT_EAC_R11G11_SNORM_BLOCK: Unsigned and signed two-channel formats with each 4 × 4 block of texels represented as a 128-bit quantity.

The final family is the ASTC family. If the textureCompressionASTC_LDR member of VkPhysicalDeviceFeatures is VK_TRUE, then the device supports the ASTC formats. You may have noticed that for all of the formats in the BC and ETC families, the block size is fixed at 4 × 4 texels, but depending on format, the texel format and number of bits used to store the compressed data vary.

ASTC is different here in that the number of bits per block is always 128, and all ASTC formats have four channels. However, the block size in texels can vary. The following block sizes are supported: 4 × 4, 5 × 4, 5 × 5, 6 × 5, 6 × 6, 8 × 5, 8 × 6, 8 × 8, 10 × 5, 10 × 6, 10 × 8, 10 × 10, 12 × 10, and 12 × 12.

The format of the token name for ASTC formats is formulated as VK_FORMAT_ASTC_{N}x{M}_{encoding}_BLOCK, where {N} and {M} represent the width and height of the block, and {encoding} is either UNORM or SRGB, depending on whether the data is linear or encoded as sRGB nonlinear. For example, VK_FORMAT_ASTC_8x6_SRGB_BLOCK is an RGBA ASTC compressed format with 8 × 6 blocks and sRGB encoded data.

For all formats including SRGB, only the R, G, and B channels use nonlinear encoding. The A channel is always stored with linear encoding.
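Because every format in these families stores a fixed number of bits per block, the storage needed for one level of a compressed image follows directly from the block dimensions. The sketch below shows that arithmetic. The BlockFormat struct, the compressedSize() function, and the three example constants are hypothetical helpers rather than part of the Vulkan API, and the calculation ignores any extra padding or alignment a particular implementation might require.

```cpp
#include <cassert>
#include <cstdint>

// Block dimensions in texels and block size in bytes for a compressed format.
struct BlockFormat {
    std::uint32_t blockWidth;
    std::uint32_t blockHeight;
    std::uint32_t bytesPerBlock;
};

// Bytes needed to store one 2D level of the given size. Partial blocks at
// the right and bottom edges still occupy a whole block.
std::uint64_t compressedSize(const BlockFormat& f,
                             std::uint32_t width, std::uint32_t height)
{
    assert(f.blockWidth > 0 && f.blockHeight > 0);
    const std::uint64_t blocksX =
        (static_cast<std::uint64_t>(width) + f.blockWidth - 1) / f.blockWidth;
    const std::uint64_t blocksY =
        (static_cast<std::uint64_t>(height) + f.blockHeight - 1) / f.blockHeight;
    return blocksX * blocksY * f.bytesPerBlock;
}

// Example block descriptions based on the sizes listed in this section.
const BlockFormat kBC1{4, 4, 8};        // 64 bits per 4 x 4 block
const BlockFormat kBC7{4, 4, 16};       // 128 bits per 4 x 4 block
const BlockFormat kASTC_8x6{8, 6, 16};  // 128 bits per 8 x 6 block
```

With these definitions, a 256 × 256 BC1 level occupies 32,768 bytes, compared with 262,144 bytes for the same level stored with four 8-bit channels per texel.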

Resource Views

Buffers and images are the two primary types of resources supported in Vulkan. In addition to creating these two resource types, you can create views of existing resources in order to partition them, reinterpret their content, or use them for multiple purposes. Views of buffers, which represent a subrange of a buffer object, are known as buffer views, and views of images, which can alias formats or represent a subresource of another image, are known as image views.

Before a view of a buffer or image can be created, you need to bind memory to the parent object.

Buffer Views

A buffer view is used to interpret the data in a buffer with a specific format. Because the raw data in the buffer is then treated as a sequence of texels, this is also known as a texel buffer view. A texel buffer view can be accessed directly in shaders, and Vulkan will automatically convert the texels in the buffer into the format expected by the shader. One example use for this functionality is to directly fetch the properties of vertices in a vertex shader by reading from a texel buffer rather than using a vertex buffer. While this is more restrictive, it does allow random access to the data in the buffer.

To create a buffer view, call vkCreateBufferView(), the prototype of which is

VkResult vkCreateBufferView (

VkDevice device,

const VkBufferViewCreateInfo* pCreateInfo,

const VkAllocationCallbacks* pAllocator,

VkBufferView* pView);

The device that is to create the new view is passed in device. This should be the same device that created the buffer of which you are creating a view. The remaining parameters of the new view are passed through a pointer to an instance of the VkBufferViewCreateInfo structure, the definition of which is

typedef struct VkBufferViewCreateInfo {

VkStructureType sType;

const void* pNext;

VkBufferViewCreateFlags flags;

VkBuffer buffer;

VkFormat format;

VkDeviceSize offset;

VkDeviceSize range;

} VkBufferViewCreateInfo;

The sType field of VkBufferViewCreateInfo should be set to VK_STRUCTURE_TYPE_BUFFER_VIEW_CREATE_INFO, and pNext should be set to nullptr. The flags field is reserved and should be set to 0. The parent buffer is specified in buffer. The new view will be a “window” into the parent buffer starting at offset bytes and extending for range bytes. When bound as a texel buffer, the data in the buffer is interpreted as a sequence of texels with the format as specified in format.

The maximum number of texels that can be stored in a texel buffer is determined by inspecting the maxTexelBufferElements field of the device’s VkPhysicalDeviceLimits structure, which can be retrieved by calling vkGetPhysicalDeviceProperties(). If the buffer is to be used as a texel buffer, then range divided by the size of a texel in format must be less than or equal to this limit. maxTexelBufferElements is guaranteed to be at least 65,536, so if the view you’re creating contains fewer texels, there’s no need to query this limit.
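The limit check described above amounts to a single integer comparison. A minimal sketch follows, in which texelBufferViewFits() and its parameter names are hypothetical, texelSize is the size in bytes of one texel in the view's format, and range is assumed to be an explicit byte count rather than a special "whole buffer" value.

```cpp
#include <cassert>
#include <cstdint>

// Smallest value an implementation may report for maxTexelBufferElements.
const std::uint32_t kMinGuaranteedTexelBufferElements = 65536;

// Returns true if a view covering 'range' bytes, holding texels of
// 'texelSize' bytes each, stays within the device's texel-count limit.
bool texelBufferViewFits(std::uint64_t range, std::uint32_t texelSize,
                         std::uint32_t maxTexelBufferElements)
{
    assert(texelSize > 0);
    return range / texelSize <= maxTexelBufferElements;
}
```

Passing kMinGuaranteedTexelBufferElements as the limit tells you whether the view is small enough to be valid on any implementation without querying the device.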

The parent buffer must have been created with the VK_BUFFER_USAGE_UNIFORM_TEXEL_BUFFER_BIT or VK_BUFFER_USAGE_STORAGE_TEXEL_BUFFER_BIT flags in the usage field of the VkBufferCreateInfo used to create the buffer. The specified format must support the VK_FORMAT_FEATURE_UNIFORM_TEXEL_BUFFER_BIT, VK_FORMAT_FEATURE_STORAGE_TEXEL_BUFFER_BIT, or VK_FORMAT_FEATURE_STORAGE_TEXEL_BUFFER_ATOMIC_BIT as reported by vkGetPhysicalDeviceFormatProperties().

On success, vkCreateBufferView() places the handle to the newly created buffer view in the variable pointed to by pView. If pAllocator is not nullptr, then the allocation callbacks specified in the VkAllocationCallbacks structure it points to are used to allocate any host memory required by the new object.

Image Views

In many cases, the image resource cannot be used directly, as more information about it is needed than is included in the resource itself. For example, you cannot use an image resource directly as an attachment to a framebuffer or bind an image into a descriptor set in order to sample from it in a shader. To satisfy these additional requirements, you must create an image view, which is essentially a collection of properties and a reference to a parent image resource.

An image view also allows all or part of an existing image to be seen as a different format. The resulting view of the parent image must have the same dimensions as the parent, although a subset of the parent’s array layers or mip levels may be included in the view. The format of the parent and child images must also be compatible, which usually means that they have the same number of bits per pixel, even if the data formats are completely different and even if there are a different number of channels in the image.

To create a new view of an existing image, call vkCreateImageView(), the prototype of which is

VkResult vkCreateImageView (

VkDevice device,

const VkImageViewCreateInfo* pCreateInfo,

const VkAllocationCallbacks* pAllocator,

VkImageView* pView);

The device that will be used to create the new view and that should own the parent image is specified in device. The remaining parameters used in the creation of the new view are passed through an instance of the VkImageViewCreateInfo structure, a pointer to which is passed in pCreateInfo. The definition of VkImageViewCreateInfo is

typedef struct VkImageViewCreateInfo {

VkStructureType sType;

const void* pNext;

VkImageViewCreateFlags flags;

VkImage image;

VkImageViewType viewType;

VkFormat format;

VkComponentMapping components;

VkImageSubresourceRange subresourceRange;

} VkImageViewCreateInfo;

The sType field of VkImageViewCreateInfo should be set to VK_STRUCTURE_TYPE_IMAGE_VIEW_CREATE_INFO, and pNext should be set to nullptr. The flags field is reserved for future use and should be set to 0.

The parent image of which to create a new view is specified in image. The type of view to create is specified in viewType. The view type must be compatible with the parent’s image type and is a member of the VkImageViewType enumeration, which is larger than the VkImageType enumeration used in creating the parent image. The image view types are as follows:

VK_IMAGE_VIEW_TYPE_1D, VK_IMAGE_VIEW_TYPE_2D, and VK_IMAGE_VIEW_TYPE_3D are the “normal” 1D, 2D, and 3D image types.

VK_IMAGE_VIEW_TYPE_CUBE and VK_IMAGE_VIEW_TYPE_CUBE_ARRAY are cube map and cube map array images.

VK_IMAGE_VIEW_TYPE_1D_ARRAY and VK_IMAGE_VIEW_TYPE_2D_ARRAY are 1D and 2D array images.

Note that all images are essentially considered array images, even if they only have one layer. It is, however, possible to create nonarray views of parent images that refer to one of the layers of the image.

The format of the new view is specified in format. This must be a format that is compatible with that of the parent image. In general, if two formats have the same number of bits per pixel, then they are considered compatible. If either or both of the formats is a block compressed image format, then one of two things must be true:

If both images have compressed formats, then the number of bits per block must match between those formats.

If only one image is compressed and the other is not, then bits per block in the compressed image must be the same as the number of bits per texel in the uncompressed image.

By creating an uncompressed view of a compressed image, you give access to the raw, compressed data, making it possible to do things like write compressed data from a shader into the image or interpret the compressed data directly in your application. Note that while all block-compressed formats encode blocks either as 64-bit or 128-bit quantities, there are no uncompressed, single-channel 64-bit or 128-bit image formats. To alias a compressed image as an uncompressed format, you need to choose an uncompressed format with the same number of bits per texel and then aggregate the bits from the different image channels within your shader to extract the individual fields from the compressed data.
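To illustrate the kind of bit extraction this involves, the sketch below assumes a BC1 image aliased through a two-channel, 32-bit unsigned integer format (for example, VK_FORMAT_R32G32_UINT), so that each texel fetched from the view delivers one 64-bit block as two 32-bit words. It further assumes the usual BC1 block layout of two 16-bit RGB565 endpoint colors followed by sixteen 2-bit selectors. The struct and function names are hypothetical, and the same logic would normally be written in shader code rather than C++.

```cpp
#include <cassert>
#include <cstdint>

// Fields of one BC1 block, assuming the layout described in the lead-in.
struct Bc1Block {
    std::uint16_t color0;     // first RGB565 endpoint
    std::uint16_t color1;     // second RGB565 endpoint
    std::uint32_t selectors;  // sixteen 2-bit selectors, texel (0, 0) lowest
};

// Split the two 32-bit channels fetched from the aliased view into fields.
Bc1Block unpackBc1(std::uint32_t r, std::uint32_t g)
{
    Bc1Block b;
    b.color0 = static_cast<std::uint16_t>(r & 0xFFFFu);
    b.color1 = static_cast<std::uint16_t>(r >> 16);
    b.selectors = g;
    return b;
}

// 2-bit selector for texel (x, y) within the 4 x 4 block.
std::uint32_t selectorAt(const Bc1Block& b, std::uint32_t x, std::uint32_t y)
{
    assert(x < 4 && y < 4);
    return (b.selectors >> (2u * (y * 4u + x))) & 0x3u;
}
```

Writing compressed data works the same way in reverse: the fields are packed back into the two channels before being stored through the view.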

The component ordering in the view may be different from that in the parent. This allows, for example, an RGBA view of a BGRA format image to be created. This remapping is specified using an instance of VkComponentMapping, the definition of which is simply

typedef struct VkComponentMapping {

VkComponentSwizzle r;

VkComponentSwizzle g;

VkComponentSwizzle b;

VkComponentSwizzle a;

} VkComponentMapping;

Each member of VkComponentMapping specifies the source of data in the parent image that will be used to fill the resulting texel fetched from the child view. They are members of the VkComponentSwizzle enumeration, the members of which are as follows:

VK_COMPONENT_SWIZZLE_R, VK_COMPONENT_SWIZZLE_G, VK_COMPONENT_SWIZZLE_B, and VK_COMPONENT_SWIZZLE_A indicate that the source data should be read from the R, G, B, or A channels of the parent image, respectively.

VK_COMPONENT_SWIZZLE_ZERO and VK_COMPONENT_SWIZZLE_ONE indicate that the data in the child image should be read as zero or one, respectively, regardless of the content of the parent image.

VK_COMPONENT_SWIZZLE_IDENTITY indicates that the data in the child image should be read from the corresponding channel in the parent image. Note that the numeric value of VK_COMPONENT_SWIZZLE_IDENTITY is zero, so simply setting the entire VkComponentMapping structure to zero will result in an identity mapping between child and parent images.
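The effect of a component mapping on a single texel can be modeled on the host as shown below. The Swizzle enumeration and ComponentMapping struct are stand-ins that mirror VkComponentSwizzle and VkComponentMapping so that the example compiles without the Vulkan headers, and resolve() and applyMapping() are hypothetical names. On a real device, this remapping is performed for you when the view is accessed.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Stand-in for VkComponentSwizzle; Identity has the value zero.
enum class Swizzle { Identity = 0, Zero, One, R, G, B, A };

// Stand-in for VkComponentMapping.
struct ComponentMapping {
    Swizzle r, g, b, a;
};

// Resolve one output channel. 'channel' is 0..3 for R, G, B, A and is the
// parent channel that Identity maps to.
float resolve(Swizzle s, std::size_t channel,
              const std::array<float, 4>& parent)
{
    assert(channel < 4);
    switch (s) {
    case Swizzle::Identity: return parent[channel];
    case Swizzle::Zero:     return 0.0f;
    case Swizzle::One:      return 1.0f;
    case Swizzle::R:        return parent[0];
    case Swizzle::G:        return parent[1];
    case Swizzle::B:        return parent[2];
    case Swizzle::A:        return parent[3];
    }
    return 0.0f;  // not reached for valid enumerants
}

// Produce the texel seen through the view from the parent's texel.
std::array<float, 4> applyMapping(const ComponentMapping& m,
                                  const std::array<float, 4>& parent)
{
    return {{ resolve(m.r, 0, parent), resolve(m.g, 1, parent),
              resolve(m.b, 2, parent), resolve(m.a, 3, parent) }};
}
```

A mapping of { B, Identity, R, One }, for instance, swaps the red and blue channels and forces alpha to one, while a zero-initialized ComponentMapping leaves the texel unchanged.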

The child image can be a subset of the parent image. This subset is specified using the embedded VkImageSubresourceRange structure in subresourceRange. The definition of VkImageSubresourceRange is

typedef struct VkImageSubresourceRange {

VkImageAspectFlags aspectMask;

uint32_t baseMipLevel;

uint32_t levelCount;

uint32_t baseArrayLayer;

uint32_t layerCount;

} VkImageSubresourceRange;

The aspectMask field is a bitfield made up from members of the VkImageAspectFlagBits enumeration specifying which aspects of the image are included in the view. Some image types have more than one logical part, even though the data itself might be interleaved or otherwise related. An example of this is depth-stencil images, which have both a depth component and a stencil component. Each of these two components may be viewable as a separate image in its own right, and these subimages are known as aspects. The flags that can be included in aspectMask are

VK_IMAGE_ASPECT_COLOR_BIT: The color part of an image. There is usually only a color aspect in color images.

VK_IMAGE_ASPECT_DEPTH_BIT: The depth aspect of a depth-stencil image.

VK_IMAGE_ASPECT_STENCIL_BIT: The stencil aspect of a depth-stencil image.

VK_IMAGE_ASPECT_METADATA_BIT: Any additional information associated with the image that might track its state and is used, for example, in various compression techniques.

When you create the new view of the parent image, that view can refer to only one aspect of the parent image. Perhaps the most common use case of this is to create a depth- or stencil-only view of a combined depth-stencil format image.

To create a new image view that corresponds only to a subset of the parent’s mip chain, use the baseMipLevel and levelCount to specify where in the mip chain the view begins and how many mip levels it will contain. If the parent image does not have mipmaps, these fields should be set to zero and one, respectively.

Likewise, to create an image view of a subset of a parent’s array layers, use the baseArrayLayer and layerCount fields to specify the starting layer and number of layers, respectively. Again, if the parent image is not an array image, then baseArrayLayer should be set to zero and layerCount should be set to one.
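Before creating a view, it can be useful to check that the requested mip and layer ranges actually lie within the parent image. The following is a minimal sketch of such a check. The SubresourceRange struct mirrors only the mip and layer fields of VkImageSubresourceRange, rangeFitsImage() is a hypothetical helper, and explicit counts are assumed (special "remaining levels or layers" values are not handled).

```cpp
#include <cassert>
#include <cstdint>

// Mirrors the mip and layer fields of VkImageSubresourceRange.
struct SubresourceRange {
    std::uint32_t baseMipLevel;
    std::uint32_t levelCount;
    std::uint32_t baseArrayLayer;
    std::uint32_t layerCount;
};

// Returns true if the range selects at least one level and one layer and
// stays within an image that has 'mipLevels' levels and 'arrayLayers' layers.
bool rangeFitsImage(const SubresourceRange& r,
                    std::uint32_t mipLevels, std::uint32_t arrayLayers)
{
    assert(mipLevels > 0 && arrayLayers > 0);
    return r.levelCount > 0 && r.layerCount > 0
        && r.baseMipLevel < mipLevels
        && r.levelCount <= mipLevels - r.baseMipLevel
        && r.baseArrayLayer < arrayLayers
        && r.layerCount <= arrayLayers - r.baseArrayLayer;
}
```

For an image without mipmaps or array layers, the only range that passes this check is { 0, 1, 0, 1 }.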

Image Arrays

The defined image types (VkImageType) include only VK_IMAGE_TYPE_1D, VK_IMAGE_TYPE_2D, or VK_IMAGE_TYPE_3D, which are used to create 1D, 2D, and 3D images, respectively. However, in addition to their sizes in each of the x, y, and z dimensions, all images have a layer count, contained in the arrayLayers field of their VkImageCreateInfo structure.

Images can be aggregated into arrays, and each element of an array image is known as a layer. Array images allow images to be grouped into single objects, and sampling from multiple layers of the same array image is often more performant than sampling from several loose image objects. Because all Vulkan images have an arrayLayers field, they are all technically array images. However, in practice, we refer only to images with an arrayLayers value greater than 1 as array images.

When views are created of images, the view is explicitly marked as either an array or a nonarray. A nonarray view implicitly has only one layer whereas an array view has multiple layers. Sampling from a nonarray view may perform better than sampling from a single layer of an array image, simply because the device needs to perform fewer indirections and parameter lookups.

A 1D array texture is conceptually different from a 2D texture, and a 2D array texture is different from a 3D texture. The primary difference is that linear filtering can be performed in the y direction of a 2D texture and in the z direction in a 3D texture, whereas filtering cannot be performed across multiple layers in an array image. Notice that there is no 3D array image view type included in VkImageViewType, and most Vulkan implementations will not allow you to create a 3D image with an arrayLayers field greater than 1.

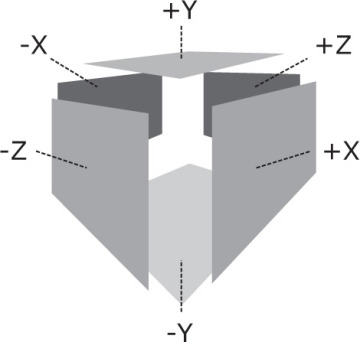

In addition to image arrays, a cube map is a special type of image that allows groups of six layers of an array image to be interpreted as the sides of a cube. Imagine standing in the center of a cube-shaped room. The room has four walls, a floor, and a ceiling. To your left and right are considered the negative and positive X directions, behind and in front of you are the negative and positive Z directions, and the floor and ceiling are the negative and positive Y directions. These faces are often notated as the +X, -X, +Y, -Y, +Z, and -Z faces. These are the six faces of a cube map, and a group of six consecutive array layers can be interpreted in that order.

A cube map is sampled using a 3D coordinate. This coordinate is interpreted as a vector pointing from the center of the cube map outward, and the point sampled in the cube-map is the point where the vector meets the cube. Again, put yourself back into the cube-map room and imagine you have a laser pointer. As you point the laser in different directions, the spot on the wall or ceiling is the point from which texture data is taken when the cube map is sampled.

Figure 2.4 shows this pictorially. As you can see in the figure, the cube map is constructed from a selection of six consecutive elements from the parent texture. To create a cube-map view, first create a 2D array image with at least six layers. The imageType field of the VkImageCreateInfo structure should be set to VK_IMAGE_TYPE_2D and the arrayLayers field should be at least 6. Note that the number of layers in the parent array doesn’t have to be a multiple of 6, but it has to be at least 6.

Figure 2.4: Cube Map Construction

The flags field of the parent image’s VkImageCreateInfo structure must have the VK_IMAGE_CREATE_CUBE_COMPATIBLE_BIT set, and the image must be square (because the faces of a cube are square).

Next, we create a view of the 2D array parent, but rather than creating a normal 2D (array) view of the image, we create a cube-map view. To do this, set the viewType field of the VkImageViewCreateInfo structure used to create the view to VK_IMAGE_VIEW_TYPE_CUBE. In the embedded subresourceRange field, the baseArrayLayer and layerCount fields are used to determine where in the array the cube map begins. To create a single cube, layerCount should be set to 6.

The first element of the array (at the index specified in the baseArrayLayer field) becomes the +X face, and the next five layers become the -X, +Y, -Y, +Z, and -Z faces, in that order.

Cube maps can also form arrays of their own. This is simply a concatenation of an integer multiple of six faces, with each group of six forming a separate cube. To create a cube-map array image, set the viewType field of VkImageViewCreateInfo to VK_IMAGE_VIEW_TYPE_CUBE_ARRAY, and set the layerCount to a multiple of 6. The number of cubes in the array is therefore the layerCount for the array divided by 6. The number of layers in the parent image must be at least as many layers as are referenced by the cube-map view.
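The relationship between cubes, faces, and array layers is simple index arithmetic, sketched below. The function names are hypothetical, and the face parameter is just an index from 0 to 5 counted in the face order described earlier in this section.

```cpp
#include <cassert>
#include <cstdint>

// Number of whole cubes described by a cube-map array view.
std::uint32_t cubeCount(std::uint32_t layerCount)
{
    assert(layerCount % 6 == 0);  // a cube-map array needs groups of six
    return layerCount / 6;
}

// Array layer in the parent image that holds the given face of the given
// cube, for a view that starts at 'baseArrayLayer'.
std::uint32_t cubeFaceLayer(std::uint32_t baseArrayLayer,
                            std::uint32_t cube, std::uint32_t face)
{
    assert(face < 6);
    return baseArrayLayer + cube * 6 + face;
}
```

For a view with baseArrayLayer set to 3 and layerCount set to 12, cubeCount() yields two cubes, and the third face of the second cube lives in layer 11 of the parent image.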

When data is placed in a cube map or cube-map array image, it is treated identically to an array image. Each array layer is laid out consecutively, and commands such as vkCmdCopyBufferToImage() (which is covered in Chapter 4, “Moving Data”) can be used to write into the image. The image can be bound as a color attachment and rendered to. Using layered rendering, you can even write to multiple faces of a cube map in a single drawing command.

Destroying Resources

When you are done with buffers, images, and other resources, it is important to destroy them cleanly. Before destroying a resource, you must make sure that it is not in use and that no work is pending that might access it. Once you are certain that this is the case, you can destroy the resource by calling the appropriate destruction function. To destroy a buffer resource, call vkDestroyBuffer(), the prototype of which is

void vkDestroyBuffer (

VkDevice device,

VkBuffer buffer,

const VkAllocationCallbacks* pAllocator);

The device that owns the buffer object should be specified in device, and the handle to the buffer object should be specified in buffer. If a host memory allocator was used to create the buffer object, a compatible allocator should be specified in pAllocator; otherwise, pAllocator should be set to nullptr.

Note that destroying a buffer object for which other views exist will also invalidate those views. The view objects themselves must still be destroyed explicitly, but it is not legal to access a view of a buffer that has been destroyed. To destroy a buffer view, call vkDestroyBufferView(), the prototype of which is

void vkDestroyBufferView (

VkDevice device,

VkBufferView bufferView,

const VkAllocationCallbacks* pAllocator);

Again, device is a handle to the device that owns the view, and bufferView is a handle to the view to be destroyed. pAllocator should point to a host memory allocator compatible with that used to create the view or should be set to nullptr if no allocator was used to create the view.

Destruction of images is almost identical to that of buffers. To destroy an image object, call vkDestroyImage(), the prototype of which is

void vkDestroyImage (

VkDevice device,

VkImage image,

const VkAllocationCallbacks* pAllocator);

device is the device that owns the image to be destroyed, and image is the handle to that image. Again, if a host memory allocator was used to create the original image, then pAllocator should point to one compatible with it; otherwise, pAllocator should be nullptr.

As with buffers, destroying an image invalidates all views of that image. It is not legal to access a view of an image that has already been destroyed. The only thing you can do with such views is to destroy them. Destroying an image view is accomplished by calling vkDestroyImageView(), the prototype of which is

void vkDestroyImageView (

VkDevice device,

VkImageView imageView,

const VkAllocationCallbacks* pAllocator);

As you might expect, device is the device that owns the view being destroyed, and imageView is the handle to that view. As with all other destruction functions mentioned so far, pAllocator is a pointer to an allocator compatible with the one used to create the view or nullptr if no allocator was used.