Classifying Tests

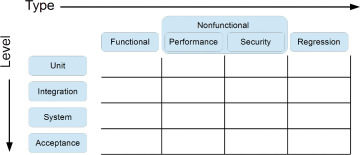

There are numerous ways to test software. Depending on the type of information we want to discover about it and the kind of feedback we’re interested in, a certain way of testing may be more appropriate than another. Tests are traditionally classified along two dimensions: test level and test type (see Figure 3.1). Combining them into a matrix provides a helpful visualization of the team’s testing activities.

Figure 3.1 Test levels and types covered in this chapter.

Test Levels

A test level can be thought of as expressing the proximity to the source code and the footprint of the test. As an example, unit tests are close to the source code and cover a few lines. In contrast, acceptance tests aren’t concerned with implementation details and may span multiple systems and processes, thus having a very large footprint.

Unit Test

Unit testing refers to authoring fast, low-level tests that target a small part of the system (Fowler 2014). Because of their natural coupling to the code, they’re written by developers and executed by unit testing frameworks.

This sounds simple enough, but the term comes with its gray areas: size and scope of a unit of work, collaborator isolation, and execution speed. Where the boundary of a unit is drawn depends on the programming language and type of system. A unit test may exercise a function or method, a class, or even a cluster of collaborating classes that provide some specific functionality. This description may seem fuzzy, but given some experience, it’s easy to spot unit tests that don’t make sense or are too complicated. Collaborator isolation, along with speed of execution, is subject to more intense debate. There are those who mandate that a unit test isolate all collaborators of the tested code. Others strive for a less ascetic approach and isolate only collaborators that, when invoked, would make the test fail because of unavailable or unreachable resources or external hosts. In either case, execution speed isn’t an issue. Finally, some people argue that unit tests don’t have to replace slower collaborators at all as long as the test is otherwise simple and to the point. This book uses a definition of unit testing that fits the second of the three aforementioned variants.
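As a minimal sketch of the second variant, the test below replaces only the collaborator that would need an unreachable external host. The names `PriceCalculator`, `rate_for`, and the exchange-rate service are hypothetical and serve purely as illustration; the stub comes from Python’s standard `unittest.mock` module.

```python
import unittest
from unittest.mock import Mock


class PriceCalculator:
    """Hypothetical unit under test; depends on a remote exchange-rate service."""

    def __init__(self, rate_service):
        self._rate_service = rate_service

    def price_in(self, currency, amount_eur):
        # In production this collaborator would make a network call.
        rate = self._rate_service.rate_for(currency)
        return round(amount_eur * rate, 2)


class PriceCalculatorTest(unittest.TestCase):
    def test_converts_amount_using_current_rate(self):
        # Isolate only the collaborator that requires an external host.
        rate_service = Mock()
        rate_service.rate_for.return_value = 1.25

        calculator = PriceCalculator(rate_service)

        self.assertEqual(calculator.price_in("USD", 80.0), 100.0)
        rate_service.rate_for.assert_called_once_with("USD")
```

The test can be run with `python -m unittest`. Everything else that `PriceCalculator` uses (here, only built-in arithmetic) is left untouched, which is what distinguishes this approach from isolating all collaborators.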

When doing research for this book, I found that some sources used the terms unit and component more or less interchangeably, in which case both referred to a rather small artifact that can be tested in isolation. To a developer, however, a unit and a component mean different things. As stated previously, a unit of work is a small chunk of functionality that can be tested in a meaningful way. Components have a more elusive definition, but the authors of Continuous Delivery: Reliable Software Releases through Build, Test, and Deployment Automation nail it quite well: “. . . a reasonably large-scale code structure within an application, with well-defined API, that could potentially be swapped out for another implementation” (Humble & Farley 2010). This definition happens to coincide with how components are described in the literature about software architecture. Thus, components are much larger than units and require more sophisticated tests.

Integration Test

The term integration test is unfortunately both ambiguous and overloaded. The ambiguity comes from the fact that “integration” may refer either to two systems or components talking to each other via some kind of remote procedure call (RPC), a database, or a message bus, or to the catch-all notion that “an integration test is that which is not a unit test and not a system test.”

There is a point in maintaining this distinction. Because two systems communicate through a (hopefully) well-defined interface, checking that they talk to each other correctly is most naturally done with black box testing. Traditionally, this would fall into the tester’s domain.

It’s the second definition, encountered frequently enough, that gives rise to the overloading. The common reasoning goes something like the following, where Tracy Tester and David Developer argue about a test:

Tracy: Have you tested that the complex customer record is written correctly to the database?

David: Sure! I wrote a unit test where I stubbed out the database. Piece of cake!

Tracy: But the database contains both some triggers and constraints that could affect the persistence of the customer record. I don’t think your unit test can account for that.

David: Then it’s your job to test it! You’re responsible for the system tests.

Tracy: I’m not sure whether the database is a “system.” After all it’s your way of implementing persistence. And besides, wouldn’t you want to be certain that persisting the complex customer record won’t be messed up by somebody else on the team? Sure, I can test this manually, but there are only so many times I can do it.

David: You’re right, I guess. I need a test that runs in an automated manner, like a unit test, but more advanced. It must talk to the database. Hmm ... Let’s call this an integration test! After all, we’re integrating the system with the database.

Tracy: ...

Based on the preceding logic, a test that opens a file to write “Hello world” to it or just outputs the same string on the screen isn’t a unit test. Because it’s definitely not a system test, it must be an integration test by analogy. After all, something is integrated with the file system. Confused yet?

Integration tests, as per the second definition, are often intimately coupled to the source code. Given that the line where a test stops being a unit test and becomes something else is blurry and debated, many integration tests will feel like advanced or slower unit tests. Because of this, it shouldn’t be controversial that integration testing really is a developer’s job. The hard part is defining where that job starts and ends.
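The kind of test David ends up asking for might look like the following sketch. It assumes a hypothetical `customer` table and `save_customer` function, and it uses an in-memory SQLite database from Python’s standard library as a stand-in for the real one. The point is that the database is actually exercised, so its constraints can affect the outcome.

```python
import sqlite3
import unittest


def save_customer(connection, name, email):
    """Hypothetical persistence code: inserts one customer record."""
    connection.execute(
        "INSERT INTO customer (name, email) VALUES (?, ?)", (name, email)
    )


class CustomerPersistenceIntegrationTest(unittest.TestCase):
    def setUp(self):
        # A real (in-memory) database, so constraints are actually enforced.
        self.connection = sqlite3.connect(":memory:")
        self.connection.execute(
            "CREATE TABLE customer ("
            " id INTEGER PRIMARY KEY,"
            " name TEXT NOT NULL,"
            " email TEXT NOT NULL UNIQUE)"
        )

    def tearDown(self):
        self.connection.close()

    def test_customer_is_written(self):
        save_customer(self.connection, "Ada", "ada@example.com")
        row = self.connection.execute(
            "SELECT name, email FROM customer"
        ).fetchone()
        self.assertEqual(row, ("Ada", "ada@example.com"))

    def test_duplicate_email_is_rejected_by_the_database(self):
        save_customer(self.connection, "Ada", "ada@example.com")
        # The UNIQUE constraint lives in the database; a stub could not catch this.
        with self.assertRaises(sqlite3.IntegrityError):
            save_customer(self.connection, "Eve", "ada@example.com")
```

The test looks and runs like a unit test, yet the second test case would be impossible to write against a stubbed-out database. That is exactly the gap Tracy points out.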

System Test

Systems are made up of finished and integrated building blocks, which may be components or other systems. System testing is the activity of verifying that the entire system works. System tests are often executed from a black box perspective and exercise integrations and processes that span large parts of the system. A word of caution about system testing: if the individual systems or components have been tested in isolation and have gone through integration testing, system testing will actually target the overall functionality of the system. However, if the underlying building blocks have remained untested, system tests will reveal defects that should have been caught by simpler and cheaper tests, like unit tests. In the worst cases, organizations with immature development processes, that is, where the developers just throw code over the wall for testing, have to compensate by relying solely on system tests run by dedicated QA people.

Acceptance Test

In its traditional meaning, acceptance testing refers to an activity performed by the end users to validate that the software they received conforms to the specifications and their expectations and is ready for use. Alas, the term has been kidnapped. Nowadays the aforementioned activity is called user acceptance testing (UAT) (Cimperman 2006), whereas acceptance testing tends to refer to automated black box testing performed by a framework to ensure that a story or part of a story has been correctly implemented. The major acceptance test frameworks gladly promote this definition.

Test Types

Test type refers to the purpose of the test and its specific objective. It may be to verify functionality at some level or to target a certain quality attribute. The most prevalent distinction between test types is that between functional and nonfunctional testing. The latter can be refined to target as many quality attributes as necessary. Regression testing is also a kind of testing that can be performed at all test levels, so it makes sense to treat it as a test type.

Functional Testing

Functional testing constitutes the core of testing. In the vast majority of cases, saying that something needs testing refers to functional testing. Functional testing is the act of executing the software and checking whether its behavior matches explicit expectations, feeding it different inputs and comparing the results with the specification, and exploring it beyond the explicit specification to see if it violates any implicit expectations. Depending on the scope of the test, the specification may be an expected value, a table of values, a use case, a specification document, or even tacit knowledge. At its most fundamental, functional testing answers the questions:

Does the software do what it was intended to do?

Does it not do what it was not intended to do?

Developers will most often encounter functional tests at the unit test level, simply because they write far more unit tests than any other kind of test. However, functional testing applies to all test levels: unit, integration, system, and acceptance.
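At the unit level, the two questions above can be illustrated with a small sketch in which the specification is a table of values. The `shipping_fee` function and its business rule are invented for the example and are not taken from any real system.

```python
import unittest


def shipping_fee(order_total):
    """Hypothetical rule: orders of 50.00 or more ship free, others cost 4.90."""
    if order_total < 0:
        raise ValueError("order total cannot be negative")
    return 0.0 if order_total >= 50.0 else 4.90


# The specification expressed as a table of inputs and expected values.
SPECIFICATION = [
    (0.0, 4.90),
    (49.99, 4.90),
    (50.0, 0.0),
    (120.0, 0.0),
]


class ShippingFeeTest(unittest.TestCase):
    def test_fee_matches_specification(self):
        # Does the software do what it was intended to do?
        for order_total, expected_fee in SPECIFICATION:
            with self.subTest(order_total=order_total):
                self.assertEqual(shipping_fee(order_total), expected_fee)

    def test_negative_total_is_rejected(self):
        # Does it not do what it was not intended to do?
        with self.assertRaises(ValueError):
            shipping_fee(-1.0)
```

The first test compares results with the explicit specification, whereas the second probes an implicit expectation that the table does not mention.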

Nonfunctional Testing

Nonfunctional testing, which by the way is a very unfortunate name, targets a solution’s quality attributes such as usability, reliability, performance, maintainability, and portability, to name a few. Some of them will be discussed further later on.

Quality attributes are sometimes expressed as nonfunctional requirements, hence the relation to nonfunctional testing.

Performance Testing

Performance testing focuses on a system’s responsiveness, throughput, and reliability given different loads. How fast does a web page load? If a user clicks a button on the screen, are the contents immediately updated? How long does it take to process 10,000 payment transactions? All of these questions can be asked for different loads.

Under light or normal load, they may indeed be answered by a performance test. However, as the load on the system is increased—let’s say by more and more users using the system at the same time, or more transactions being processed per second—we’re talking about load testing. The purpose of load testing is to determine the system’s behavior in response to increased load. When the load is increased beyond the maximum “normal load,” load testing turns into stress testing. A special type of stress testing is spike testing, where the maximum normal load is exceeded very rapidly, as if there were a spike in the load. Running the aforementioned tests helps in determining the capacity, the scaling strategy, and the location of the bottlenecks.

Performance testing usually requires a specially tailored environment or software capable of generating the required load and a way of measuring it.
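The idea of asking the same question for different loads can be sketched with a tiny measuring harness. It is only an illustration: `process_payment` is a hypothetical stand-in for real work, the load levels are arbitrary, and a real performance test would use a dedicated load generator and a production-like environment.

```python
import time


def process_payment(amount):
    """Hypothetical stand-in for real payment processing."""
    return round(amount * 1.02, 2)


def measure_throughput(transaction_count):
    """Process the given number of transactions; return (seconds, per_second)."""
    start = time.perf_counter()
    for i in range(transaction_count):
        process_payment(100.0 + i)
    elapsed = time.perf_counter() - start
    # Guard against a zero reading on very coarse clocks.
    per_second = transaction_count / elapsed if elapsed > 0 else float("inf")
    return elapsed, per_second


def run_load_profile(loads):
    """Repeat the measurement for each load level, from light to heavy."""
    return {load: measure_throughput(load) for load in loads}


if __name__ == "__main__":
    profile = run_load_profile([1_000, 10_000, 100_000])
    for load, (elapsed, per_second) in profile.items():
        print(f"{load:>7} transactions: {elapsed:.4f} s, {per_second:,.0f}/s")
```

Comparing throughput across the load levels hints at where it starts to degrade, which is the kind of information needed to reason about capacity and bottlenecks.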

Security Testing

This type of testing may require a very mixed set of skills and is typically performed by trained security professionals. Security testing may be performed as an audit, the purpose of which is to validate policies, or it may be done more aggressively in the form of a penetration test, the purpose of which is to compromise the system using black hat techniques.

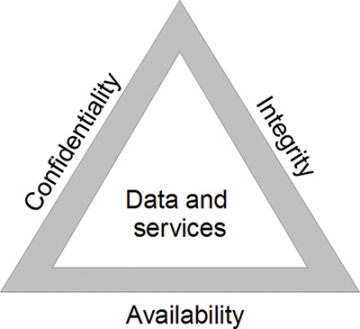

There are various aspects of security. The security triad known as CIA is a common model that brings them all together (Stallings & Brown 2007). Figure 3.2 provides an illustration of the concepts in the triad. They include the following:

Confidentiality

Data confidentiality: Private or confidential information stays that way.

Privacy: You have a degree of control over what information is stored about you, how, and by whom.

Integrity

Data integrity: Information and programs are changed only by trusted sources.

System integrity: The system performs the way it’s supposed to without being compromised.

Availability

Resources are available to authorized users and denied to others.

Figure 3.2 The CIA security triad.

Each leg of the CIA triangle can be subject to a practically unlimited number of attacks. Whereas some of them will assume the shape of social engineering or manipulation of the underlying operating system or network stack, many of them will make use of exploits that wouldn’t be possible without defects in the software (developer work!). It follows that a developer should, by profession, know at least the basics of how to make an application resilient to the most common attacks.

The way security testing has been described so far really makes it sound like nonfunctional testing. However, the term functional security testing also exists (Bath & McKay 2008). It refers to testing security as performed by a “regular” tester. A functional security test may, for example, be about logging in as a nonprivileged user and attempting to do something in the system that only users with administrative privileges are allowed to do.

Normally, when we talk about security testing, we refer to the nonfunctional kind.
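For contrast, a functional security test of the kind just described could be sketched as follows. The `UserAdministration` class, the role names, and the `PermissionDenied` exception are all hypothetical; a real test would log in through the actual system.

```python
import unittest


class PermissionDenied(Exception):
    """Raised when a user attempts an operation they are not allowed to perform."""


class UserAdministration:
    """Hypothetical system under test with a single privileged operation."""

    def __init__(self):
        self.deleted_users = []

    def delete_user(self, acting_user_role, username):
        if acting_user_role != "admin":
            raise PermissionDenied(f"{acting_user_role} may not delete users")
        self.deleted_users.append(username)


class FunctionalSecurityTest(unittest.TestCase):
    def test_nonprivileged_user_cannot_delete_users(self):
        administration = UserAdministration()
        with self.assertRaises(PermissionDenied):
            administration.delete_user("guest", "alice")
        # The forbidden operation must also leave no side effects.
        self.assertEqual(administration.deleted_users, [])

    def test_administrator_can_delete_users(self):
        administration = UserAdministration()
        administration.delete_user("admin", "alice")
        self.assertEqual(administration.deleted_users, ["alice"])
```

Nothing here requires black hat techniques; the test simply verifies an access rule the way any other functional requirement would be verified.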

Regression Testing

How do we know that the system still behaves like it’s supposed to once we’ve changed some functionality or fixed a bug? How do we know that we haven’t broken anything? Enter regression testing.

The purpose of regression testing is to establish whether changes to the system have broken existing functionality or caused old defects to resurface. Traditionally, regression testing has been performed by rerunning a number of, or all, test cases on a system after changes have been made. In projects where tests are automated, regression testing isn’t much of a challenge. The test suite is simply executed once more. In fact, as soon as a test is added to an automated suite of tests, it becomes a regression test.
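The last point can be illustrated with a small sketch. The `average` function and its bug history are made up for the example: suppose an earlier version crashed on an empty list, and a test was added together with the fix.

```python
import unittest


def average(values):
    """Return the arithmetic mean; an empty sequence yields 0.0.

    Hypothetical history: an earlier version raised ZeroDivisionError on [].
    """
    if not values:
        return 0.0
    return sum(values) / len(values)


class AverageTest(unittest.TestCase):
    def test_average_of_several_values(self):
        self.assertEqual(average([2, 4, 6]), 4.0)

    def test_empty_input_returns_zero(self):
        # Added with the bug fix; from now on it guards against
        # the old defect resurfacing.
        self.assertEqual(average([]), 0.0)
```

Once both tests are part of the automated suite, every subsequent run doubles as a regression test for the existing behavior and for the fixed defect.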

The true challenge of regression testing faces organizations that have neither a traditional QA department or tester group nor automated tests. In such organizations, regression testing quickly turns into the Smack-a-Bug game.

Putting Test Levels and Types to Work

Maintaining a clear distinction between the various test levels and types may sound quite rigid and academic, but it can have its advantages.

The first advantage is that all cards are on the table. The team clearly sees what activities there are to consider and may plan accordingly. Some testing will make it to the Definition of Done for every story, some testing may be done on an iteration basis, and some may be deferred to particular releases or a final delivery. Some might call this “agreeing on a testing strategy.” If this isn’t good enough and the team has decided on continuous deployment, having a chart of what to automate and in what order helps the team make informed decisions. Combinations of test levels and types map quite nicely to distinct steps in a continuous delivery pipeline.

A second positive effect is that the team gets to talk about its combined skill set, as the various kinds of tests require different levels of effort, time, resources, training, and experience. Relatively speaking, unit tests are simple. They take little time to write and maintain. On the other hand, some types of nonfunctional tests, like performance tests, may require specific expertise and tooling. Discussing how to address such a span of testing work and the kind of feedback that can be gained from it should help the team reach shared learning and improvement goals.

Third, we shouldn’t neglect the usefulness of having a crystal-clear picture of what not to do. For example, a team may decide not to do any nonfunctional integration testing. This means that nobody will be blamed if an integration between two components is slow. The issue still needs to be resolved, but at least it was agreed that testing for such a problem wasn’t a priority.

Finally, in larger projects where several teams are involved, being explicit about testing and quality assurance may help to avoid misunderstandings, omissions, blame, and potential conflicts. Again, a simple matrix of test levels and test types may serve as the basis for a discussion.
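Such a matrix need not be more than a table on a whiteboard, but it can also be captured as data. The sketch below is one possible representation; the enum names, the cadence strings, and the decisions themselves are hypothetical examples of what a team might agree on.

```python
from enum import Enum


class Level(Enum):
    UNIT = "unit"
    INTEGRATION = "integration"
    SYSTEM = "system"
    ACCEPTANCE = "acceptance"


class Kind(Enum):
    FUNCTIONAL = "functional"
    PERFORMANCE = "performance"
    SECURITY = "security"
    REGRESSION = "regression"


# Hypothetical team decisions; None marks a combination
# the team explicitly agreed not to cover.
MATRIX = {
    (Level.UNIT, Kind.FUNCTIONAL): "every story (Definition of Done)",
    (Level.INTEGRATION, Kind.FUNCTIONAL): "every story (Definition of Done)",
    (Level.INTEGRATION, Kind.PERFORMANCE): None,
    (Level.SYSTEM, Kind.PERFORMANCE): "every release",
    (Level.SYSTEM, Kind.SECURITY): "final delivery",
    (Level.ACCEPTANCE, Kind.FUNCTIONAL): "every iteration",
}


def decision_for(level, kind):
    """Return what the team agreed on for one cell of the matrix."""
    if (level, kind) not in MATRIX:
        return "not yet discussed"
    cadence = MATRIX[(level, kind)]
    return "explicitly out of scope" if cadence is None else cadence


if __name__ == "__main__":
    for level in Level:
        for kind in Kind:
            print(f"{level.value:<12} {kind.value:<12} {decision_for(level, kind)}")
```

Distinguishing between “explicitly out of scope” and “not yet discussed” reflects the point about knowing what not to do: an empty cell is an open question, whereas a deliberate omission is a decision.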